As previously mentioned, zuul has new drives.

It is now time to replace the existing drives with the new drives. I will do this one at a time: replace, then wait for the resilver. At no time will the mirror be degraded (as it would be if we removed an old drive first and then added its replacement to the mirror).

In this approach, we will mirror one of the drives to a new drive, and ZFS will automatically remove the old drive when the mirroring process is completed.

In this post:

- FreeBSD 12.0

- old drives: ada0 & ada1

- new drives: ada2 & ada3

- graphs produced by LibreNMS

The existing zpool and drives

The zpool is:

[dan@zuul:~] $ zpool status

pool: system

state: ONLINE

scan: scrub repaired 0 in 0 days 07:02:10 with 0 errors on Wed Jul 17 12:18:13 2019

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/disk0 ONLINE 0 0 0

gpt/disk1 ONLINE 0 0 0

errors: No known data errors

[dan@zuul:~] $

The drives are:

[dan@zuul:~] $ gpart show

=> 34 976773101 ada0 GPT (466G)

34 6 - free - (3.0K)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16777216 2 freebsd-swap (8.0G)

16779264 954204160 3 freebsd-zfs (455G)

970983424 5789711 - free - (2.8G)

=> 34 976773101 ada1 GPT (466G)

34 6 - free - (3.0K)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16777216 2 freebsd-swap (8.0G)

16779264 954204160 3 freebsd-zfs (455G)

970983424 5789711 - free - (2.8G)

[dan@zuul:~] $

I feel this is cheating. If there were many more drives in the system, this approach would not work. I want to show the drive details based on what I see in zpool status.

I searched my blog for gpt/disk0 and found the answer in Adding a failed HDD back into a ZFS mirror:

[dan@zuul:~] $ glabel status

Name Status Components

gpt/bootcode0 N/A ada0p1

gptid/7a204641-5ed6-11e3-ab70-74d02b981922 N/A ada0p1

gpt/swapada0 N/A ada0p2

gpt/disk0 N/A ada0p3

gpt/bootcode1 N/A ada1p1

gptid/7a62ee07-5ed6-11e3-ab70-74d02b981922 N/A ada1p1

gpt/swapada1 N/A ada1p2

gpt/disk1 N/A ada1p3

diskid/DISK-WD-WMAYP6365671 N/A ada2

diskid/DISK-WD-WMAYP6365619 N/A ada3

[dan@zuul:~] $

Looking at lines 6 and 10 of that output, I can see that gpt/disk0 is on ada0. Similarly, gpt/disk1 is on ada1. Specifically, they are partition number 3 on each of those drives. That is what the p3 means.
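With more drives in the system, reading the glabel output by eye gets tedious. Here is a minimal sketch of pulling out the mapping with awk. The function name provider_for_label is my own invention for illustration, and it assumes the three-column layout (Name, Status, Components) shown above.

```shell
# provider_for_label LABEL
# Read `glabel status` output on stdin and print the component
# (for example ada0p3) whose Name column matches LABEL exactly.
provider_for_label() {
    awk -v label="$1" '$1 == label { print $3 }'
}

# Typical use on the live system (not run here):
#   glabel status | provider_for_label gpt/disk0
```

An exact match on the first column is used so that gpt/disk1 cannot accidentally match something like gpt/disk10.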

Prepare the new drives

I have already run badblocks on the two new drives. I did this because I could, and because it helps identify bad drives.

Next, I will partition the new drives exactly like the existing drives. Instead of doing this manually, gpart can duplicate it via a copy.

As taken from https://forums.freebsd.org/threads/copying-partitioning-to-new-disk.60937/, I am using this command:

[dan@zuul:~] $ gpart backup ada0 | sudo gpart restore ada2 ada3

[dan@zuul:~] $

This copies the partition table from ada0 to both ada2 and ada3.

Here is what we have now:

[dan@zuul:~] $ gpart show ada2 ada3

=> 40 976773088 ada2 GPT (466G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16777216 2 freebsd-swap (8.0G)

16779264 954204160 3 freebsd-zfs (455G)

970983424 5789704 - free - (2.8G)

=> 40 976773088 ada3 GPT (466G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16777216 2 freebsd-swap (8.0G)

16779264 954204160 3 freebsd-zfs (455G)

970983424 5789704 - free - (2.8G)

[dan@zuul:~] $
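zpool replace needs the new device to be at least as large as the one it replaces, so it is worth confirming the freebsd-zfs partition sizes match. The following is a small sketch for doing that from gpart show output. The function names are hypothetical, and it assumes 512-byte sectors and one freebsd-zfs partition per disk, as is the case on these drives.

```shell
# zfs_sectors
# Read `gpart show` output for ONE disk on stdin and print the size,
# in sectors, of its freebsd-zfs partition (column 2 of that row).
zfs_sectors() {
    awk '$4 == "freebsd-zfs" { print $2 }'
}

# sectors_to_gib SECTORS
# Convert a count of 512-byte sectors to whole GiB (integer division).
sectors_to_gib() {
    echo $(( $1 * 512 / 1073741824 ))
}

# Typical use on the live system (not run here):
#   old=$(gpart show ada0 | zfs_sectors)
#   new=$(gpart show ada2 | zfs_sectors)
#   [ "$new" -ge "$old" ] && echo "ada2 is big enough"
```

For the partitions above, 954204160 sectors works out to 455 GiB, which agrees with the (455G) that gpart prints.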

Now I’m ready to start the replacement.

Oh wait, these are not new drives

These are used drives. Let’s verify we do not have some left-over ZFS labels.

[dan@zuul:~] $ sudo zdb -l /dev/ada2

------------------------------------

LABEL 0

------------------------------------

failed to unpack label 0

------------------------------------

LABEL 1

------------------------------------

failed to unpack label 1

------------------------------------

LABEL 2

------------------------------------

failed to unpack label 2

------------------------------------

LABEL 3

------------------------------------

failed to unpack label 3

[dan@zuul:~] $ sudo zdb -l /dev/ada3

------------------------------------

LABEL 0

------------------------------------

failed to unpack label 0

------------------------------------

LABEL 1

------------------------------------

failed to unpack label 1

------------------------------------

LABEL 2

------------------------------------

failed to unpack label 2

------------------------------------

LABEL 3

------------------------------------

failed to unpack label 3

[dan@zuul:~] $

Good. They are empty. If they had lingering data, it would look like what you see in Adding a failed HDD back into a ZFS mirror.
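ZFS keeps four labels per device, which is why zdb reports on LABEL 0 through LABEL 3. If you have several drives to check, counting the failures is quicker than reading each block. This is only a sketch: the function name is made up, and it relies on the exact "failed to unpack label" wording shown above.

```shell
# count_unreadable_labels
# Read `zdb -l` output on stdin and print how many label slots
# could not be unpacked. Four means no leftover ZFS labels were found.
count_unreadable_labels() {
    grep -c 'failed to unpack label'
}

# Typical use on the live system (not run here):
#   sudo zdb -l /dev/ada2 | count_unreadable_labels
```

Anything less than 4 means at least one label was readable and deserves a closer look before the drive goes into a pool.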

Serial numbers as drive labels

I like to use serial numbers as drive labels. It makes it clear which drive needs to be replaced or kept when the time comes.

Here is the serial information:

[dan@zuul:~] $ grep ada2 /var/run/dmesg.boot

ada2 at ahcich2 bus 0 scbus2 target 0 lun 0

ada2: <WDC WD5003ABYX-18WERA0 01.01S03> ATA8-ACS SATA 2.x device

ada2: Serial Number WD-WMAYP6365671

ada2: 300.000MB/s transfers (SATA 2.x, UDMA6, PIO 8192bytes)

ada2: Command Queueing enabled

ada2: 476940MB (976773168 512 byte sectors)

[dan@zuul:~] $ grep ada3 /var/run/dmesg.boot

ada3 at ahcich3 bus 0 scbus3 target 0 lun 0

ada3: <WDC WD5003ABYX-18WERA0 01.01S03> ATA8-ACS SATA 2.x device

ada3: Serial Number WD-WMAYP6365619

ada3: 300.000MB/s transfers (SATA 2.x, UDMA6, PIO 8192bytes)

ada3: Command Queueing enabled

ada3: 476940MB (976773168 512 byte sectors)

[dan@zuul:~] $

This is how I set the labels:

[dan@zuul:~] $ sudo gpart modify -i 3 -l WD-WMAYP6365671 ada2

ada2p3 modified

[dan@zuul:~] $ sudo gpart modify -i 3 -l WD-WMAYP6365619 ada3

ada3p3 modified
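Typing serial numbers by hand invites typos. Here is a rough sketch of extracting the serial from the dmesg.boot lines instead. The function name serial_of is hypothetical, and it assumes the "adaN: Serial Number XXXX" line format shown above, with no spaces inside the serial number.

```shell
# serial_of DEVICE
# Read dmesg.boot text on stdin and print the serial number reported
# for DEVICE (for example ada2).
serial_of() {
    awk -v dev="$1:" '$1 == dev && $2 == "Serial" && $3 == "Number" { print $4 }'
}

# Typical use on the live system (not run here):
#   sudo gpart modify -i 3 -l "$(serial_of ada2 < /var/run/dmesg.boot)" ada2
```

I would still eyeball the value before passing it to gpart modify, because an empty label is not what you want.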

Notice the labels do not show up when you do this:

[dan@zuul:~] $ gpart show ada2 ada3

=> 40 976773088 ada2 GPT (466G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16777216 2 freebsd-swap (8.0G)

16779264 954204160 3 freebsd-zfs (455G)

970983424 5789704 - free - (2.8G)

=> 40 976773088 ada3 GPT (466G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16777216 2 freebsd-swap (8.0G)

16779264 954204160 3 freebsd-zfs (455G)

970983424 5789704 - free - (2.8G)

But they appear when you use -l:

[dan@zuul:~] $ gpart show -l ada2 ada3

=> 40 976773088 ada2 GPT (466G)

40 1024 1 (null) (512K)

1064 984 - free - (492K)

2048 16777216 2 (null) (8.0G)

16779264 954204160 3 WD-WMAYP6365671 (455G)

970983424 5789704 - free - (2.8G)

=> 40 976773088 ada3 GPT (466G)

40 1024 1 (null) (512K)

1064 984 - free - (492K)

2048 16777216 2 (null) (8.0G)

16779264 954204160 3 WD-WMAYP6365619 (455G)

970983424 5789704 - free - (2.8G)

[dan@zuul:~] $

The replace

I went back to my earlier post, "Added new drive when existing one gave errors, then zpool replace", to locate the command I have used in the past.

sudo zpool replace system gpt/disk0 gpt/WD-WMAYP6365671

This can take 5-10 seconds to come back to the command line. That is not unusual.

The following may help you follow along:

- gpt/disk0 – That is the drive from zpool status which I am replacing now

- gpt/WD-WMAYP6365671 – the new drive which will replace the above mentioned drive
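Before running the replace, it can be reassuring to confirm that the new label actually exists as a device node. On FreeBSD, GPT labels show up under /dev/gpt/. The sketch below is an optional pre-check, not part of what I ran. The function name and the DEV_ROOT variable are hypothetical, and DEV_ROOT exists only so the check can be tried against a scratch directory.

```shell
# label_exists LABEL
# Succeed if LABEL (for example gpt/WD-WMAYP6365671) exists under the
# device directory. DEV_ROOT is a made-up override; it defaults to /dev.
label_exists() {
    [ -e "${DEV_ROOT:-/dev}/$1" ]
}

# Typical use on the live system (not run here):
#   label_exists gpt/WD-WMAYP6365671 &&
#       sudo zpool replace system gpt/disk0 gpt/WD-WMAYP6365671
```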

When the command does complete, you will see this:

[dan@zuul:~] $ sudo zpool replace system gpt/disk0 gpt/WD-WMAYP6365671

Make sure to wait until resilver is done before rebooting.

If you boot from pool 'system', you may need to update boot code on newly attached disk 'gpt/WD-WMAYP6365671'.

Assuming you use GPT partitioning and 'da0' is your new boot disk you may use the following command:

gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 da0

[dan@zuul:~] $

Read that carefully. It is important.

This system does boot from these drives. Therefore, I must do that bootcode command.

I ran it on both new drives so I don’t have to remember to do it later for the other drive. Repeating the process does no harm. I want bootcode on both drives so the system can boot from either one (should the other be dead).

[dan@zuul:~] $ sudo gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 ada2

partcode written to ada2p1

bootcode written to ada2

[dan@zuul:~] $ sudo gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 ada3

partcode written to ada3p1

bootcode written to ada3

[dan@zuul:~] $
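With more than two drives, I would generate the bootcode commands rather than type them. This sketch only prints the commands so they can be reviewed first. The function name is hypothetical, and the -i 1 assumes the freebsd-boot partition is index 1 on every drive, as it is in the layout copied above.

```shell
# bootcode_cmds DISK...
# Print one gpart bootcode command per disk, using the same arguments
# as the commands above. Nothing is executed.
bootcode_cmds() {
    for disk in "$@"; do
        echo "gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 $disk"
    done
}

# Typical use on the live system (not run here):
#   bootcode_cmds ada2 ada3            # review the output
#   bootcode_cmds ada2 ada3 | sudo sh  # then run it
```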

zpool status during the replace

During the replace, here is a zpool status done immediately after the replace command:

[dan@zuul:~] $ zpool status

pool: system

state: ONLINE

status: One or more devices is currently being resilvered. The pool will continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Sun Jul 21 18:43:32 2019

356M scanned at 23.8M/s, 2.81M issued at 192K/s, 311G total

2.05M resilvered, 0.00% done, no estimated completion time

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

replacing-0 ONLINE 0 0 0

gpt/disk0 ONLINE 0 0 0

gpt/WD-WMAYP6365671 ONLINE 0 0 0

gpt/disk1 ONLINE 0 0 0

errors: No known data errors
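If you would rather not keep checking by hand, the scan line can be polled. This is a minimal sketch, not what I actually did (I just waited). The function name is hypothetical, and it depends on the "resilver in progress" wording in the scan line shown above.

```shell
# resilver_in_progress
# Read `zpool status` output on stdin. Exit 0 while a resilver is
# running, non-zero once the scan line no longer says so.
resilver_in_progress() {
    grep -q 'resilver in progress'
}

# Typical use on the live system (not run here):
#   while zpool status system | resilver_in_progress; do
#       sleep 60
#   done
#   echo "resilver finished"
```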

Then I waited

While waiting, I noticed this item in /var/log/messages:

Jul 21 18:43:31 zuul ZFS[23955]: vdev state changed, pool_guid=$7534272725841389583 vdev_guid=$9760295329350970791

This is expected when doing a zpool replace.

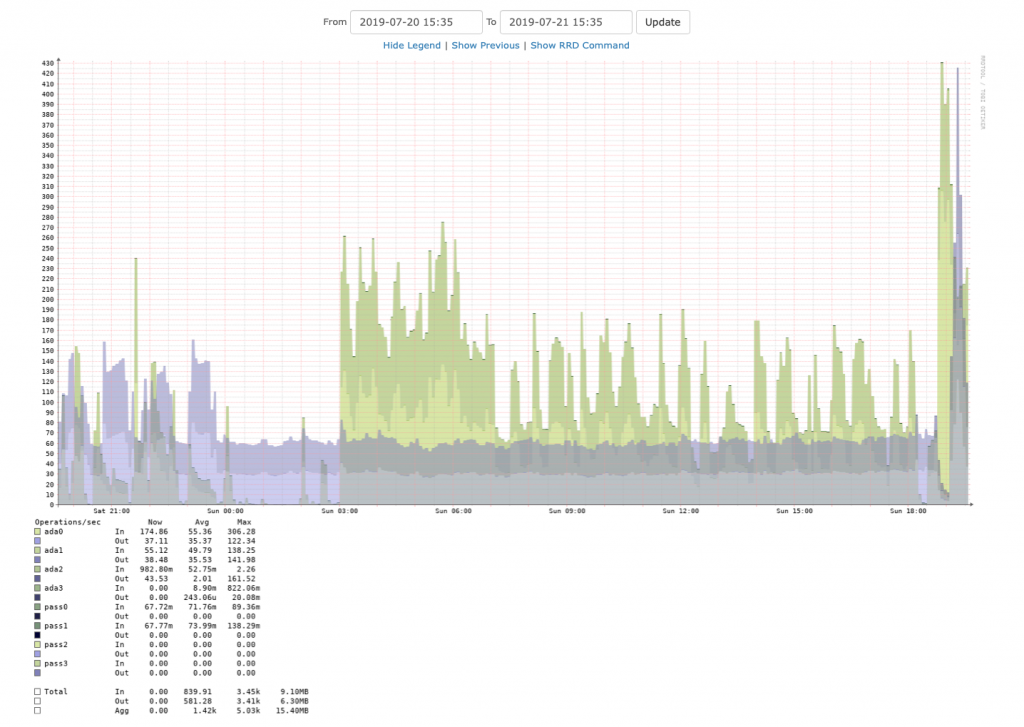

Here is the disk activity:

First drive done

The next morning, I saw this:

[dan@zuul:~] $ zpool status

pool: system

state: ONLINE

scan: resilvered 279G in 0 days 05:01:03 with 0 errors on Sun Jul 21 23:44:35 2019

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/WD-WMAYP6365671 ONLINE 0 0 0

gpt/disk1 ONLINE 0 0 0

errors: No known data errors

[dan@zuul:~] $

Five hours for 280G, that seems OK, especially so given the system was in use at the time. In fact, it’s the system saving this blog post.

As seen above, gpt/disk0 is no longer in the pool and it has been replaced by gpt/WD-WMAYP6365671, exactly as requested.
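The same check can be scripted by listing the gpt/ devices that zpool status reports. This is a sketch with a made-up function name, and it assumes every pool member is referenced by a gpt/ label, as they are in this pool.

```shell
# pool_gpt_members
# Read `zpool status` output on stdin and print each device name
# that starts with gpt/, one per line.
pool_gpt_members() {
    awk '$1 ~ /^gpt\// { print $1 }'
}

# Typical use on the live system (not run here):
#   zpool status system | pool_gpt_members
#   zpool status system | pool_gpt_members | grep -qx gpt/disk0 ||
#       echo "gpt/disk0 has left the pool"
```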

Next: the other drive.

Notice the additional gpart entry

I noticed this:

[dan@zuul:~] $ gpart show -l

=> 34 976773101 ada0 GPT (466G)

34 6 - free - (3.0K)

40 1024 1 bootcode0 (512K)

1064 984 - free - (492K)

2048 16777216 2 swapada0 (8.0G)

16779264 954204160 3 disk0 (455G)

970983424 5789711 - free - (2.8G)

=> 34 976773101 ada1 GPT (466G)

34 6 - free - (3.0K)

40 1024 1 bootcode1 (512K)

1064 984 - free - (492K)

2048 16777216 2 swapada1 (8.0G)

16779264 954204160 3 disk1 (455G)

970983424 5789711 - free - (2.8G)

=> 40 976773088 ada2 GPT (466G)

40 1024 1 (null) (512K)

1064 984 - free - (492K)

2048 16777216 2 (null) (8.0G)

16779264 954204160 3 WD-WMAYP6365671 (455G)

970983424 5789704 - free - (2.8G)

=> 40 976773088 ada3 GPT (466G)

40 1024 1 (null) (512K)

1064 984 - free - (492K)

2048 16777216 2 (null) (8.0G)

16779264 954204160 3 WD-WMAYP6365619 (455G)

970983424 5789704 - free - (2.8G)

=> 40 976773088 diskid/DISK-WD-WMAYP6365619 GPT (466G)

40 1024 1 (null) (512K)

1064 984 - free - (492K)

2048 16777216 2 (null) (8.0G)

16779264 954204160 3 WD-WMAYP6365619 (455G)

970983424 5789704 - free - (2.8G)

Notice how WD-WMAYP6365619 appears both as ada3 and as diskid/DISK-WD-WMAYP6365619. I am not sure why.

EDIT: 2019-09-09 – Wallace Barrow figures gpart is also creating a label. If you do a glabel status after running gpart, you will see it in the output.

Let’s see what happens to it after we add it into the zpool.

Replacing gpt/disk1

Both drives have been partitioned via gpart.

Both drives have been bootcode‘d.

I will copy, paste, and update the command I ran for the first drive:

$ time sudo zpool replace system gpt/disk1 gpt/WD-WMAYP6365619

Make sure to wait until resilver is done before rebooting.

If you boot from pool 'system', you may need to update boot code on newly attached disk 'gpt/WD-WMAYP6365619'.

Assuming you use GPT partitioning and 'da0' is your new boot disk you may use the following command:

gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 da0

real 0m15.565s

user 0m0.006s

sys 0m0.006s

$

This took 15 seconds to come back to the command line. That is not unusual.

The following may help you follow along:

- gpt/disk1 – That is the drive from zpool status which I am replacing now

- gpt/WD-WMAYP6365619 – the new drive which will replace the above mentioned drive

Let’s look at gpart again:

[dan@zuul:~] $ gpart show -l

=> 34 976773101 ada0 GPT (466G)

34 6 - free - (3.0K)

40 1024 1 bootcode0 (512K)

1064 984 - free - (492K)

2048 16777216 2 swapada0 (8.0G)

16779264 954204160 3 disk0 (455G)

970983424 5789711 - free - (2.8G)

=> 34 976773101 ada1 GPT (466G)

34 6 - free - (3.0K)

40 1024 1 bootcode1 (512K)

1064 984 - free - (492K)

2048 16777216 2 swapada1 (8.0G)

16779264 954204160 3 disk1 (455G)

970983424 5789711 - free - (2.8G)

=> 40 976773088 ada2 GPT (466G)

40 1024 1 (null) (512K)

1064 984 - free - (492K)

2048 16777216 2 (null) (8.0G)

16779264 954204160 3 WD-WMAYP6365671 (455G)

970983424 5789704 - free - (2.8G)

=> 40 976773088 ada3 GPT (466G)

40 1024 1 (null) (512K)

1064 984 - free - (492K)

2048 16777216 2 (null) (8.0G)

16779264 954204160 3 WD-WMAYP6365619 (455G)

970983424 5789704 - free - (2.8G)

[dan@zuul:~] $

Notice how diskid/DISK-WD-WMAYP6365619 is no longer present.

Here is the zpool status output:

[dan@zuul:~] $ zpool status

pool: system

state: ONLINE

status: One or more devices is currently being resilvered. The pool will continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Mon Jul 22 11:56:51 2019

104G scanned at 1.11G/s, 98.7M issued at 1.06M/s, 311G total

96.8M resilvered, 0.03% done, no estimated completion time

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/WD-WMAYP6365671 ONLINE 0 0 0

replacing-1 ONLINE 0 0 0

gpt/disk1 ONLINE 0 0 0

gpt/WD-WMAYP6365619 ONLINE 0 0 0

errors: No known data errors

[dan@zuul:~] $

Finally, the entry from /var/log/messages:

Jul 22 11:56:50 zuul ZFS[24551]: vdev state changed, pool_guid=$7534272725841389583 vdev_guid=$17104486245226420452

Now, I wait again. The system will be usable during this time. I’ll wait about 30 minutes, then take a screenshot of the disk IO graph again.

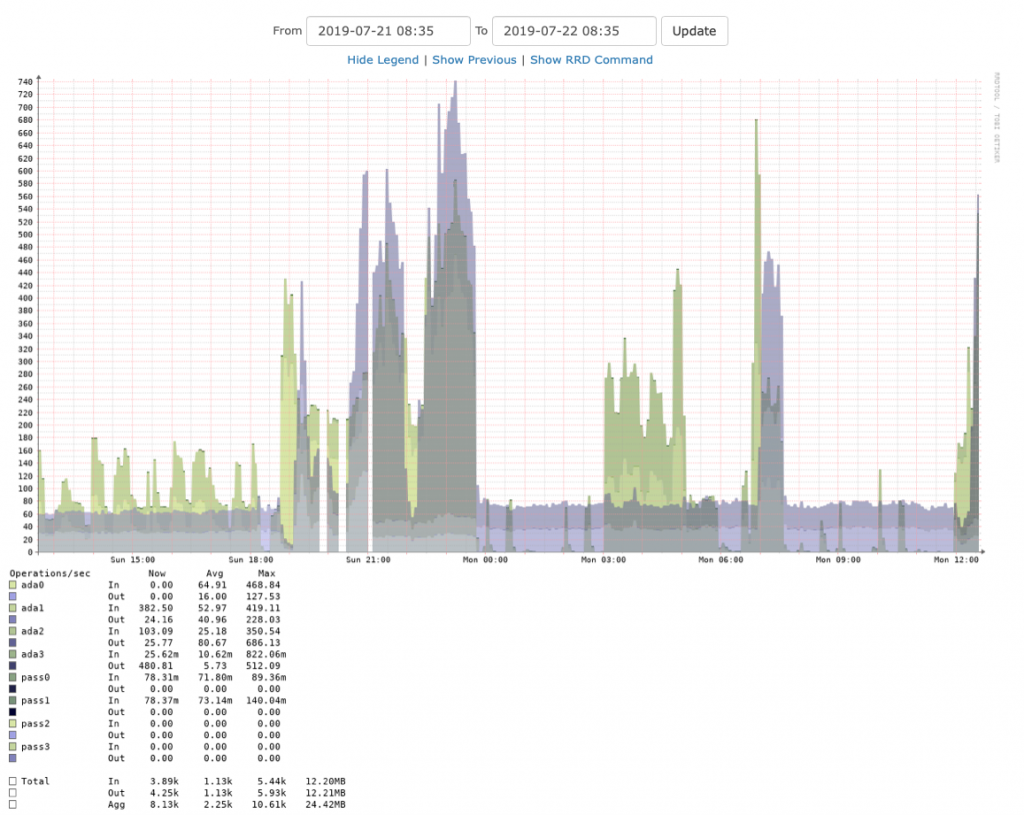

More graphs

While looking for the disk IO graph, I noticed this graph for ada0, the drive I replaced yesterday. There has been no activity since the drive left the zpool.

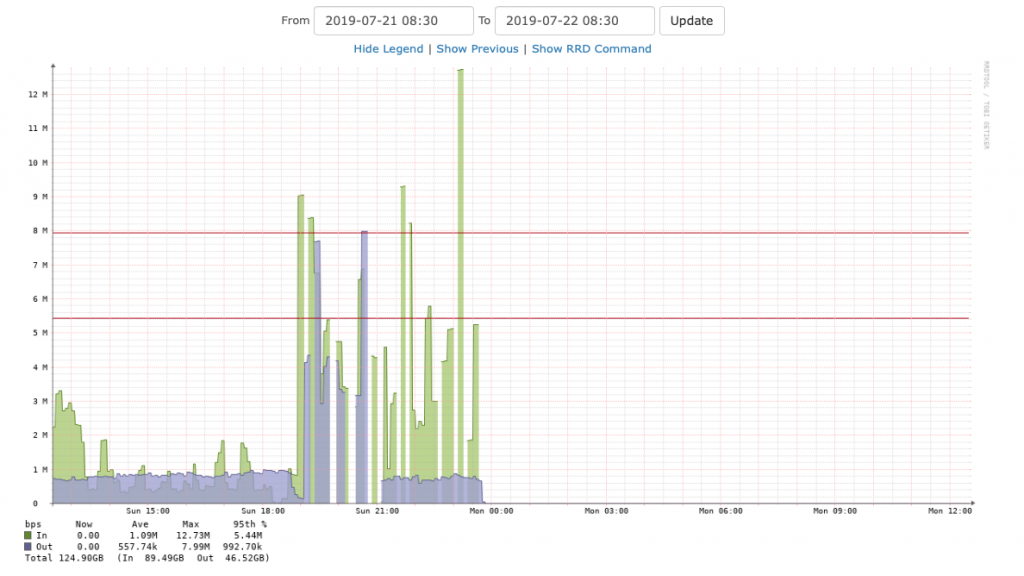

The following graph is overall disk IO (i.e. all drives). It also shows the spike since the replace command was issued.

The wait is over!

That took much less time.

[dan@zuul:~] $ zpool status

pool: system

state: ONLINE

scan: resilvered 311G in 0 days 02:56:13 with 0 errors on Mon Jul 22 14:53:04 2019

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/WD-WMAYP6365671 ONLINE 0 0 0

gpt/WD-WMAYP6365619 ONLINE 0 0 0

errors: No known data errors

[dan@zuul:~] $

Compare 3 hours against 5 hours for the previous drive.
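To put numbers on that, the two scan lines can be turned into an average rate. This is a back-of-the-envelope sketch: the function name is hypothetical, and it treats the G that zpool prints as GiB and ignores whatever else the system was doing at the time.

```shell
# resilver_rate GIB HOURS MINUTES SECONDS
# Print the average resilver rate in MiB/s, to one decimal place.
resilver_rate() {
    awk -v g="$1" -v h="$2" -v m="$3" -v s="$4" \
        'BEGIN { printf "%.1f\n", g * 1024 / (h * 3600 + m * 60 + s) }'
}

# First drive:  279G in 05:01:03
#   resilver_rate 279 5 1 3
# Second drive: 311G in 02:56:13
#   resilver_rate 311 2 56 13
```

That comes out at roughly 15.8 MiB/s for the first replacement and 30.1 MiB/s for the second, so the second resilver ran at about twice the rate.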

Let’s just assume this represents the difference in drive performance.

The new drives are Western Digital RE4. The old drives are Western Digital Blue. Please leave your analysis in the comments. Thank you.