In this post, the server is running FreeBSD 10.3.

I am completely and unreasonably biased: ZFS is the best filesystem out there.

Do not take my word for it. Ask around.

Today, I started a process I’ve been waiting to do for a while. I am replacing the 3TB drives in a 10-drive raidz2 array with 5TB drives. These new drives are faster, I think perhaps cooler, and, more to the point, bigger.

Drives can be replaced, one at a time, until they are all 5TB drives. Then, if you’ve set zpool set autoexpand=on, your zpool size will magically expand.

If you have spare slots, or in my case, free space inside the case, you can add a new drive, then issue the replace command. Why do it that way? Read this from the zpool Administration section of the FreeBSD Handbook.

There are a number of situations where it may be desirable to replace one disk with a different disk. When replacing a working disk, the process keeps the old disk online during the replacement. The pool never enters a degraded state, reducing the risk of data loss. zpool replace copies all of the data from the old disk to the new one. After the operation completes, the old disk is disconnected from the vdev.

This is far superior to what I did for my last zpool upgrade. I pulled a drive, plugged a new one in. Not ideal.

Let us begin.

The drives

I do not recommend this approach. I merely present it for your education as to how not to do things.

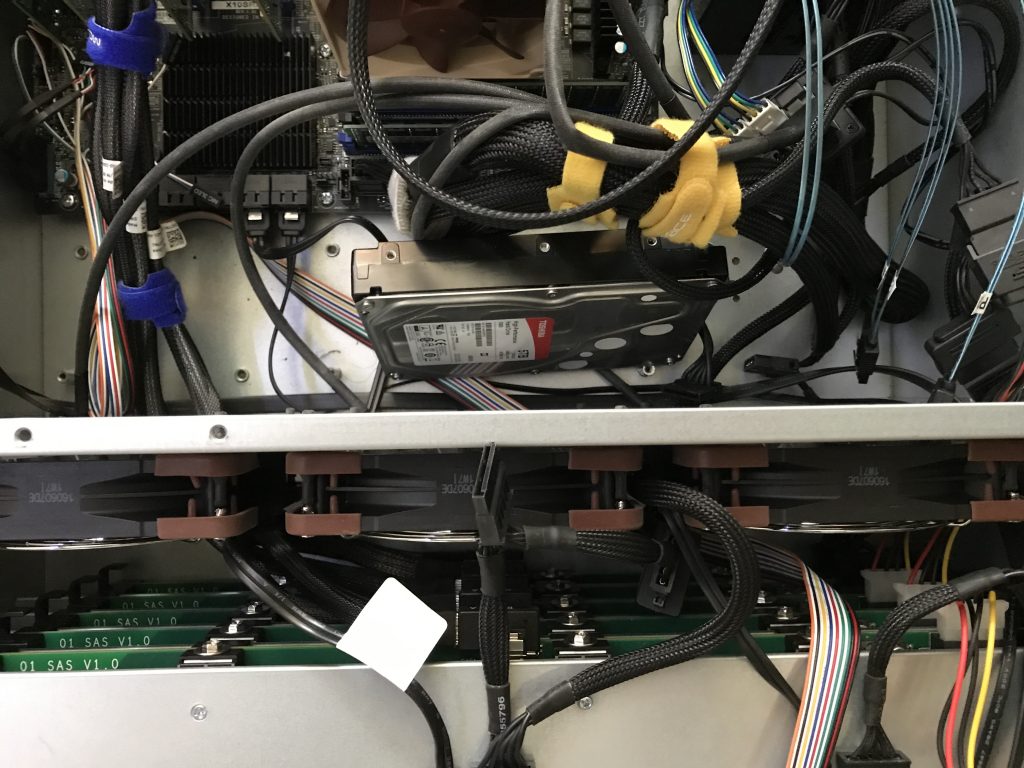

In the image below, you see knew, my main storage server. It is a 5U case with 20 hot-swap drive bays, all full.

The cards, from top right, are:

- ix0 – Intel(R) PRO/10GbE NIC

- mps0 – Avago Technologies (LSI) SAS2008

- mps1 – Avago Technologies (LSI) SAS2008

- mps2 – Avago Technologies (LSI) SAS2008

Your keen eye will note that mps2 has an empty SFF-8087 connector. I connected a cable to it, attached it to the disk, and found a spare power connector.

In this photo, you can see how securely I have attached this drive. I racked the server and powered it up.

After power up

After powering up the server, I saw this information.

$ sysctl kern.disks

kern.disks: da20 da19 da18 da17 da16 da15 da14 da13 da12 da11 da10 da9 da8 da7 da6 da5 da4 da3 da2 da1 da0 ada1 ada0
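If you are not sure which name the new drive received, one option is to compare the disk list from before and after adding it. This is a minimal sketch, not what I actually ran; new_disks is a hypothetical helper and it assumes you saved the earlier output of sysctl -n kern.disks somewhere.

```sh
# Print the names that appear in the second list but not in the first.
# Both arguments are space-separated device lists, such as the output of
# `sysctl -n kern.disks` captured before and after adding a drive.
new_disks() {
    before=" $1 "
    for disk in $2; do
        case "$before" in
            *" $disk "*) ;;                  # already present earlier
            *) printf '%s\n' "$disk" ;;      # newly appeared
        esac
    done
}
```

For example, new_disks "$old_list" "$(sysctl -n kern.disks)" would print only the devices that showed up since old_list was captured.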

The details of the new drive:

da20 at mps2 bus 0 scbus2 target 13 lun 0

da20:Fixed Direct Access SPC-4 SCSI device

da20: Serial Number X643KHBFF57D

da20: 600.000MB/s transfers

da20: Command Queueing enabled

da20: 4769307MB (9767541168 512 byte sectors)

That’s the drive I need to format.

Here I go:

sudo gpart create -s gpt da20

sudo gpart add -s 512K -t freebsd-boot -a 1M da20

sudo gpart add -s 4G -t freebsd-swap -a 1M da20

sudo gpart add -s 4653G -t freebsd-zfs -a 1M da20

gpart show da0 da20

Now it is ready for ZFS to use.
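As a sanity check on those sizes, the arithmetic can be worked through in the shell. This is only a sketch: disk_mib and leftover_mib are hypothetical helpers, and the 2 MiB allowance for the boot partition plus 1M alignment padding is my simplifying assumption, not something gpart reports.

```sh
# Whole MiB available on a disk, given its sector count and sector size.
disk_mib() {
    echo $(( $1 * $2 / 1048576 ))
}

# MiB left unallocated after the swap and ZFS partitions.
# $1 = sector count (512-byte sectors), $2 = swap size in GiB,
# $3 = ZFS partition size in GiB.
# Assumption: the 512K boot partition and alignment padding cost 2 MiB.
leftover_mib() {
    total=$(disk_mib "$1" 512)
    swap_mib=$(( $2 * 1024 ))
    zfs_mib=$(( $3 * 1024 ))
    echo $(( total - 2 - swap_mib - zfs_mib ))
}
```

With the numbers from this drive, disk_mib 9767541168 512 gives 4769307, matching the size the kernel reported, and leftover_mib 9767541168 4 4653 gives 537, so the layout fits with a little room to spare.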

What does the pool look like?

This is the pool in question.

$ zpool list system

NAME SIZE ALLOC FREE EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

system 27T 17.6T 9.40T - 12% 65% 1.00x ONLINE -

Here are some details. It is a 10x 3TB raidz2 array.

$ zpool status system

pool: system

state: ONLINE

scan: scrub repaired 0 in 21h33m with 0 errors on Thu Aug 10 00:42:38 2017

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

da2p3 ONLINE 0 0 0

da7p3 ONLINE 0 0 0

da1p3 ONLINE 0 0 0

da3p3 ONLINE 0 0 0

da0p3 ONLINE 0 0 0

da9p3 ONLINE 0 0 0

da4p3 ONLINE 0 0 0

da6p3 ONLINE 0 0 0

da10p3 ONLINE 0 0 0

da5p3 ONLINE 0 0 0

logs

mirror-1 ONLINE 0 0 0

ada1p1 ONLINE 0 0 0

ada0p1 ONLINE 0 0 0

errors: No known data errors

I’m going to work on replacing that da2 device first.
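With ten drives to get through, it can help to pull out just the raidz members from that output. The following is a rough sketch that assumes the usual zpool status layout (a raidz line, then its devices, then sections such as logs); raidz_members is a hypothetical helper, not a zpool subcommand.

```sh
# Read `zpool status` text on stdin and print the leaf devices that
# sit under a raidz vdev, one per line.
raidz_members() {
    awk '
        $1 ~ /^raidz/ { in_vdev = 1; next }          # start of a raidz vdev
        $1 == "logs" || $1 == "cache" || $1 == "spares" || $1 ~ /^errors/ {
            in_vdev = 0                              # left the raidz section
        }
        in_vdev && NF >= 5 && $1 !~ /^(replacing|spare)-/ { print $1 }
    '
}
```

It would be used as zpool status system | raidz_members. While a replacement is running, both the old and the new device appear in the list.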

Prepare the pool

I set this on, so that once the drives are all replaced, the size will automatically expand.

[dan@knew:~] $ sudo zpool set autoexpand=on system

[dan@knew:~] $
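To get a feel for what the expansion should yield, here is a back-of-the-envelope estimate. It is a sketch under assumptions: raidz_capacity_tib is a hypothetical helper, the usable figure ignores raidz padding and metadata overhead, and I am assuming zpool list reports raw capacity for raidz, which the 27T shown for ten 3TB drives suggests.

```sh
# Raw and approximate usable capacity in TiB for one raidz vdev.
# $1 = number of drives, $2 = parity drives (2 for raidz2),
# $3 = size of each ZFS partition in GiB.
raidz_capacity_tib() {
    awk -v n="$1" -v p="$2" -v gib="$3" 'BEGIN {
        printf "raw=%.1fT usable~%.1fT\n", n * gib / 1024, (n - p) * gib / 1024
    }'
}
```

For ten 4653G partitions in raidz2, raidz_capacity_tib 10 2 4653 prints raw=45.4T usable~36.4T. You can confirm the property itself with zpool get autoexpand system.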

Replacing the device

The first device listed is already in the pool. The second device is the new device.

$ sudo zpool replace system da2p3 da20p3

Make sure to wait until resilver is done before rebooting.

If you boot from pool 'system', you may need to update boot code on newly attached disk 'da20p3'.

Assuming you use GPT partitioning and 'da0' is your new boot disk you may use the following command:

gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 da0

Remember to do that last part from above, substituting the new disk (da20 here) for the da0 in the example. It will ensure you can boot.

[dan@knew:~] $ sudo gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 da20

bootcode written to da20
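Because the hint printed by zpool replace names da0 rather than the disk you just added, it is easy to paste the wrong device. One way to avoid that, sketched here with two hypothetical helpers (disk_of and bootcode_cmd), is to derive the disk from the partition you passed to zpool replace and print the command for review before running it.

```sh
# Strip a trailing GPT partition suffix: da20p3 -> da20, ada0p1 -> ada0.
disk_of() {
    printf '%s\n' "${1%p[0-9]*}"
}

# Print (not run) the bootcode command for the disk holding a partition.
# The gpart arguments are the ones shown in the zpool replace message.
bootcode_cmd() {
    printf 'gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 %s\n' "$(disk_of "$1")"
}
```

Running bootcode_cmd da20p3 prints the same command I used above, ending in da20. The helper only prints; it is up to you to check the output and run it with sudo.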

The resilvering

After issuing the above commands, the status looks like this:

$ zpool status system

pool: system

state: ONLINE

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Wed Aug 16 21:51:39 2017

70.7M scanned out of 17.6T at 14.1M/s, 362h19m to go

5.11M resilvered, 0.00% done

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

replacing-0 ONLINE 0 0 0

da2p3 ONLINE 0 0 0

da20p3 ONLINE 0 0 0 (resilvering)

da7p3 ONLINE 0 0 0

da1p3 ONLINE 0 0 0

da3p3 ONLINE 0 0 0

da0p3 ONLINE 0 0 0

da9p3 ONLINE 0 0 0

da4p3 ONLINE 0 0 0

da6p3 ONLINE 0 0 0

da10p3 ONLINE 0 0 0

da5p3 ONLINE 0 0 0

logs

mirror-1 ONLINE 0 0 0

ada1p1 ONLINE 0 0 0

ada0p1 ONLINE 0 0 0

errors: No known data errors

Note that it is not DEGRADED. SCORE!

To give you an idea of progress, by the time I had finished writing the above, it looked like this:

[dan@knew:~] $ zpool status system

pool: system

state: ONLINE

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Wed Aug 16 21:51:39 2017

306G scanned out of 17.6T at 162M/s, 31h6m to go

29.4G resilvered, 1.70% done

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

replacing-0 ONLINE 0 0 0

da2p3 ONLINE 0 0 0

da20p3 ONLINE 0 0 0 (resilvering)

da7p3 ONLINE 0 0 0

da1p3 ONLINE 0 0 0

da3p3 ONLINE 0 0 0

da0p3 ONLINE 0 0 0

da9p3 ONLINE 0 0 0

da4p3 ONLINE 0 0 0

da6p3 ONLINE 0 0 0

da10p3 ONLINE 0 0 0

da5p3 ONLINE 0 0 0

logs

mirror-1 ONLINE 0 0 0

ada1p1 ONLINE 0 0 0

ada0p1 ONLINE 0 0 0

errors: No known data errors

[dan@knew:~] $
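The time-to-go figure is roughly the remaining data divided by the current scan rate. The sketch below reproduces that arithmetic; resilver_eta is a hypothetical helper, it assumes the T, G, and M suffixes are binary units, and it works from the rounded values zpool displays, so it will not always match the reported estimate to the minute.

```sh
# Estimate the remaining resilver time.
# $1 = total to scan in TiB, $2 = scanned so far in GiB,
# $3 = current scan rate in MiB/s.
resilver_eta() {
    awk -v total_tib="$1" -v scanned_gib="$2" -v rate="$3" 'BEGIN {
        remaining_mib = total_tib * 1024 * 1024 - scanned_gib * 1024
        secs = remaining_mib / rate
        hours = int(secs / 3600)
        mins = int((secs - hours * 3600) / 60)
        printf "%dh%dm\n", hours, mins
    }'
}
```

Plugging in the second status output, resilver_eta 17.6 306 162 prints 31h6m, which agrees with what zpool reported. The early 362h19m estimate was based on a 14.1M/s rate measured in the first few seconds, so it was never going to hold.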

Stay tuned for more updates. I have 9 more drives to replace.

Addenda – 2017.08.17

This morning, I noticed these entries in /var/log/messages:

Aug 16 21:51:38 knew devd: Executing 'logger -p kern.notice -t ZFS 'vdev state changed, pool_guid=15378250086669402288 vdev_guid=15077920823230281604''

Aug 16 21:51:38 knew ZFS: vdev state changed, pool_guid=15378250086669402288 vdev_guid=15077920823230281604

Looking in /var/log/auth.log, I found this entry:

Aug 16 21:51:38 knew sudo: dan : TTY=pts/0 ; PWD=/usr/home/dan ; USER=root ; COMMAND=/sbin/zpool replace system da2p3 da20p3

Note the times. The zpool replace command and the vdev state change are related.

Side note on logging and backups

For what it’s worth, the above review of log entries led to another blog post.

Addenda – 2017.08.17 #2

The resilvering completed successfully. da2 is no longer part of the array, but da20 is.

[dan@knew:~] $ zpool status system

pool: system

state: ONLINE

scan: resilvered 1.68T in 19h32m with 0 errors on Thu Aug 17 17:23:50 2017

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

da20p3 ONLINE 0 0 0

da7p3 ONLINE 0 0 0

da1p3 ONLINE 0 0 0

da3p3 ONLINE 0 0 0

da0p3 ONLINE 0 0 0

da9p3 ONLINE 0 0 0

da4p3 ONLINE 0 0 0

da6p3 ONLINE 0 0 0

da10p3 ONLINE 0 0 0

da5p3 ONLINE 0 0 0

logs

mirror-1 ONLINE 0 0 0

ada1p1 ONLINE 0 0 0

ada0p1 ONLINE 0 0 0

errors: No known data errors

[dan@knew:~] $

1 more hour to go for the last 5TB drive.