In this post I will replace a working, but suspect, drive with another drive, with no downtime. The server is named knew.

In this post:

- FreeBSD 12.2

- ZFS

- TOSHIBA MD04ACA500 5TB drive – the suspect drive: da17

- TOSHIBA HDWE150 5TB drive – the replacement: da22

- None of these drives are under warranty

What drives are in this server?

I have had good luck with Toshiba DT01ACA300 3TB drives (presenting as Hitachi HDS723030BLE640), starting a few years back, and, more recently, with Toshiba 5TB drives (Toshiba X300, presenting as TOSHIBA HDWE150 or TOSHIBA MD04ACA5 FP2A). Both have been great drives for me.

Why am I replacing this drive?

Deciding when to replace a drive seems to be an art, not a science. Opinions vary.

I find the same when asking for drive recommendations. Name a brand and someone will report a terrible experience with it.

In my case, I’m seeing these entries in /var/log/messages:

Dec 12 09:23:03 knew smartd[2124]: Device: /dev/da17 [SAT], 40 Currently unreadable (pending) sectors

Dec 12 09:53:04 knew syslogd: last message repeated 1 times

Dec 12 10:23:03 knew syslogd: last message repeated 1 times

Dec 12 10:53:04 knew syslogd: last message repeated 1 times

Dec 12 11:23:03 knew smartd[2124]: Device: /dev/da17 [SAT], 40 Currently unreadable (pending) sectors

Dec 12 11:53:05 knew syslogd: last message repeated 1 times

Dec 12 12:23:04 knew syslogd: last message repeated 1 times

Dec 12 12:53:03 knew syslogd: last message repeated 1 times

Dec 12 13:23:03 knew smartd[2124]: Device: /dev/da17 [SAT], 40 Currently unreadable (pending) sectors

Dec 12 13:53:04 knew syslogd: last message repeated 1 times

The full smartctl output for this drive is in this recent blog post.

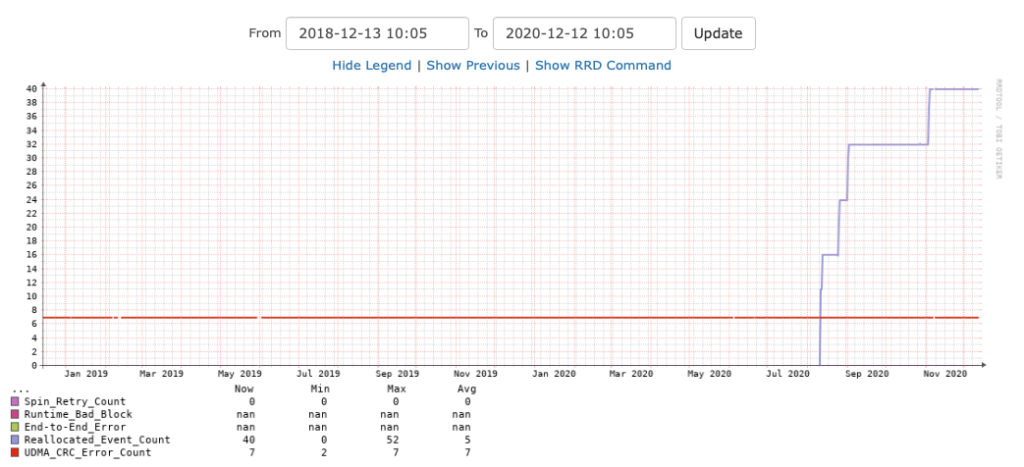

Here is a graph of the Reallocated_Event_Count going up since August. It was zero before that.

This is the only drive in the system with a non-zero value for Current_Pending_Sector.
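A check like that can be scripted. The following is a minimal sketch, assuming smartmontools is installed, that `smartctl -A` prints its usual ten-column attribute table with the raw value in the last column, and that every disk listed in `kern.disks` answers smartctl without extra `-d` options. The function names are made up for illustration.

```shell
# Sketch only: pending_sectors and check_all_drives are hypothetical names.

# Read `smartctl -A` output on stdin and print the raw value of
# Current_Pending_Sector (column 10 of the attribute table).
pending_sectors() {
    awk '$2 == "Current_Pending_Sector" { print $10 }'
}

# Walk every disk FreeBSD knows about and report non-zero counts.
# Disks that return no such attribute are silently skipped.
check_all_drives() {
    for disk in $(sysctl -n kern.disks); do
        count=$(smartctl -A "/dev/${disk}" | pending_sectors)
        if [ -n "$count" ] && [ "$count" -ne 0 ]; then
            echo "${disk}: ${count} pending sectors"
        fi
    done
}
```

Running `check_all_drives` as root should then list only the drives worth worrying about.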

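Current_Pending_Sector and Reallocated_Event_Count are related, but I don't think they are the same thing: the first counts sectors waiting to be remapped after a failed read, while the second counts the remap operations the drive has attempted.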

Both old and new drives are in the system

It is vital to note that both drives are in the system. The zpool is not degraded.

This is important. The failing drive *COULD* be pulled from the system and the replacement drive installed in the same drive bay and resilvered there. This is not optimal: as soon as the old drive is removed, the zpool is degraded.

Keeping both old and new drives in the system means that the zpool does not degrade during the replacement process.

Maintaining vdev integrity, and therefore zpool integrity, is important.

This zpool consists of two raidz2 vdevs, each containing 10 5TB drives.

[dan@knew:~] $ zpool status system

pool: system

state: ONLINE

status: Some supported features are not enabled on the pool. The pool can still be used, but some features are unavailable.

action: Enable all features using 'zpool upgrade'. Once this is done, the pool may no longer be accessible by software that does not support the features. See zpool-features(7) for details.

scan: scrub repaired 2.27M in 0 days 16:45:33 with 0 errors on Tue Dec 8 21:40:28 2020

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

da3p3 ONLINE 0 0 0

da10p3 ONLINE 0 0 0

da9p3 ONLINE 0 0 0

da2p3 ONLINE 0 0 0

da13p3 ONLINE 0 0 0

da15p3 ONLINE 0 0 0

da11p3 ONLINE 0 0 0

da14p3 ONLINE 0 0 0

da8p3 ONLINE 0 0 0

da7p3 ONLINE 0 0 0

raidz2-1 ONLINE 0 0 0

da5p1 ONLINE 0 0 0

da6p1 ONLINE 0 0 0

da19p1 ONLINE 0 0 0

da12p1 ONLINE 0 0 0

da4p1 ONLINE 0 0 0

da1p1 ONLINE 0 0 0

da17p1 ONLINE 0 0 0

da16p1 ONLINE 0 0 0

da0p1 ONLINE 0 0 0

da18p1 ONLINE 0 0 0

errors: No known data errors

[dan@knew:~] $

The "Some supported features are not enabled on the pool" notice is related to a recent upgrade of this host from FreeBSD 12.1 to FreeBSD 12.2. The new ZFS features are not yet enabled.
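Since the whole point of this approach is to start from a pool that is not degraded, that state can be checked before doing anything else. This is a minimal sketch, assuming `zpool status` prints a `state:` line as in the output above. The helper names are hypothetical.

```shell
# Sketch only: pool_state and require_online are hypothetical names.

# Read `zpool status <pool>` output on stdin and print the value of the
# first "state:" line, e.g. ONLINE or DEGRADED.
pool_state() {
    awk '$1 == "state:" { print $2; exit }'
}

# Succeed only if the state read from stdin is ONLINE.
require_online() {
    [ "$(pool_state)" = "ONLINE" ]
}

# Example (not run here):
#   zpool status system | require_online && echo "safe to start"
```

Anything other than ONLINE is a reason to stop and look at the pool before starting a replace.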

Preparation of the new drive

I say new drive, but this drive was purchased some time ago and has been sitting in the system and powered on for some time. It has 17742 power on hours, so it’s been sitting there for 2 years, just waiting for this situation.
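The hours-to-years arithmetic is simple enough to check. A minimal sketch, assuming 365-day years and a hypothetical helper name:

```shell
# Sketch only: power_on_years is a hypothetical name.

# Convert SMART power-on hours (first argument) to years, one decimal place.
power_on_years() {
    awk -v h="$1" 'BEGIN { printf "%.1f\n", h / (24 * 365) }'
}

# Example: power_on_years 17742
```

For 17742 hours this works out to just over two years.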

I’m running FreeBSD and it has long been the best practice to use partitioned drives for ZFS under FreeBSD. You might well ask why.

First step, partition the new drive. gpart can do that for you, using the partition table of an existing drive.

[dan@knew:~] $ gpart backup da17 | sudo gpart restore da22

[dan@knew:~] $ gpart show da17 da22

=> 34 9767541101 da17 GPT (4.5T)

34 6 - free - (3.0K)

40 9766000000 1 freebsd-zfs (4.5T)

9766000040 1541095 - free - (752M)

=> 40 9767541088 da22 GPT (4.5T)

40 9766000000 1 freebsd-zfs (4.5T)

9766000040 1541088 - free - (752M)

[dan@knew:~] $
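zpool replace needs the new device to be at least as large as the smallest device in the vdev, so it is worth confirming the copied partition is big enough. Here is a minimal sketch, assuming the `gpart show` column layout seen above (start, size in sectors, index, type). The helper names are hypothetical.

```shell
# Sketch only: zfs_part_sectors and fits are hypothetical names.

# Read `gpart show <disk>` output on stdin and print the size, in sectors,
# of the first freebsd-zfs partition (second column of that row).
zfs_part_sectors() {
    awk '$4 == "freebsd-zfs" { print $2; exit }'
}

# fits OLD_SECTORS NEW_SECTORS: succeed if the new partition is at least
# as large as the old one.
fits() {
    [ "$2" -ge "$1" ]
}

# Example (not run here):
#   old=$(gpart show da17 | zfs_part_sectors)
#   new=$(gpart show da22 | zfs_part_sectors)
#   fits "$old" "$new" && echo "da22p1 is big enough"
```

In the output above both partitions are 9766000000 sectors, so the check would pass.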

One thing I often do is label the drive partition using the serial number of the drive. This can be useful when it comes time to pull a drive from the system, and helps to confirm that you have the correct drive.

[dan@knew:~] $ sudo gpart modify -i 1 -l 48JGK3AYF57D da22

da22p1 modified

[dan@knew:~] $ gpart show -l da22

=> 40 9767541088 da22 GPT (4.5T)

40 9766000000 1 48JGK3AYF57D (4.5T)

9766000040 1541088 - free - (752M)

[dan@knew:~] $
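The serial number does not have to be typed by hand. This is a minimal sketch, assuming smartmontools is installed and that `smartctl -i` reports a `Serial Number:` line, as it does for SATA drives. The helper name is hypothetical.

```shell
# Sketch only: drive_serial is a hypothetical name.

# Read `smartctl -i` output on stdin and print the value of the
# "Serial Number:" line with surrounding whitespace removed.
drive_serial() {
    awk -F: '/^Serial Number:/ { gsub(/^[ \t]+|[ \t]+$/, "", $2); print $2; exit }'
}

# Example (not run here):
#   serial=$(smartctl -i /dev/da22 | drive_serial)
#   gpart modify -i 1 -l "$serial" da22
```

Check the value before using it as a label. An empty string here would mean the drive reports its serial number in a different format.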

That label also appears in this directory:

[dan@knew:~] $ ls -l /dev/gpt/

total 0

crw-r----- 1 root operator 0x951 Dec 12 15:27 48JGK3AYF57D

crw-r----- 1 root operator 0x10a Dec 8 22:21 BTJR5090037J240AGN.boot.internal

crw-r----- 1 root operator 0x165 Dec 8 22:21 BTJR509003N7240AGN.boot.internal

crw-r----- 1 root operator 0x17c Dec 8 22:21 S3Z8NB0KB11776R.Slot.11

crw-r----- 1 root operator 0x17b Dec 8 22:21 S3Z8NB0KB11784L.Slot.05

[dan@knew:~] $

You can see some other labels I have created to indicate the boot drives, which are mounted inside the chassis, as opposed to a drive bay. Also shown are drives from slot 11 and 05. Presumably they are still in those locations.

Just in case this drive has previously been used in a zpool, let’s check for any existing labels (note /dev/da22 also works for this command):

[dan@knew:~] $ sudo zdb -l /dev/gpt/48JGK3AYF57D

------------------------------------

LABEL 0

------------------------------------

failed to unpack label 0

------------------------------------

LABEL 1

------------------------------------

failed to unpack label 1

------------------------------------

LABEL 2

------------------------------------

failed to unpack label 2

------------------------------------

LABEL 3

------------------------------------

failed to unpack label 3

[dan@knew:~] $

If I had found anything other than failed, I would have used zpool labelclear, as I did back in Feb 2016.
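That decision can be scripted as well. A minimal sketch, assuming `zdb -l` prints one `failed to unpack label N` line for each of the four labels when the device is clean, as in the FreeBSD 12.2 output above. The helper name is hypothetical.

```shell
# Sketch only: no_zfs_labels is a hypothetical name.

# Read `zdb -l` output on stdin; succeed only if all four labels
# failed to unpack, meaning no leftover ZFS label was found.
no_zfs_labels() {
    [ "$(grep -c '^failed to unpack label [0-3]$')" -eq 4 ]
}

# Example (not run here):
#   zdb -l /dev/gpt/48JGK3AYF57D | no_zfs_labels \
#       || echo "old label found, consider: zpool labelclear /dev/gpt/48JGK3AYF57D"
```

The example only prints a hint rather than running labelclear, because clearing the label on the wrong device is not something to automate blindly.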

Starting the replace command

This is the command, with OLD drive first, and new drive last.

[dan@knew:~] $ sudo zpool replace system da17p1 da22p1

[dan@knew:~] $

It is normal and expected to see something like this in /var/log/messages after issuing a replace command:

Dec 12 15:35:47 knew ZFS[70753]: vdev state changed, pool_guid=$15378250086669402288 vdev_guid=$18428760864140250121

Now, we let the replacement drive resilver.

[dan@knew:~] $ zpool status system

pool: system

state: ONLINE

status: One or more devices is currently being resilvered. The pool will continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Sat Dec 12 15:35:49 2020

2.70T scanned at 283M/s, 1.33T issued at 1.23G/s, 58.5T total

0 resilvered, 2.27% done, 0 days 13:10:05 to go

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

da3p3 ONLINE 0 0 0

da10p3 ONLINE 0 0 0

da9p3 ONLINE 0 0 0

da2p3 ONLINE 0 0 0

da13p3 ONLINE 0 0 0

da15p3 ONLINE 0 0 0

da11p3 ONLINE 0 0 0

da14p3 ONLINE 0 0 0

da8p3 ONLINE 0 0 0

da7p3 ONLINE 0 0 0

raidz2-1 ONLINE 0 0 0

da5p1 ONLINE 0 0 0

da6p1 ONLINE 0 0 0

da19p1 ONLINE 0 0 0

da12p1 ONLINE 0 0 0

da4p1 ONLINE 0 0 0

da1p1 ONLINE 0 0 0

replacing-6 ONLINE 0 0 0

da17p1 ONLINE 0 0 0

da22p1 ONLINE 0 0 0

da16p1 ONLINE 0 0 0

da0p1 ONLINE 0 0 0

da18p1 ONLINE 0 0 0

errors: No known data errors

[dan@knew:~] $

It claims 15.5 hours. Given it is now Sat Dec 12 15:37:09 UTC 2020, that would be at about 07:20 UTC tomorrow.

I have other things to do. I’ll complete this blog post tomorrow.

EDIT: already, at 15:48:23, we have 03:44:42 to go. Oh, and now it’s back up to 04:56:09, oh and now 05:23:39

I think I’ll stop checking and do some of my other tasks.
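Rather than checking by hand, the progress line can be polled. This is a minimal sketch, assuming the resilver line keeps the "N% done, ... to go" wording shown in the status output above. The helper names and the 300-second default interval are made up for illustration.

```shell
# Sketch only: resilver_progress and watch_resilver are hypothetical names.

# Read `zpool status` output on stdin and print "<percent> <time to go>"
# from the progress line of a resilver. Prints nothing if there is none.
resilver_progress() {
    sed -n 's/.* \([0-9.]*%\) done, \(.*\) to go.*/\1 \2/p'
}

# watch_resilver POOL [INTERVAL]: print a timestamped progress line every
# INTERVAL seconds until zpool status no longer reports one.
watch_resilver() {
    pool=$1
    interval=${2:-300}
    while progress=$(zpool status "$pool" | resilver_progress) && [ -n "$progress" ]; do
        echo "$(date -u '+%H:%M:%S') $progress"
        sleep "$interval"
    done
}

# Example (not run here): watch_resilver system 600
```

Given how much the estimate jumps around early on, the percentage is probably the more trustworthy of the two numbers.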