It is a rainy Wednesday morning and I’m drinking a cappuccino in my favorite cafe. A five-minute walk from here, in the basement of an 1890 Victorian twin house, sits r730-04. Last weekend, I configured it to boot from two SATADOM drives. Today, I’ll move that zpool to a pair of larger drives. I’ve done this move before; it is a common way to increase zpool capacity.

Later today, I’ll move the zroot back to the SATADOMs, thereby decreasing the capacity of the zpool. That objective is less sought after: rarely do you want less capacity. That process will be a dry run and proof of concept before I do the same thing on r730-01.

In this post:

- FreeBSD 14.3

New drives in

When moving from one set of drives to another, it is safer if you can add the new devices to the host alongside the old ones and let ZFS do its work. I have been known to leave a drive sitting loose inside the chassis to do that.

This morning, before heading to the cafe, I inserted two SSDs into the drive slots at the front of the case. They are the same drives I removed from r730-01 to make room for a 4x NVMe card. (I just realized I took photos of that but never posted them.)

This is what appeared in /var/log/messages when I did that. I see now the date is wrong on that host. I’ll fix that.

Nov 22 03:00:00 r730-04 kernel: mfi0: 94056838 (817095600s/0x0020/info) - Patrol Read can't be started, as PDs are either not ONLINE, or are in a VD with an active process, or are in an excluded VD
Nov 22 11:52:49 r730-04 kernel: mfi0: 94056839 (817127571s/0x0002/info) - Inserted: PD 00(e0x20/s0)
Nov 22 11:52:49 r730-04 kernel: mfisyspd0 numa-domain 0 on mfi0
Nov 22 11:52:49 r730-04 kernel: mfisyspd0: 953869MB (1953525168 sectors) SYSPD volume (deviceid: 0)
Nov 22 11:52:49 r730-04 kernel: mfisyspd0: SYSPD volume attached
Nov 22 11:52:49 r730-04 kernel: mfi0: 94056840 (817127571s/0x0002/info) - Inserted: PD 00(e0x20/s0) Info: enclPd=20, scsiType=0, portMap=00, sasAddr=4433221104000000,0000000000000000
Nov 22 11:52:49 r730-04 kernel: mfi0: 94056841 (817127571s/0x0002/WARN) - PD 00(e0x20/s0) is not a certified drive
Nov 22 11:53:14 r730-04 kernel: mfi0: 94056842 (817127596s/0x0002/info) - Inserted: PD 01(e0x20/s1)
Nov 22 11:53:14 r730-04 kernel: mfisyspd1 numa-domain 0 on mfi0
Nov 22 11:53:14 r730-04 kernel: mfisyspd1: 953869MB (1953525168 sectors) SYSPD volume (deviceid: 1)
Nov 22 11:53:14 r730-04 kernel: mfisyspd1: SYSPD volume attached
Nov 22 11:53:14 r730-04 kernel: mfi0: 94056843 (817127596s/0x0002/info) - Inserted: PD 01(e0x20/s1) Info: enclPd=20, scsiType=0, portMap=01, sasAddr=4433221100000000,0000000000000000
Nov 22 11:53:14 r730-04 kernel: mfi0: 94056844 (817127596s/0x0002/WARN) - PD 01(e0x20/s1) is not a certified drive

Here, I fix that date:

dvl@r730-04:~ $ su
Password:
root@r730-04:/home/dvl # date 202511191236
Wed Nov 19 12:36:00 UTC 2025
root@r730-04:/home/dvl # tail -2 /var/log/messages
Nov 22 12:35:32 r730-04 su[10654]: dvl to root on /dev/pts/0
Nov 19 12:36:00 r730-04 date[10656]: date set by dvl

Changing from mfi to mrsas

The mfi0 messages above go away when I switch to the mrsas driver. I enabled mrsas in /boot/loader.conf and rebooted.
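For reference, the mrsas(4) manual page describes the loader tunable hw.mfi.mrsas_enable="1", which tells mfi(4) to leave supported controllers to mrsas(4). Assuming that is the setting in question, here is a minimal sketch that appends it only once. enable_mrsas is a hypothetical name, and the optional file argument lets you try it on a scratch copy first.

```shell
#!/bin/sh
# enable_mrsas [FILE]: append the mrsas(4) loader tunable if it is not already there.
# Assumption: hw.mfi.mrsas_enable="1" is the tunable wanted; check mrsas(4) first.
# FILE defaults to /boot/loader.conf.
enable_mrsas() {
    conf="${1:-/boot/loader.conf}"
    line='hw.mfi.mrsas_enable="1"'
    # -q: quiet, -x: match the whole line, -F: fixed string, not a regex
    if grep -qxF "$line" "$conf" 2>/dev/null; then
        echo "already present in $conf"
    else
        printf '%s\n' "$line" >> "$conf"
        echo "added to $conf; takes effect after a restart"
    fi
}
```

Run it as root with no argument to edit /boot/loader.conf itself, then restart with shutdown -r now. Afterwards the drives attach as daN rather than mfisyspdN.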

shutdown -r now is not the same as reboot

When I say rebooted, I do not mean the reboot command. reboot does not run the rc.d shutdown scripts; shutdown -r now does. In general, I always use shutdown -r now (and sometimes shutdown -r +2 to give any logged-in users some notice).

After the restart (via shutdown -r now), this was in /var/log/messages:

Nov 19 12:46:02 r730-04 kernel: AVAGO MegaRAID SAS FreeBSD mrsas driver version: 07.709.04.00-fbsd
Nov 19 12:46:02 r730-04 kernel: mrsas0: port 0x2000-0x20ff mem 0x92000000-0x9200ffff,0x91f00000-0x91ffffff at device 0.0 numa-domain 0 on pci3

What drives do I have?

I often get this wrong on the first attempt:

dvl@r730-04:~ $ sysctl kern.drives
sysctl: unknown oid 'kern.drives'
dvl@r730-04:~ $ sysctl kern.disks
kern.disks: da1 da0 ada1 ada0
dvl@r730-04:~ $

There are four drives. But which drive is which?

Nov 19 12:46:02 r730-04 kernel: ada1 at ahcich9 bus 0 scbus12 target 0 lun 0
Nov 19 12:46:02 r730-04 kernel: ada1: ACS-2 ATA SATA 3.x device
Nov 19 12:46:02 r730-04 kernel: ada1: Serial Number 20170719[redacted]
Nov 19 12:46:02 r730-04 kernel: ada1: 600.000MB/s transfers (SATA 3.x, UDMA6, PIO 1024bytes)
Nov 19 12:46:02 r730-04 kernel: ada1: Command Queueing enabled
Nov 19 12:46:02 r730-04 kernel: ada1: 118288MB (242255664 512 byte sectors)
Nov 19 12:46:02 r730-04 kernel: ses1: ada1,pass4 in 'Slot 05', SATA Slot: scbus12 target 0

That’s one of my SATADOMs, part of the zpool I’m about to enlarge.

Nov 19 12:46:02 r730-04 kernel: da1 at mrsas0 bus 1 scbus1 target 1 lun 0
Nov 19 12:46:02 r730-04 kernel: da1: Fixed Direct Access SPC-4 SCSI device
Nov 19 12:46:02 r730-04 kernel: da1: Serial Number S3Z8N[redacted]
Nov 19 12:46:02 r730-04 kernel: da1: 150.000MB/s transfers
Nov 19 12:46:02 r730-04 kernel: da1: 953869MB (1953525168 512 byte sectors)
Nov 19 12:46:02 r730-04 kernel: da1: quirks=0x8<4K>

That’s one of the drives I’m going to migrate the zpool to.
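FreeBSD also saves the boot-time kernel messages in /var/run/dmesg.boot, without the syslog prefix. Here is a small sketch that prints those lines for every name in kern.disks. describe_disks is a hypothetical helper. The anchored pattern assumes each line starts with the device name, as the kernel lines above do.

```shell
#!/bin/sh
# describe_disks DISKS [FILE]: print the boot-time kernel lines for each disk.
#   DISKS: space-separated names, e.g. the output of `sysctl -n kern.disks`
#   FILE:  defaults to /var/run/dmesg.boot
describe_disks() {
    disks="$1"
    file="${2:-/var/run/dmesg.boot}"
    for d in $disks; do
        # Anchored at ^ so "da1" matches "da1 at ..." and "da1: ..."
        # but not "ada1: ...".
        grep -E "^$d( at |: )" "$file"
    done
}
```

On the host: describe_disks "$(sysctl -n kern.disks)".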

Drive preparation

I just realized this is not as simple as I thought. I need to duplicate more than just the zpool.

This is one of the drives now in use:

dvl@r730-04:~ $ gpart show ada0
=>        40  242255584  ada0  GPT  (116G)
          40     532480     1  efi  (260M)
      532520       2008        - free -  (1.0M)
      534528   16777216     2  freebsd-swap  (8.0G)
    17311744  224942080     3  freebsd-zfs  (107G)
   242253824       1800        - free -  (900K)
dvl@r730-04:~ $

The zpool replace command will duplicate only partition #3 (freebsd-zfs). I’m on my own for duplicating the efi partition. That gives me pause.

First, I duplicated the partition layout using gpart backup | restore. I also resized the last partition (freebsd-zfs) to fill the larger drive.

Now I have this:

root@r730-04:~ # gpart show da0
=>          34  1953525101  da0  GPT  (932G)
            34           6       - free -  (3.0K)
            40      532480    1  efi  (260M)
        532520        2008       - free -  (1.0M)
        534528    16777216    2  freebsd-swap  (8.0G)
      17311744  1936213384    3  freebsd-zfs  (923G)
    1953525128           7       - free -  (3.5K)

Then I configured da1 the same way:

root@r730-04:~ # gpart backup da0 | gpart restore da1
root@r730-04:~ # gpart show da1
=>          34  1953525101  da1  GPT  (932G)
            34           6       - free -  (3.0K)
            40      532480    1  efi  (260M)
        532520        2008       - free -  (1.0M)
        534528    16777216    2  freebsd-swap  (8.0G)
      17311744  1936213384    3  freebsd-zfs  (923G)
    1953525128           7       - free -  (3.5K)
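The layout copy can be written down as a short command list. This sketch only prints the gpart(8) commands, so you can review them before piping them to sh -e. clone_layout is a hypothetical name. Note that gpart restore -F destroys any existing partition table on the target. The -a 4k alignment on gpart resize is an assumption, though it is consistent with the partition 3 size shown above.

```shell
#!/bin/sh
# clone_layout SRC DST: print the commands that copy SRC's GPT layout onto
# DST and grow partition 3 (freebsd-zfs) to fill DST, aligned to 4 KiB.
# Nothing is executed here; pipe the output to `sh -e` once it looks right.
clone_layout() {
    src="$1"
    dst="$2"
    # -F: destroy any existing partition table on DST before restoring
    echo "gpart backup $src | gpart restore -F $dst"
    # -i 3: partition index; with no -s, resize grows to the available space
    echo "gpart resize -i 3 -a 4k $dst"
    echo "gpart show $dst"
}
```

For example, clone_layout ada0 da0 | sh -e, and likewise for da1.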

However, the efi partition still needs its boot code.

Bootcode

The boot code is architecture-specific. This host is amd64, so the UEFI loader goes in as EFI/BOOT/BOOTX64.efi.

This is what I did:

root@r730-04:~ # newfs_msdos -F 32 -c 1 /dev/da0p1
/dev/da0p1: 524256 sectors in 524256 FAT32 clusters (512 bytes/cluster)
BytesPerSec=512 SecPerClust=1 ResSectors=32 FATs=2 Media=0xf0 SecPerTrack=63 Heads=255 HiddenSecs=0 HugeSectors=532480 FATsecs=4096 RootCluster=2 FSInfo=1 Backup=2
root@r730-04:~ # mount -t msdosfs /dev/da0p1 /mnt
root@r730-04:~ # mkdir -p /mnt/EFI/BOOT
root@r730-04:~ # cp /boot/loader.efi /mnt/EFI/BOOT/BOOTX64.efi
root@r730-04:~ # umount /mnt

Then I repeated the same steps for da1.
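The EFI steps above can be generated for each new drive in one go. This sketch only prints the commands for review. setup_efi is a hypothetical name. It assumes partition 1 is the efi partition on every disk passed in, and that /mnt is free to use as a temporary mount point.

```shell
#!/bin/sh
# setup_efi DISK...: print the commands that format partition 1 of each disk
# as FAT32 and install the amd64 UEFI loader at EFI/BOOT/BOOTX64.efi.
# Nothing is executed here; pipe the output to `sh -e` after reviewing it.
setup_efi() {
    for disk in "$@"; do
        part="/dev/${disk}p1"
        printf '%s\n' \
            "newfs_msdos -F 32 -c 1 $part" \
            "mount -t msdosfs $part /mnt" \
            "mkdir -p /mnt/EFI/BOOT" \
            "cp /boot/loader.efi /mnt/EFI/BOOT/BOOTX64.efi" \
            "umount /mnt"
    done
}
```

With setup_efi da0 da1 | sh -e, a failed mount stops the run before anything is copied.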

autoexpand

If autoexpand is on, the pool grows automatically once its devices are larger, without having to export and import the pool or reboot the system.

See also the expandsize property, which is more useful with virtual drives (for example, under a hypervisor, and perhaps with file-based devices).

dvl@r730-04:~ $ zpool get all zroot | grep expand
zroot  autoexpand  off  default
zroot  expandsize  -    -

As you can see, autoexpand is off. You may want to turn it on before replacing the drives. I didn’t; further down are the zpool online commands I used to fix that.
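Turning it on ahead of time is a single zpool set autoexpand=on zroot. Here is a minimal sketch that checks first; zpool get -H -o value prints only the property value, with no header. ensure_autoexpand is a hypothetical name. The ZPOOL variable exists only so the function can be tried against a stand-in command instead of the real zpool(8).

```shell
#!/bin/sh
# ensure_autoexpand POOL: turn autoexpand on if it is not already on.
# ZPOOL defaults to zpool(8); it can name any command or shell function.
ensure_autoexpand() {
    pool="$1"
    zpool_cmd="${ZPOOL:-zpool}"
    # -H: scripted mode (no header), -o value: print only the value column
    current=$("$zpool_cmd" get -H -o value autoexpand "$pool") || return 1
    if [ "$current" = "on" ]; then
        echo "$pool: autoexpand already on"
    else
        "$zpool_cmd" set autoexpand=on "$pool" && echo "$pool: autoexpand set to on"
    fi
}
```

On the host: ensure_autoexpand zroot, run as root.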

zpool replace

As described in my plan under What’s Next, I will use zpool replace to replace the existing devices in the zroot pool, one at a time.

This is the zpool I’m starting with:

root@r730-04:~ # zpool status
  pool: zroot
 state: ONLINE
  scan: scrub repaired 0B in 00:00:02 with 0 errors on Wed Nov 19 18:06:13 2025
config:
        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            ada0p3  ONLINE       0     0     0
            ada1p3  ONLINE       0     0     0
errors: No known data errors
root@r730-04:~ #

Here’s the first command. I selected da1 first, for no reason.

root@r730-04:~ # zpool replace zroot ada1p3 da1p3
root@r730-04:~ # zpool status
  pool: zroot
 state: ONLINE
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Wed Nov 19 13:57:23 2025
        1.07G / 1.07G scanned, 466M / 1.07G issued at 116M/s
        460M resilvered, 42.62% done, 00:00:05 to go
config:
        NAME             STATE     READ WRITE CKSUM
        zroot            ONLINE       0     0     0
          mirror-0       ONLINE       0     0     0
            ada0p3       ONLINE       0     0     0
            replacing-1  ONLINE       0     0     0
              ada1p3     ONLINE       0     0     0
              da1p3      ONLINE       0     0     0  (resilvering)
errors: No known data errors
root@r730-04:~ #

Not long after that, all was well:

root@r730-04:~ # zpool status
  pool: zroot
 state: ONLINE
  scan: resilvered 1.10G in 00:00:13 with 0 errors on Wed Nov 19 13:57:36 2025
config:
        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            ada0p3  ONLINE       0     0     0
            da1p3   ONLINE       0     0     0
errors: No known data errors

The zroot is still the same size:

root@r730-04:~ # zpool list
NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
zroot   107G  1.07G   106G        -         -     0%     0%  1.00x  ONLINE  -

Now for the next drive:

root@r730-04:~ # zpool replace zroot ada0p3 da0p3
root@r730-04:~ # zpool status
  pool: zroot
 state: ONLINE
  scan: resilvered 1.10G in 00:00:12 with 0 errors on Wed Nov 19 13:59:07 2025
config:
        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            da0p3   ONLINE       0     0     0
            da1p3   ONLINE       0     0     0
errors: No known data errors
root@r730-04:~ # zpool list
NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
zroot   107G  1.07G   106G        -         -     0%     0%  1.00x  ONLINE  -

Still the same size.

Oh yes, autoexpand was off.

How to fix that… I recall the online command:

root@r730-04:~ # zpool online zroot da1p3
root@r730-04:~ # zpool list
NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
zroot   107G  1.07G   106G        -      815G     0%     0%  1.00x  ONLINE  -
root@r730-04:~ # zpool online zroot da0p3
root@r730-04:~ # zpool list
NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
zroot   107G  1.07G   106G        -      815G     0%     0%  1.00x  ONLINE  -

No, that’s not it. Oh yes, I need the -e (expand) option:

root@r730-04:~ # zpool online -e zroot da0p3
root@r730-04:~ # zpool online -e zroot da1p3
root@r730-04:~ # zpool list
NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
zroot   923G  1.07G   922G        -         -     0%     0%  1.00x  ONLINE  -
root@r730-04:~ #

OK, the zpool has been migrated to larger drives.
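The whole migration, one device at a time, fits in a short command list. This sketch assumes the OpenZFS 2.x zpool(8) in FreeBSD 14.3, where zpool replace -w waits for the resilver to finish before returning. migrate_pool and its OLD:NEW argument format are hypothetical. Review the output, then pipe it to sh -e.

```shell
#!/bin/sh
# migrate_pool POOL OLD:NEW...: print the commands that replace each OLD device
# with NEW, one at a time, then expand every NEW device to its full size.
migrate_pool() {
    pool="$1"
    shift
    for pair in "$@"; do
        old="${pair%%:*}"   # text before the first colon
        new="${pair#*:}"    # text after the first colon
        # -w: wait for this resilver to complete before the next replace starts
        echo "zpool replace -w $pool $old $new"
    done
    for pair in "$@"; do
        # -e: expand the device to use all available space
        echo "zpool online -e $pool ${pair#*:}"
    done
    echo "zpool list $pool"
}
```

For this host: migrate_pool zroot ada1p3:da1p3 ada0p3:da0p3. Waiting between replacements means the mirror always holds at least one fully resilvered device.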

Will it boot?

Will it boot? It’s of no use if it will not boot.

I am sure I need to go into System Setup and change the boot settings.

I’m rather cautious, even paranoid, about this step, so first I scrubbed the pool:

root@r730-04:~ # zpool scrub zroot
root@r730-04:~ # zpool status
  pool: zroot
 state: ONLINE
  scan: scrub repaired 0B in 00:00:06 with 0 errors on Wed Nov 19 14:09:05 2025
config:
        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            da0p3   ONLINE       0     0     0
            da1p3   ONLINE       0     0     0
errors: No known data errors
root@r730-04:~ #

Yes, of course, it SHOULD be fine, but now I know it is.
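The same check can be scripted. Here is a rough sketch that reads zpool status on stdin and succeeds only if the pool is ONLINE and the last scrub ended with 0 errors. It assumes the status layout shown above and parses text, so treat it as a convenience rather than an official interface. scrub_ok is a hypothetical name. On OpenZFS 2.x, zpool scrub -w waits for the scrub to finish.

```shell
#!/bin/sh
# scrub_ok: read `zpool status POOL` output on stdin; exit 0 only if the
# state line says ONLINE and the scan line reports a scrub with 0 errors.
scrub_ok() {
    awk '
        $1 == "state:" && $2 == "ONLINE" { online = 1 }
        $1 == "scan:" && /scrub repaired/ && /with 0 errors/ { clean = 1 }
        END { exit !(online && clean) }
    '
}
```

On the host: zpool scrub -w zroot && zpool status zroot | scrub_ok && echo "zroot scrubbed clean".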

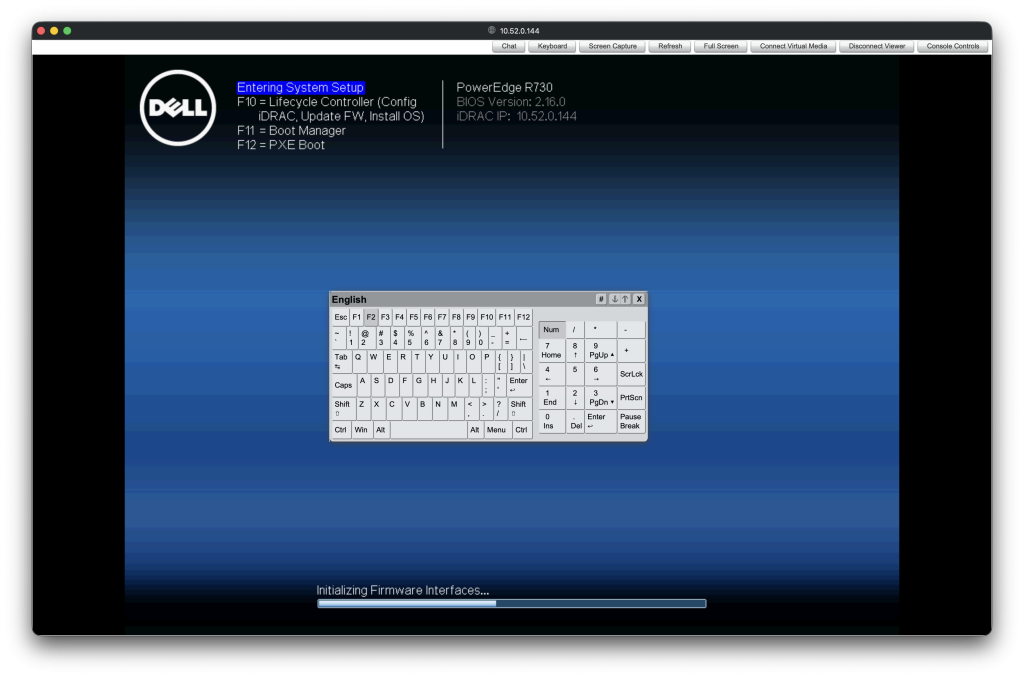

I have the iDRAC console open (from this cafe). Let’s go into system setup.

First, I pressed F2 when the option appeared:

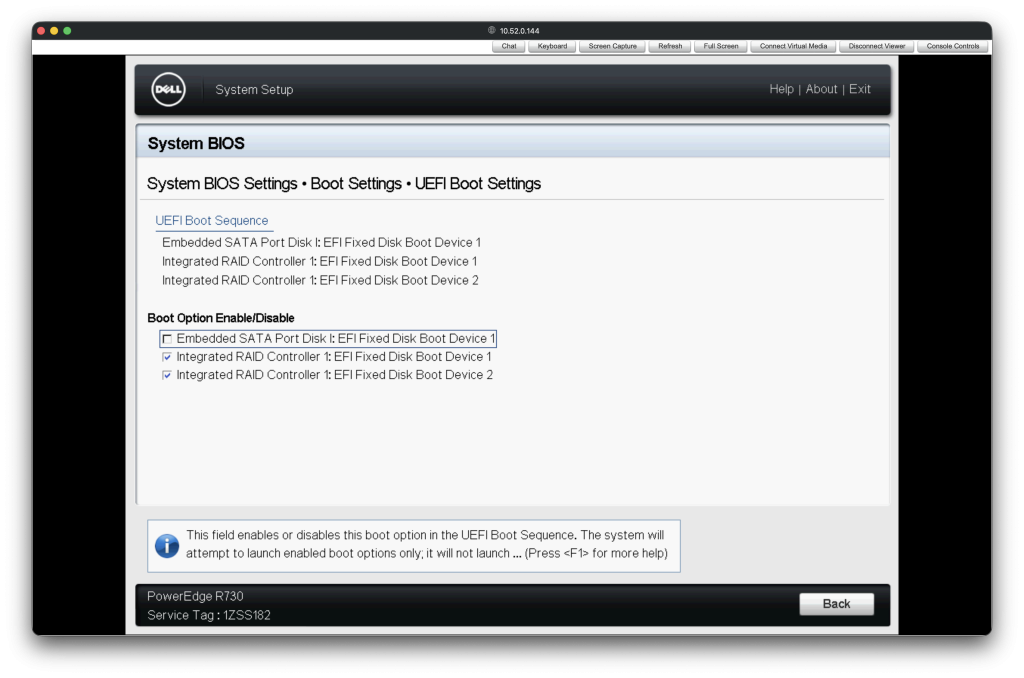

I went into the BIOS settings and disabled booting from the SATA ports on the main board.

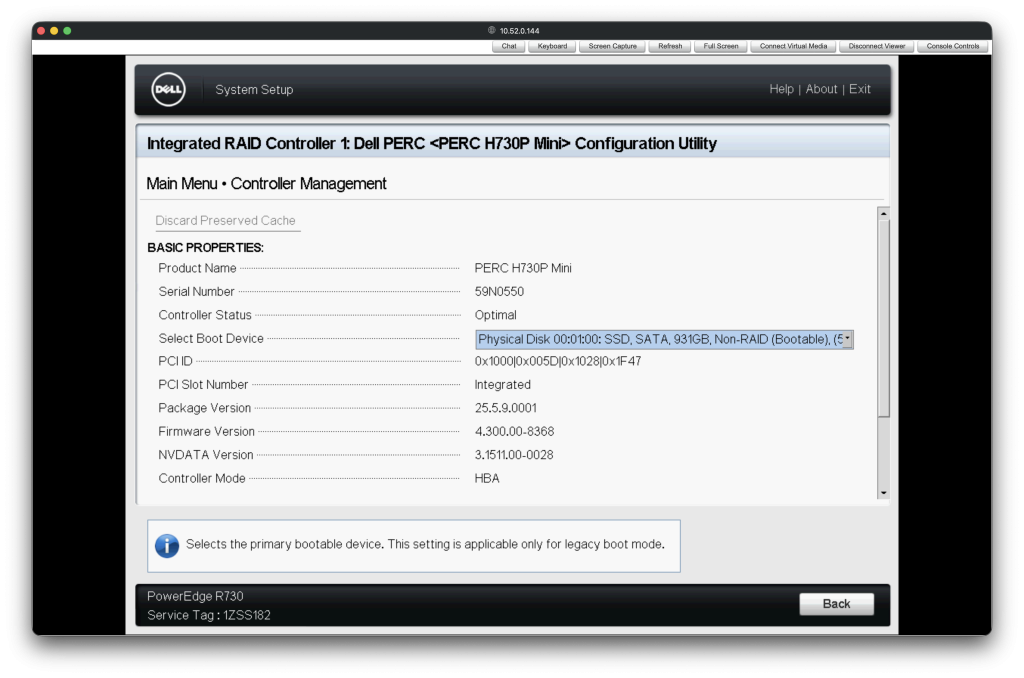

I also selected the first SATA device on the disk controller.

Restart, and hope. Then, logging into the host, there we go:

dvl@r730-04:~ $ uptime
 2:36PM  up 1 min, 1 user, load averages: 0.11, 0.06, 0.02
dvl@r730-04:~ $ date
Wed Nov 19 14:36:41 UTC 2025
dvl@r730-04:~ $ zpool status
  pool: zroot
 state: ONLINE
  scan: scrub repaired 0B in 00:00:06 with 0 errors on Wed Nov 19 14:09:05 2025
config:
        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            da0p3   ONLINE       0     0     0
            da1p3   ONLINE       0     0     0
errors: No known data errors
dvl@r730-04:~ $

Definitely on the new zpool.
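Here is a post-boot sanity check. It reads zpool status on stdin and confirms that the pool lists the new partitions and none of the old ones. on_devices is a hypothetical helper. The device names in the usage line are the ones from this post.

```shell
#!/bin/sh
# on_devices "NEW..." "OLD...": read `zpool status` on stdin; succeed only if
# every NEW device appears as a vdev row and no OLD device does.
on_devices() {
    want="$1"
    gone="$2"
    # First field of each line; vdev rows start with the device name.
    names=$(awk '{ print $1 }')
    for d in $want; do
        if ! printf '%s\n' "$names" | grep -qxF "$d"; then
            echo "missing: $d"
            return 1
        fi
    done
    for d in $gone; do
        if printf '%s\n' "$names" | grep -qxF "$d"; then
            echo "still present: $d"
            return 1
        fi
    done
    return 0
}
```

On the host: zpool status zroot | on_devices "da0p3 da1p3" "ada0p3 ada1p3" && echo "zroot is on the new drives".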

Success. Next, let’s go the other way with zpool add.