I’ve been having boot issues with a server containing 20 HDDs, all behind HBAs. I have decided to start booting off SSDs.

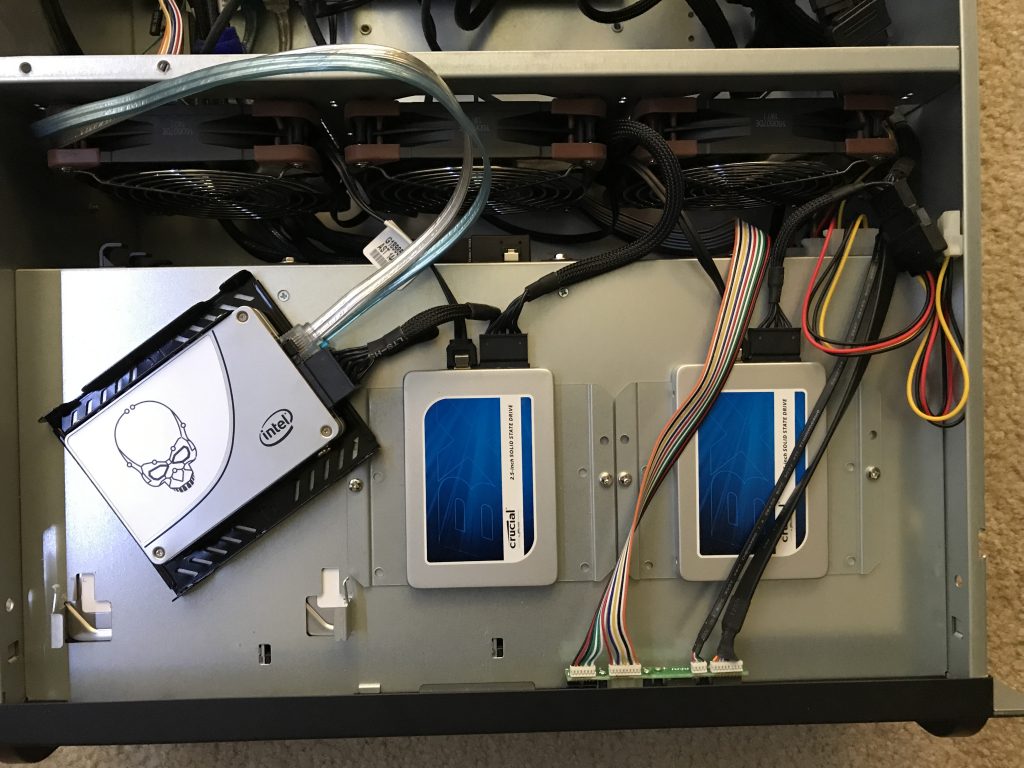

In that previous blog post, there was much speculation about where to mount the new drives, etc. In the meantime, I came up with a solution which did not involve magnets and used a pair of INTEL 730 SSDs I had in another chassis.

Let’s start with how I configured those SSDs.

At the end of this post are some tips to make your process easier.

We are using mfsBSD (based on FreeBSD 11.1), the server is running FreeBSD 10.3, and more information on that server is available.

zroot pool configuration

These steps were carried out on another server. When completed, the SSDs were moved into the server in question.

I initially did this with a 10G freebsd-zfs partition, but concluded that was too small so I used 20G.

sudo gpart create -s gpt ada0

sudo gpart add -s 512K -t freebsd-boot -a 1M ada0

sudo gpart add -s 4G -t freebsd-swap -a 1M ada0

sudo gpart add -s 20G -t freebsd-zfs -a 1M ada0

sudo gpart create -s gpt ada1

sudo gpart add -s 512K -t freebsd-boot -a 1M ada1

sudo gpart add -s 4G -t freebsd-swap -a 1M ada1

sudo gpart add -s 20G -t freebsd-zfs -a 1M ada1

Here is what that creates:

[dan@knew:~] $ gpart show ada0 ada1

=> 40 468862048 ada0 GPT (224G)

40 2008 - free - (1.0M)

2048 1024 1 freebsd-boot (512K)

3072 1024 - free - (512K)

4096 8388608 2 freebsd-swap (4.0G)

8392704 41943040 3 freebsd-zfs (20G)

50335744 418526344 - free - (200G)

=> 40 468862048 ada1 GPT (224G)

40 2008 - free - (1.0M)

2048 1024 1 freebsd-boot (512K)

3072 1024 - free - (512K)

4096 8388608 2 freebsd-swap (4.0G)

8392704 41943040 3 freebsd-zfs (20G)

50335744 418526344 - free - (200G)

[dan@knew:~] $
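Both disks get exactly the same layout, so the commands can be generated rather than typed twice. Here is a minimal sketch of that idea. The helper name `partition_cmds` is my own invention for illustration; it only prints the gpart commands shown above, so nothing is written to disk until you review the output and run it yourself.

```shell
#!/bin/sh
# Sketch only: partition_cmds is a hypothetical helper, not part of gpart.
# It prints the same four gpart commands used above for the given disk.
partition_cmds() {
    disk="$1"
    echo "gpart create -s gpt ${disk}"
    echo "gpart add -s 512K -t freebsd-boot -a 1M ${disk}"
    echo "gpart add -s 4G -t freebsd-swap -a 1M ${disk}"
    echo "gpart add -s 20G -t freebsd-zfs -a 1M ${disk}"
}

# Print the commands for both SSDs so they can be reviewed first.
for disk in ada0 ada1; do
    partition_cmds "${disk}"
done
```

Once the printed commands look right, they could be piped to `sudo sh`. Printing first is a deliberate choice: gpart is unforgiving if you name the wrong disk.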

This creates a mirror:

$ sudo zpool create zroot mirror ada0p3 ada1p3

zfs configuration

In this section, I set a number of things and create datasets. This is just for reference.

sudo zfs set compression=on zroot

sudo zfs set atime=off zroot

sudo zfs create zroot/ROOT

sudo zfs create zroot/ROOT/default

sudo zfs create zroot/tmp

sudo zfs create zroot/usr

sudo zfs create zroot/usr/local

sudo zfs create zroot/var

sudo zfs create zroot/var/audit

sudo zfs create zroot/var/crash

sudo zfs create zroot/var/empty

sudo zfs create zroot/var/log

sudo zfs create zroot/var/mail

sudo zfs create zroot/var/tmp

zfs set canmount=noauto zroot/ROOT/default

zfs set canmount=off zroot/usr

zfs set canmount=off zroot/var

zfs set mountpoint=/import zroot/ROOT/default

zfs set mountpoint=/import/zroot zroot

zfs set mountpoint=none zroot/ROOT

zfs set mountpoint=/import zroot/ROOT/default

zfs set mountpoint=/ zroot/ROOT/default

zfs set mountpoint=/tmp zroot/tmp

zfs set mountpoint=/usr zroot/usr

zfs set mountpoint=/var zroot/var

zfs set mountpoint=/zroot zroot

zfs set atime=on zroot/var/mail

zfs set setuid=off zroot/tmp

zfs set exec=off zroot/tmp

zfs set setuid=off zroot/var

zfs set exec=off zroot/var

zfs set setuid=on zroot/var/mail

zfs set exec=on zroot/var/mail

This ensures the SSDs are bootable:

$ sudo gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 ada0

bootcode written to ada0

$ sudo gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 ada1

bootcode written to ada1

$

This defines the default bootable dataset for this zpool:

sudo zpool set bootfs=zroot/ROOT/default zroot

Some assembly required

Not shown: I moved the above referenced SSDs into the server.

For your viewing pleasure, the entire album is online.

Reboot into mfsBSD

mfsBSD is a live working minimal installation of FreeBSD. You can customize it for your own uses, or download an image and burn it to a thumbdrive. I am doing the latter.

By booting from another image, I can attach both the old and new images to alternative mount locations and copy data. Also, the data is guaranteed to be at rest.

The current goals are:

- Copy data from old to new

- Disable old mount points

- Enable new mount points

- Reboot

- Adjust as necessary

When I boot, I start with this:

root@mfsbsd:~ # zpool import -o altroot=/import -N zroot

cannot import 'zroot': more than one matching pool

import by numeric ID instead

Oh, yes, don’t be bothered by that. I had configured another set of SSDs in another box and in this box. Both are now installed here. Thus, I have two zroots in this box. But we can’t have two.

Let’s see what we have:

root@mfsbsd:~ # zpool import -o altroot=/import

pool: tank_data

id: 11338904941440479568

state: ONLINE

status: The pool was last accessed by another system.

action: The pool can be imported using its name or numeric identifier and

the '-f' flag.

see: http://illumos.org/msg/ZFS-8000-EY

config:

tank_data ONLINE

raidz3-0 ONLINE

da9p1 ONLINE

da10p1 ONLINE

da8p1 ONLINE

da11p1 ONLINE

da12p1 ONLINE

da13p1 ONLINE

da16p1 ONLINE

da17p1 ONLINE

da18p1 ONLINE

da19p1 ONLINE

pool: system

id: 15378250086669402288

state: ONLINE

status: The pool was last accessed by another system.

action: The pool can be imported using its name or numeric identifier and

the '-f' flag.

see: http://illumos.org/msg/ZFS-8000-EY

config:

system ONLINE

raidz2-0 ONLINE

da1p3 ONLINE

da7p3 ONLINE

da2p3 ONLINE

da3p3 ONLINE

da4p3 ONLINE

da0p3 ONLINE

da14p3 ONLINE

da5p3 ONLINE

da6p3 ONLINE

da15p3 ONLINE

pool: zroot

id: 11886963753957655559

state: ONLINE

status: The pool was last accessed by another system.

action: The pool can be imported using its name or numeric identifier and

the '-f' flag.

see: http://illumos.org/msg/ZFS-8000-EY

config:

zroot ONLINE

mirror-0 ONLINE

diskid/DISK-1543F00F287Fp3 ONLINE

diskid/DISK-1543F00F3587p3 ONLINE

pool: zroot

id: 15879539137961201777

state: ONLINE

status: The pool was last accessed by another system.

action: The pool can be imported using its name or numeric identifier and

the '-f' flag.

see: http://illumos.org/msg/ZFS-8000-EY

config:

zroot ONLINE

mirror-0 ONLINE

diskid/DISK-BTJR509003N7240AGNp3 ONLINE

diskid/DISK-BTJR5090037J240AGNp3 ONLINE

I’m not sure which one is which, so let’s try the first one and import by ID:

root@mfsbsd:~ # zpool import -o altroot=/import -N 11886963753957655559

cannot import 'zroot': pool may be in use from other system, it was last accessed by knew.int.example.org (hostid: 0xcab729ce) on Thu Sep 28 20:32:25 2017

use '-f' to import anyway

Ahh, see the hostname mentioned there? That is *this* host. The ‘other system’ warning is because we booted this box from a thumb drive running mfsBSD; hence it is another system.

This is the zroot I want to destroy/rename.

Let’s look at the other one, just to confirm.

root@mfsbsd:~ # zpool import -o altroot=/import -N 15879539137961201777

cannot import 'zroot': pool may be in use from other system, it was last accessed by mfsbsd (hostid: 0xc807dc72) on Fri Sep 29 12:44:29 2017

use '-f' to import anyway

root@mfsbsd:~ #

Yes, that looks right. It was on mfsBSD that I configured this zroot, albeit on another physical box, booting from this same thumb drive.
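When two importable pools share a name, the numeric ID is the only handle you have, and it is easy to lose track of it in a long listing. A small sketch that reduces `zpool import` output to name and ID pairs follows. The function name `list_pool_ids` is hypothetical, and it assumes the `pool:` and `id:` labels appear as they do in the listing above. The sample input is trimmed from that listing.

```shell
#!/bin/sh
# Sketch only: list_pool_ids is a hypothetical helper.
# It reads `zpool import` output on stdin and prints "name id" per pool.
list_pool_ids() {
    awk '
        $1 == "pool:" { name = $2 }       # remember the most recent pool name
        $1 == "id:"   { print name, $2 }  # pair it with the id that follows
    '
}

# Demonstration using the two zroot entries from the listing above.
list_pool_ids <<'EOF'
   pool: zroot
     id: 11886963753957655559
  state: ONLINE
   pool: zroot
     id: 15879539137961201777
  state: ONLINE
EOF
# prints:
# zroot 11886963753957655559
# zroot 15879539137961201777
```

On a live system the equivalent would be `zpool import | list_pool_ids`.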

Let’s force the first zroot to import:

root@mfsbsd:~ # zpool import -fo altroot=/import -N 11886963753957655559

And mount it. NOTE the -N above specifies ‘Import the pool without mounting any file systems’. That can be very useful if you need to manipulate the properties.

root@mfsbsd:~ # zfs mount zroot

And look inside:

root@mfsbsd:~ # ls /import/zroot

.cshrc boot libexec sbin

.logstash-forwarder dev media sys

.profile entropy mnt tmp

COPYRIGHT etc proc usr

ROOT home rescue var

bin lib root

root@mfsbsd:~ #

Yes, this is the original zroot I created, and no longer want. I want the other zroot, which is empty.

Let’s rename this one by exporting it, then importing it with a new name, zroot_DELETE

root@mfsbsd:~ # zpool export zroot

root@mfsbsd:~ # zpool import -fo altroot=/import -N 11886963753957655559 zroot_DELETE

root@mfsbsd:~ # zpool list

NAME SIZE ALLOC FREE EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot_DELETE 19.9G 8.37G 11.5G - 26% 42% 1.00x ONLINE /import

root@mfsbsd:~ # zfs mount zroot_DELETE

root@mfsbsd:~ # ls /import/zroot

.cshrc boot libexec sbin

.logstash-forwarder dev media sys

.profile entropy mnt tmp

COPYRIGHT etc proc usr

ROOT home rescue var

bin lib root

root@mfsbsd:~ #

Yes, I have renamed the correct one. Let’s export it again, and be done with it for now.

root@mfsbsd:~ # zpool export zroot_DELETE

Back to the pool we want:

root@mfsbsd:~ # zpool import -fo altroot=/import -N 15879539137961201777

root@mfsbsd:~ # zfs mount zroot

root@mfsbsd:~ # zfs list

NAME USED AVAIL REFER MOUNTPOINT

zroot 509K 19.3G 25K /import/zroot

zroot/ROOT 48K 19.3G 23K none

zroot/ROOT/default 25K 19.3G 25K /import

zroot/tmp 23K 19.3G 23K /import/tmp

zroot/usr 46K 19.3G 23K /import/usr

zroot/usr/local 23K 19.3G 23K /import/usr/local

zroot/var 163K 19.3G 25K /import/var

zroot/var/audit 23K 19.3G 23K /import/var/audit

zroot/var/crash 23K 19.3G 23K /import/var/crash

zroot/var/empty 23K 19.3G 23K /import/var/empty

zroot/var/log 23K 19.3G 23K /import/var/log

zroot/var/mail 23K 19.3G 23K /import/var/mail

zroot/var/tmp 23K 19.3G 23K /import/var/tmp

root@mfsbsd:~ # ls /import/zroot

tmp usr var

root@mfsbsd:~ #

Yes, this is the empty one.

Look at the pool details:

root@mfsbsd:~ # zpool status zroot

pool: zroot

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

zroot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

diskid/DISK-BTJR509003N7240AGNp3 ONLINE 0 0 0

diskid/DISK-BTJR5090037J240AGNp3 ONLINE 0 0 0

errors: No known data errors

Looking at that drive, yes, it looks like the correct size to me. I expected a 240G drive:

root@mfsbsd:~ # gpart show diskid/DISK-BTJR509003N7240AGN

=> 40 468862048 diskid/DISK-BTJR509003N7240AGN GPT (224G)

40 2008 - free - (1.0M)

2048 1024 1 freebsd-boot (512K)

3072 1024 - free - (512K)

4096 8388608 2 freebsd-swap (4.0G)

8392704 41943040 3 freebsd-zfs (20G)

50335744 418526344 - free - (200G)

root@mfsbsd:~ #
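In case the 224G in that output looks wrong for a 240G drive: drive vendors count in decimal gigabytes, while gpart reports binary units. A quick worked check, assuming 512-byte sectors (the sector size is not shown in the output above, so treat that as my assumption):

```shell
#!/bin/sh
# Sector count taken from the gpart show output above.
# Assumption: 512-byte sectors.
sectors=468862048
bytes=$((sectors * 512))

echo "bytes:      ${bytes}"
echo "decimal GB: $((bytes / 1000000000))"   # integer division, truncates
echo "binary GiB: $((bytes / 1073741824))"   # integer division, truncates
```

That works out to 240057368576 bytes, which is 240 decimal gigabytes and roughly 223.6 binary gigabytes. gpart rounds the latter to 224G, so the drive is the size I expected.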

Now we can proceed with copying the data over, using rsync.

Placing the data before copying

In order to make source and destination obvious, I am going to mount the new zroot at /new and the original data at /original. First, I export the zroot, since it was imported:

root@mfsbsd:~ # mkdir /new

root@mfsbsd:~ # zpool import -fo altroot=/new zroot

root@mfsbsd:~ #

The original zpool is imported with this command:

root@mfsbsd:~ # mkdir /original

root@mfsbsd:~ # zpool import -fo altroot=/original system

I did not see what I expected at /original:

root@mfsbsd:/new # ls -l /original

total 129

drwxr-xr-x 7 root wheel 20 Sep 28 11:19 root

drwxrwxrwt 8 root wheel 8 Sep 29 13:11 tmp

drwxr-xr-x 8 root wheel 384 Sep 29 14:59 usr

drwxr-xr-x 6 root wheel 256 Sep 29 14:59 var

That is not a typical listing for /. I think the problem is mount related.

root@mfsbsd:/new # zfs set mountpoint=/ system/rootfs

root@mfsbsd:/new # ls -l /original/

total 289

-rw-r--r-- 1 root wheel 966 Jul 26 2016 .cshrc

-rw-r--r-- 1 root wheel 134 Jan 31 2017 .logstash-forwarder

-rw-r--r-- 1 root wheel 254 Jul 26 2016 .profile

-r--r--r-- 1 root wheel 6197 Jul 26 2016 COPYRIGHT

drwxr-xr-x 2 root wheel 47 Sep 27 20:19 bin

drwxr-xr-x 10 root wheel 53 Sep 27 20:19 boot

dr-xr-xr-x 2 root wheel 2 Dec 4 2012 dev

-rw------- 1 root wheel 4096 Sep 29 13:11 entropy

drwxr-xr-x 23 root wheel 133 Sep 27 20:17 etc

lrwxr-xr-x 1 root wheel 8 Jul 18 2013 home -> usr/home

drwxr-xr-x 3 root wheel 3 Sep 28 18:14 import

drwxr-xr-x 3 root wheel 52 Feb 23 2017 lib

drwxr-xr-x 3 root wheel 4 Jul 26 2016 libexec

drwxr-xr-x 2 root wheel 2 Dec 4 2012 media

drwxr-xr-x 3 root wheel 3 Aug 30 21:17 mnt

dr-xr-xr-x 2 root wheel 2 Dec 4 2012 proc

drwxr-xr-x 2 root wheel 146 Dec 11 2016 rescue

drwxr-xr-x 2 root wheel 8 Feb 21 2016 root

drwxr-xr-x 2 root wheel 132 Apr 29 18:01 sbin

lrwxr-xr-x 1 root wheel 11 Dec 4 2012 sys -> usr/src/sys

drwxr-xr-x 2 root wheel 2 Dec 1 2016 tank_data

drwxrwxrwt 6 root wheel 6 Dec 19 2015 tmp

drwxr-xr-x 17 root wheel 18 Aug 23 2015 usr

drwxr-xr-x 29 root wheel 29 Sep 28 10:52 var

drwxr-xr-x 2 root wheel 2 Aug 30 21:11 zoomzoom

root@mfsbsd:/new #

That. That is better looking.

rsync

This is the rsync which made sense for my uses:

# rsync --progress --archive --exclude-from '/original/usr/home/dan/rsync-exclude.txt' /original/ /new/

What’s in that file? A bunch of stuff I did not want to copy over.

$ cat /usr/home/dan/rsync-exclude.txt

zoomzoom

tank_data

usr/home

usr/jails

usr/obj

usr/ports

usr/src

import
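One caveat with a list like that: as I understand rsync's filter rules, a pattern without a leading slash is not tied to the top of the transfer, so a bare `import` can also match a file or directory named import deeper in the tree. A sketch of an anchored variant follows. The helper `write_excludes` and the temporary file name are hypothetical, and the script only prints the rsync command rather than running it.

```shell
#!/bin/sh
# Sketch only: write_excludes is a hypothetical helper.
# It writes the same exclusions as above, each anchored with a leading "/"
# so it matches only at the root of the transfer (/original/ here).
write_excludes() {
    cat > "$1" <<'EOF'
/zoomzoom
/tank_data
/usr/home
/usr/jails
/usr/obj
/usr/ports
/usr/src
/import
EOF
}

excludes="${TMPDIR:-/tmp}/rsync-exclude-anchored.txt"
write_excludes "${excludes}"

# Print (do not run) a dry-run invocation that uses the anchored list.
echo "rsync --dry-run --archive --exclude-from '${excludes}' /original/ /new/"
```

Running the printed command with `--dry-run` first shows what would be copied before anything is written to /new.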

Why not use zfs send | zfs receive? Good question. The data I want is not complete datasets. rsync is a better fit.

After the rsync completed, I started adjusting the zpools for their intended use and mountpoints.

This short script turns off the canmount property for the datasets in the system zpool which are no longer required:

#!/bin/sh

DATASETS="system

system/root

system/rootfs

system/tmp

system/usr/local

system/var/audit

system/var/empty

system/var/log

system/var/tmp"

for dataset in ${DATASETS}

do

zfs set canmount=off ${dataset}

done
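After a loop like that, it is worth confirming that nothing in the old pool is still eligible to mount. A minimal sketch follows. The helper `still_mountable` is hypothetical; it expects the tab-separated name and value pairs that `zfs get -H -o name,value -r canmount system` prints. The sample rows in the demonstration are made up for illustration and are not output from my server.

```shell
#!/bin/sh
# Sketch only: still_mountable is a hypothetical helper.
# Input: "name<TAB>value" lines, as printed by
#   zfs get -H -o name,value -r canmount system
# Output: names of datasets whose canmount is anything other than "off".
still_mountable() {
    awk -F '\t' '$2 != "off" { print $1 }'
}

# Demonstration with made-up sample rows (not real output).
printf 'system/rootfs\toff\nsystem/usr/home\ton\n' | still_mountable
# prints: system/usr/home
```

Anything the filter prints is a dataset that can still be mounted at boot, which is either intentional or a problem waiting to happen.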

I mentioned this previously, but bootfs must be adjusted. I need to clear and set bootfs on the old and new boot pools:

# zpool get bootfs

NAME PROPERTY VALUE SOURCE

system bootfs system/rootfs local

zroot bootfs zroot/ROOT/default local

zroot is already set. Now clear the system value:

# zpool set bootfs= system

# zpool get bootfs

NAME PROPERTY VALUE SOURCE

system bootfs - default

zroot bootfs zroot/ROOT/default local

OK, let’s reboot

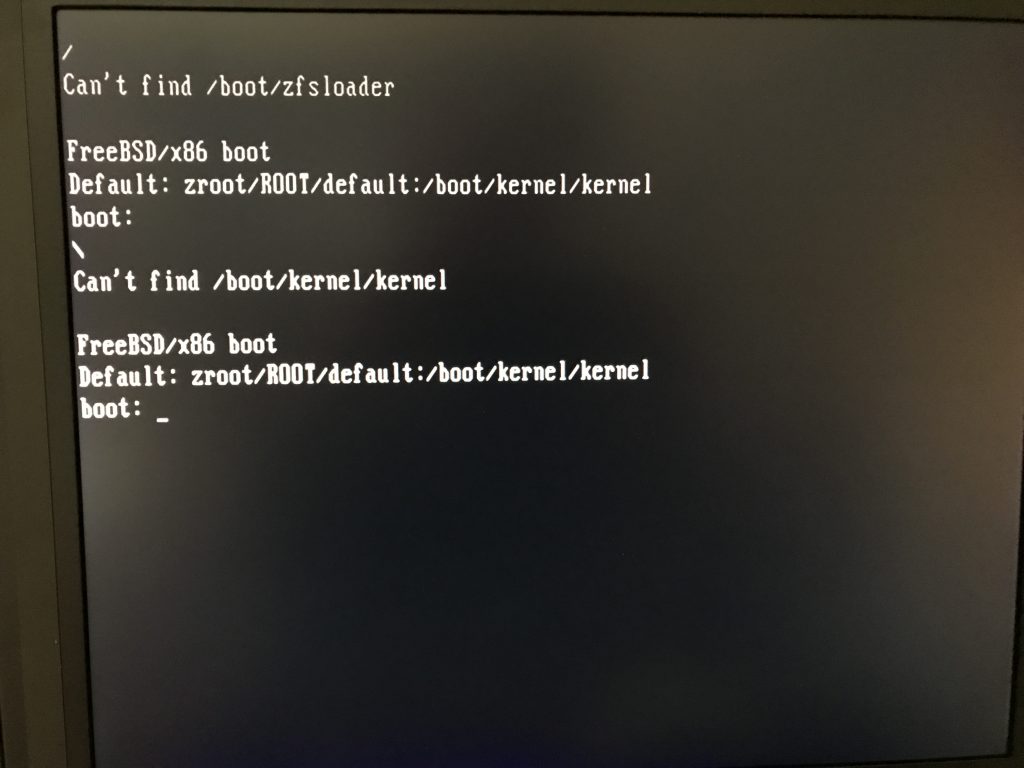

The first reboot failed with this message:

Can't find /boot/zfsloader

Can't find /boot/kernel/kernel

I took a snapshot of that boot screen.


The fix: boot back into mfsBSD and issue this command:

zfs set canmount=on zroot/ROOT/default

NOTE: I should have disabled jails in /etc/rc.conf before rebooting. Instead, I resorted to CONTROL-C at the console to stop the jails from starting up.

Second boot

On the second boot attempt, I was prompted for a password. Usually, I get in with my ssh-key.

Let’s see how this looks:

[dan@knew /]$ beadm list

BE Active Mountpoint Space Created

default NR / 7.8G 2017-09-28 21:37

[dan@knew /]$

That’s good. Let’s look at zfs:

[dan@knew /]$ zfs list

NAME USED AVAIL REFER MOUNTPOINT

tank_data 23.4T 5.83T 4.40G /tank_data

tank_data/data 22.4T 5.83T 256K none

tank_data/data/bacula 884K 5.83T 277K /usr/jails/bacula-sd-01/usr/local/bacula

tank_data/data/bacula-volumes 22.4T 5.83T 256K none

tank_data/data/bacula-volumes/FullFile 22.4T 5.83T 20.3T /usr/jails/bacula-sd-01/usr/local/bacula/volumes/FullFile

tank_data/data/bacula/spooling 256K 5.83T 256K /usr/jails/bacula-sd-01/usr/local/bacula/spooling

tank_data/data/bacula/working 352K 5.83T 352K /usr/jails/bacula-sd-01/usr/local/bacula/working

tank_data/foo 256K 5.83T 256K /tank_data/foo

tank_data/logging 19.3G 5.83T 19.3G /tank_data/logging

tank_data/time_capsule 975G 5.83T 767K /tank_data/time_capsule

tank_data/time_capsule/dvl-air01 693G 57.2G 693G /tank_data/time_capsule/dvl-air01

tank_data/time_capsule/dvl-dent 282G 218G 282G /tank_data/time_capsule/dvl-dent

zroot 10.1G 9.12G 23K /zroot

zroot/ROOT 7.77G 9.12G 23K none

zroot/ROOT/default 7.77G 9.12G 7.77G /

zroot/tmp 25K 9.12G 25K /tmp

zroot/usr 373M 9.12G 23K /usr

zroot/usr/local 373M 9.12G 373M /usr/local

zroot/var 2.00G 9.12G 25K /var

zroot/var/audit 23K 9.12G 23K /var/audit

zroot/var/crash 1.50G 9.12G 1.50G /var/crash

zroot/var/empty 23K 9.12G 23K /var/empty

zroot/var/log 516M 9.12G 516M /var/log

zroot/var/mail 27K 9.12G 27K /var/mail

zroot/var/tmp 33K 9.12G 33K /var/tmp

[dan@knew /]$

There is no system zpool. That’s bad. It’s also why I can’t use my ssh public key: my home directory is not mounted.

Let’s fix that up.

Screwed it up

I booted back into mfsBSD and imported the system zpool, completely messing up my run-time environment.

[root@knew:~] # zpool import system zfs list

[root@knew:~] # zfs list

bash: /sbin/zfs: No such file or directory

[root@knew:~] # zfs list

bash: /sbin/zfs: No such file or directory

[root@knew:~] # shutdown -r now

bash: shutdown: command not found

[root@knew:~] #

Yes, I should have used zpool import -o altroot=/import system so that everything was mounted outside the existing filesystems.
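To avoid repeating that mistake, one option is a tiny wrapper that refuses to build an import command unless an altroot is supplied. This is only a sketch: `import_cmd` is a hypothetical helper of my own, and it prints the command instead of running it. The `-N` and `-o altroot=` options are the same ones used earlier in this post.

```shell
#!/bin/sh
# Sketch only: import_cmd is a hypothetical helper.
# It prints a zpool import command, but only when a non-root altroot is given,
# so the pool's datasets cannot mount over the running system's filesystems.
import_cmd() {
    pool="$1"
    altroot="$2"
    case "${altroot}" in
        ""|"/")
            echo "refusing: altroot must be set and must not be /" >&2
            return 1
            ;;
    esac
    # -N imports without mounting anything, leaving time to fix properties.
    echo "zpool import -N -o altroot=${altroot} ${pool}"
}

import_cmd system /import
# prints: zpool import -N -o altroot=/import system
```

With `-N`, nothing mounts until you ask for it, which leaves room to inspect and correct mountpoint and canmount first.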

I reviewed the output of zfs get -r mountpoint system. I don’t list the results here, but there were a number of mountpoint and canmount adjustments to be made.

I think it took three changes to get them right.

But, we’re back up and running off a separate zroot zpool.

Faster boot times!

One side effect of booting from M/B attached drives (vs HBA attached): faster boot times. There is no delay while the HBAs enumerate the drives.

This is in addition to faster booting because we are booting from SSDs, not spinning drives.

Things to watch

Use the --dry-run option on rsync to see what might get copied.

Closely examine the proposed root directory of your new and old boot environments. Make sure you have everything.

Examine the mount points to make sure they are where they should be.

Go slowly & cautiously.

I think my whole process took about 4 hours.