In this post, I’m moving my zroot zpool from larger devices to smaller devices. Why? Well, the smaller devices happen to attach directly to the main board of my server, albeit with some slight modification to a fan cowl.

There have been several recent posts regarding this topic. I tried a different zpool approach, where I added new devices to the zpool and removed the old devices. That worked. It wasn’t ideal for me. It left the original zpool unbootable. That’s why I took this approach.

The original inspiration for the following is https://www.hagen-bauer.de/2025/08/zfs-migration-to-smaller-disk.html and I particularly like their use of labels on each partition.

In this post:

- FreeBSD 14.3

- mfsbsd-14.2-RELEASE-amd64

Change compression at the source

If you check one of my previous test runs, you’ll see that despite specifying compression=zstd on the newly created zpool, after the send | receive, the compression was lz4, the value at the source zpool.

The following fixes that.

Before:

[21:29 r730-01 dvl ~] % zfs get -t filesystem -r compression zroot

NAME PROPERTY VALUE SOURCE

zroot compression lz4 local

zroot/ROOT compression lz4 inherited from zroot

zroot/ROOT/default compression lz4 inherited from zroot

zroot/reserved compression lz4 inherited from zroot

zroot/tmp compression lz4 inherited from zroot

zroot/usr compression lz4 inherited from zroot

zroot/usr/home compression lz4 inherited from zroot

zroot/usr/ports compression lz4 inherited from zroot

zroot/usr/src compression lz4 inherited from zroot

zroot/var compression lz4 inherited from zroot

zroot/var/audit compression lz4 inherited from zroot

zroot/var/crash compression lz4 inherited from zroot

zroot/var/log compression lz4 inherited from zroot

zroot/var/mail compression lz4 inherited from zroot

zroot/var/tmp compression lz4 inherited from zroot

After:

[21:30 r730-01 dvl ~] % sudo zfs set compression=zstd zroot

[21:30 r730-01 dvl ~] % zfs get -t filesystem -r compression zroot

NAME PROPERTY VALUE SOURCE

zroot compression zstd local

zroot/ROOT compression zstd inherited from zroot

zroot/ROOT/default compression zstd inherited from zroot

zroot/reserved compression zstd inherited from zroot

zroot/tmp compression zstd inherited from zroot

zroot/usr compression zstd inherited from zroot

zroot/usr/home compression zstd inherited from zroot

zroot/usr/ports compression zstd inherited from zroot

zroot/usr/src compression zstd inherited from zroot

zroot/var compression zstd inherited from zroot

zroot/var/audit compression zstd inherited from zroot

zroot/var/crash compression zstd inherited from zroot

zroot/var/log compression zstd inherited from zroot

zroot/var/mail compression zstd inherited from zroot

zroot/var/tmp compression zstd inherited from zroot

[21:30 r730-01 dvl ~] %
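With many datasets, eyeballing the listing gets tedious. The following is a minimal sketch of a check that flags any dataset whose compression differs from the expected value. The helper name check_compression is made up for this example; it reads tab-separated name and value pairs on stdin, which is the shape `zfs get -H -o name,value` produces.

```sh
#!/bin/sh
# Report any dataset whose compression value differs from the expected one.
# Input: lines of "name<TAB>value", the shape produced by
#   zfs get -H -o name,value -t filesystem -r compression zroot
# check_compression is a hypothetical helper name, not part of ZFS.
check_compression() {
    expected="$1"
    awk -F '\t' -v want="$expected" '
        $2 != want { print $1 " has compression=" $2; bad = 1 }
        END { exit bad + 0 }
    '
}

# Sample input modelled on the "After" listing; no pool is touched here.
printf 'zroot\tzstd\nzroot/ROOT\tzstd\nzroot/tmp\tzstd\n' | check_compression zstd \
    && echo "all datasets use zstd"
```

On the host itself, the sample printf would be replaced by the real `zfs get -H -o name,value -t filesystem -r compression zroot` command. The function exits non-zero when it finds a mismatch, so it can gate a later step.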

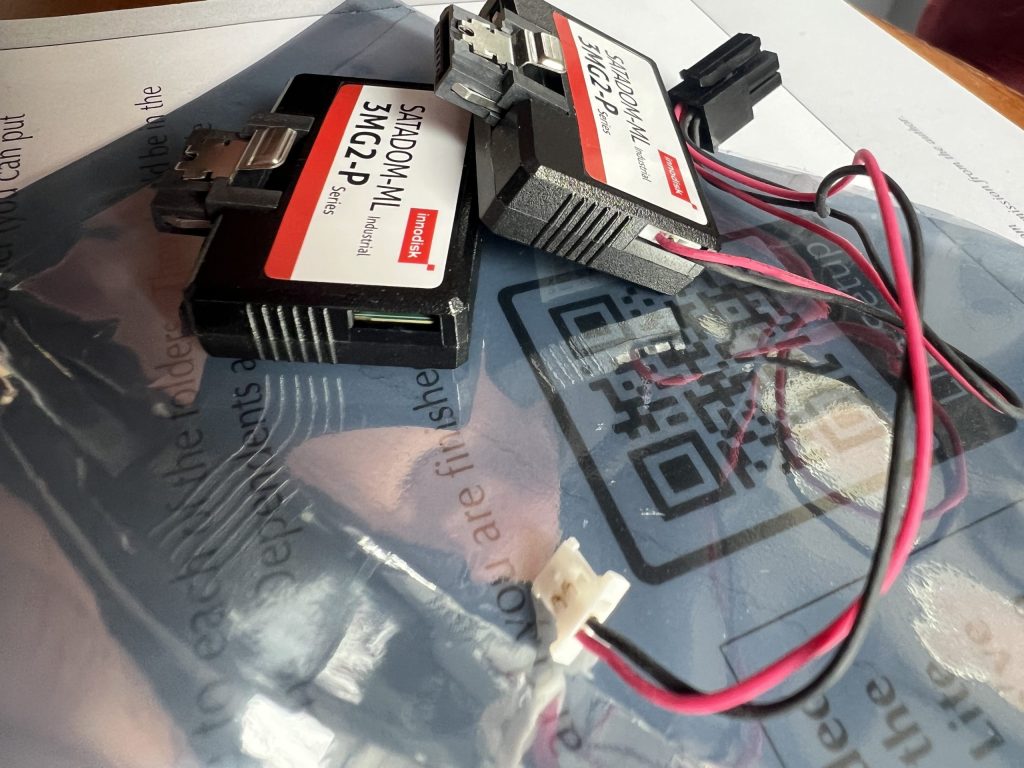

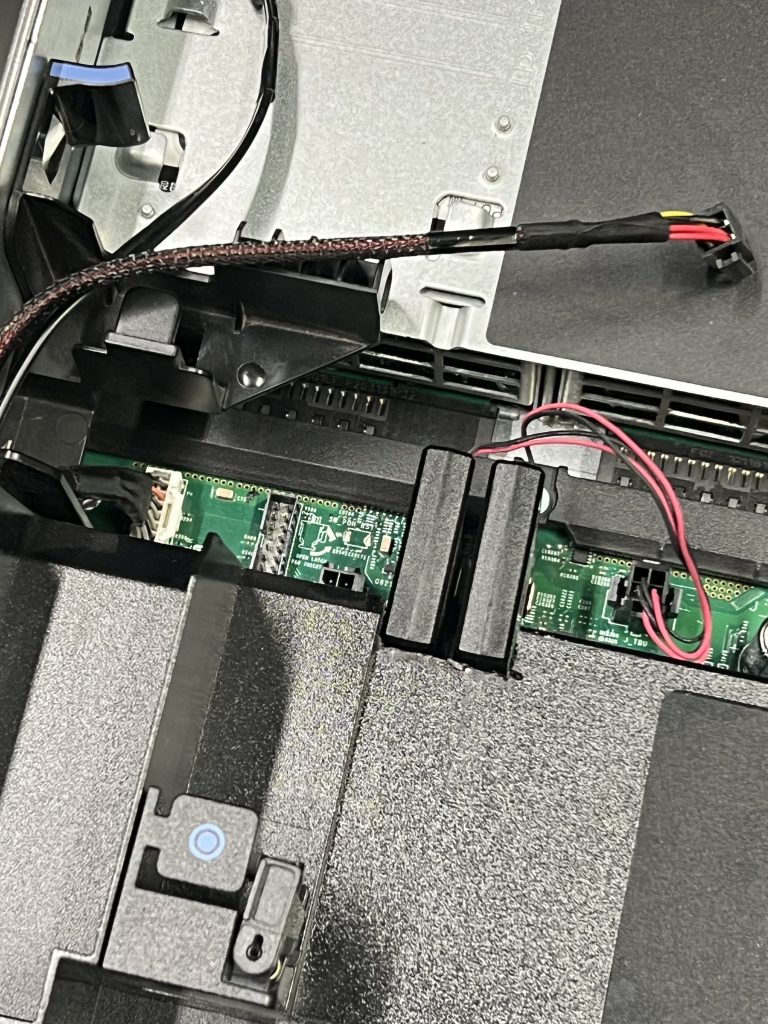

Installing the SATADOM devices

This is just for interest. The real work begins below.

The SATADOM devices have plugs – I’m glad of that. I didn’t notice that when I used the first pair. For this exercise, I have moved the SATADOMs I was using for testing to the production box. I’ll be completely overwriting them later. In their place, I added another pair I recently purchased.

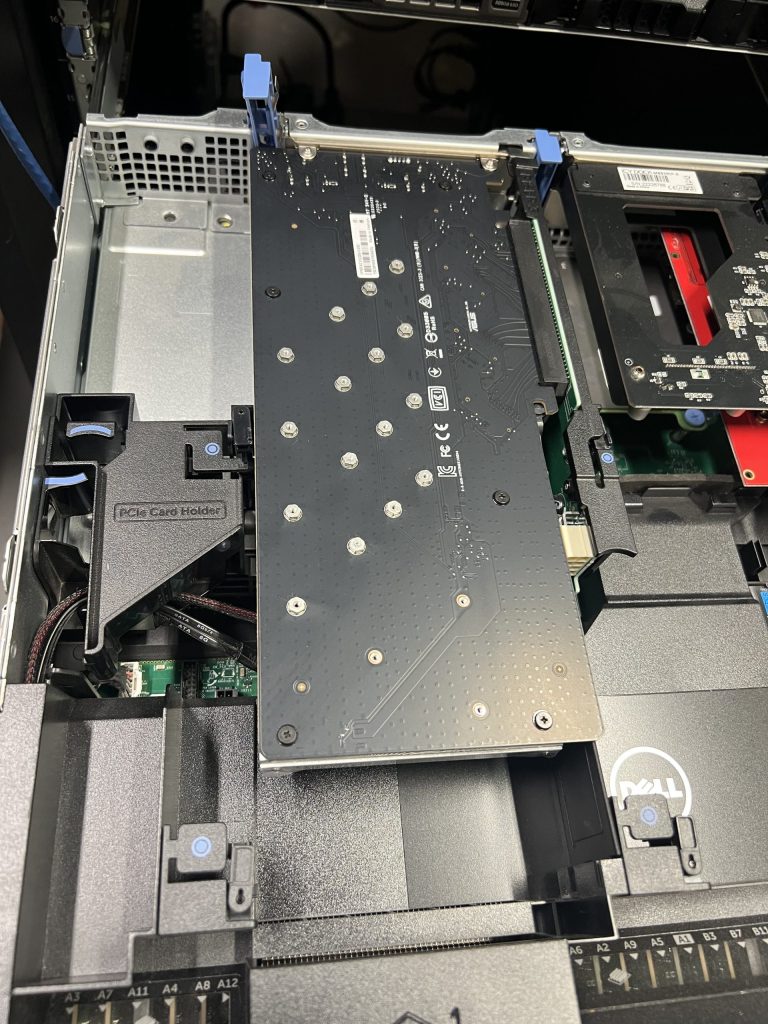

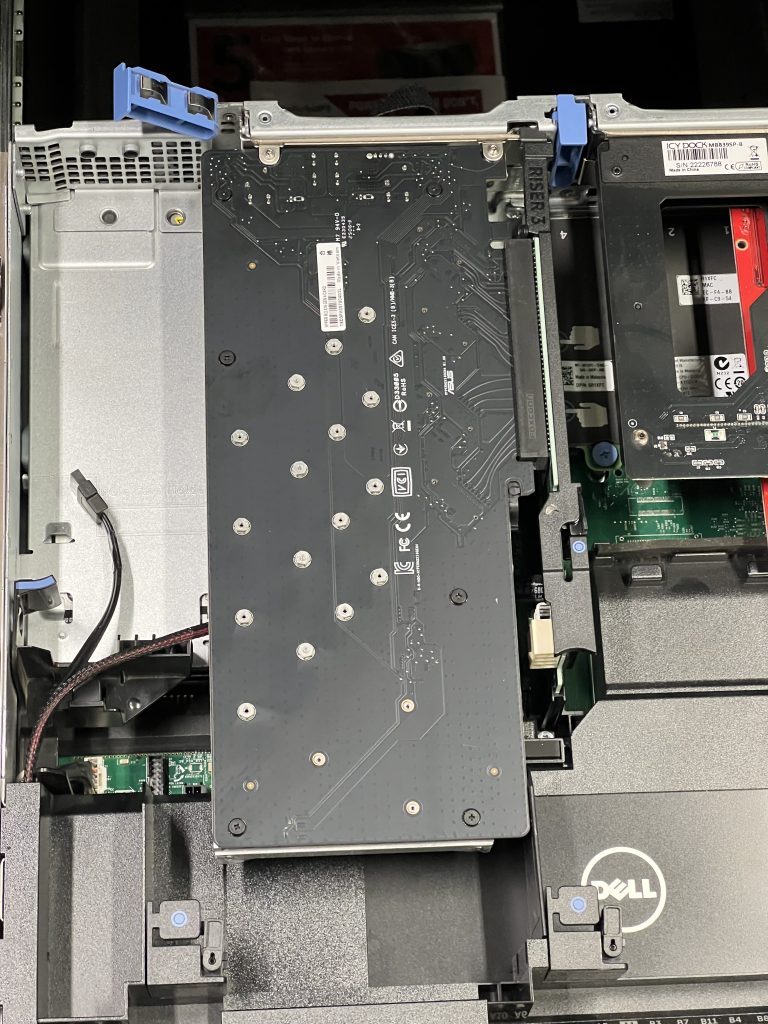

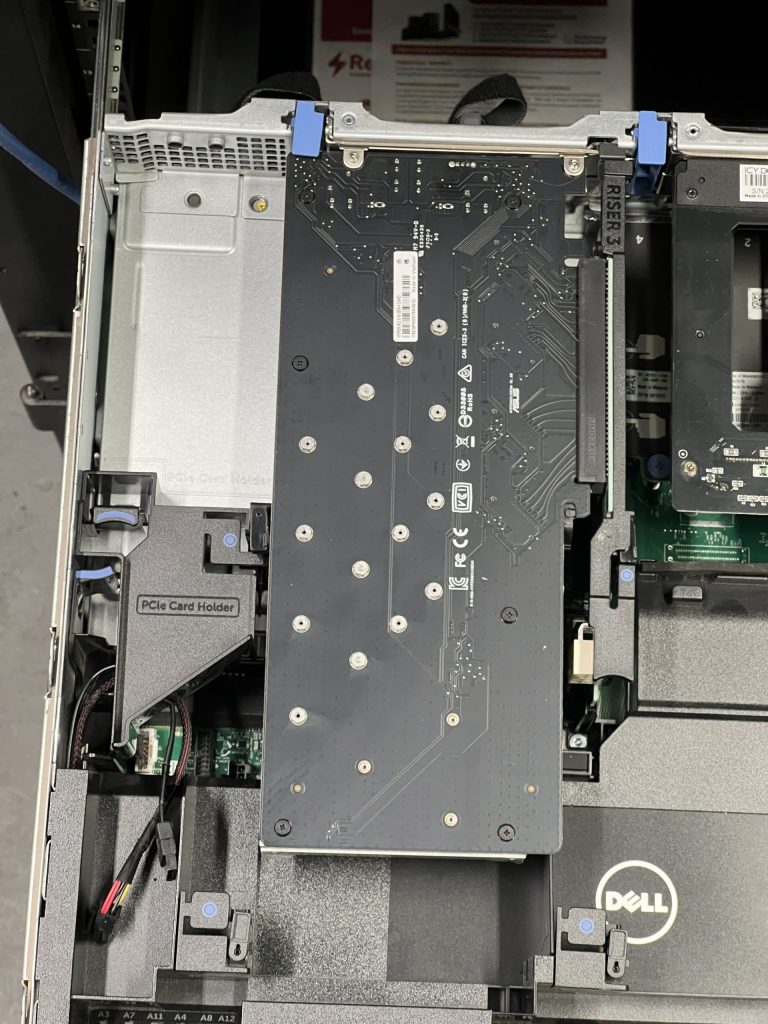

First step, remove this NVMe card I installed a while back.

The NVMe card has been removed.

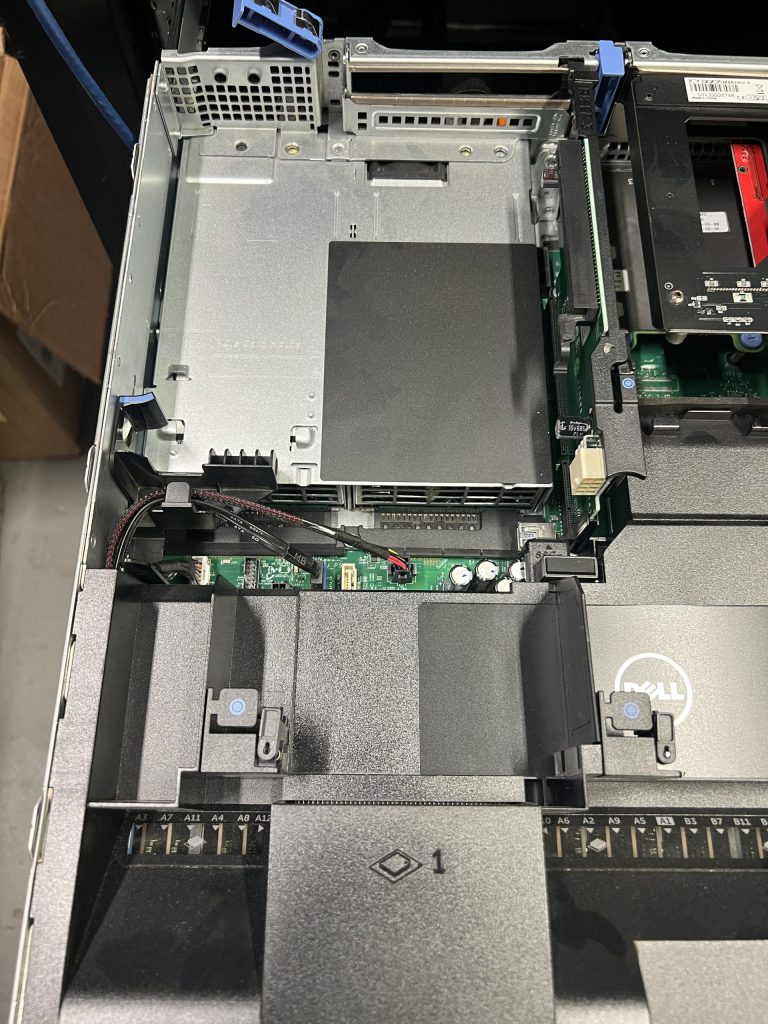

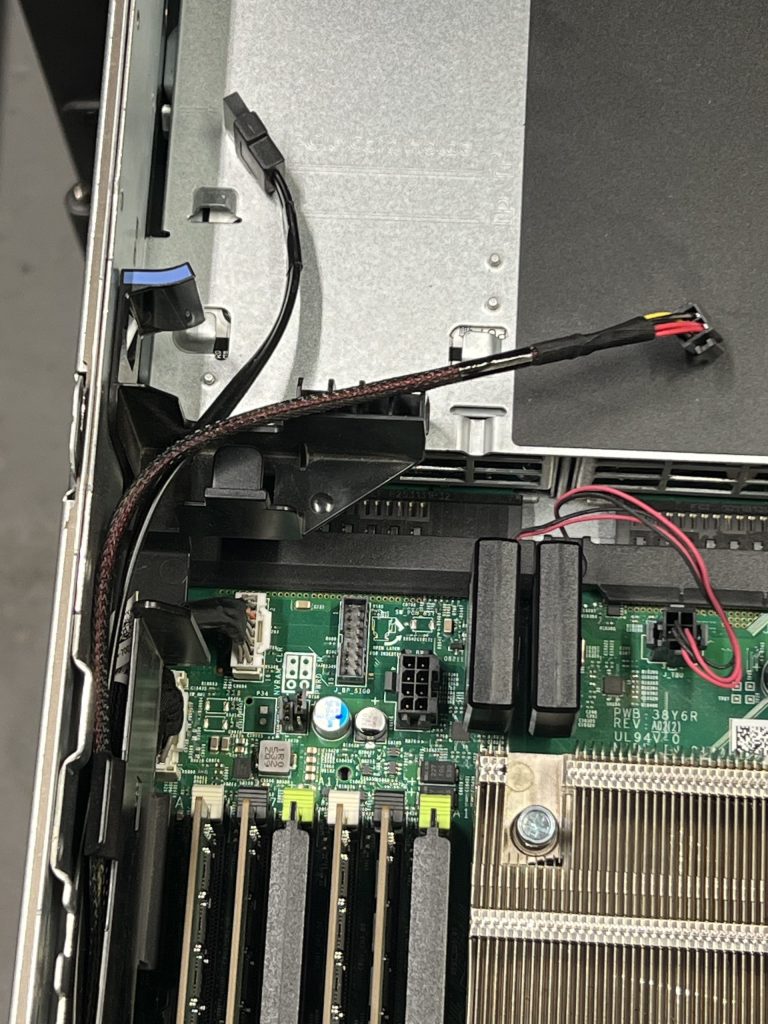

SATADOMs installed.

The fan cowl modification, courtesy of my Dewalt reciprocating tool.

The NVMe card has been reinstalled.

The PCIe Card Holder is back in place.

The existing zpool

This is the zroot for this host:

[16:02 r730-01 dvl ~] % zpool list zroot

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot 424G 33.1G 391G - - 15% 7% 1.00x ONLINE -

[16:46 r730-01 dvl ~] % zpool status zroot

pool: zroot

state: ONLINE

scan: scrub repaired 0B in 00:02:02 with 0 errors on Thu Nov 20 04:12:59 2025

config:

NAME STATE READ WRITE CKSUM

zroot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

da12p3 ONLINE 0 0 0

da0p3 ONLINE 0 0 0

errors: No known data errors

[16:46 r730-01 dvl ~] %

Notice how we have 500G drives and use only 33G – lots of free space.

The destination drives

These are the SATADOM drives I’m aiming for. These are the same ones used in recent posts.

ada0 at ahcich8 bus 0 scbus11 target 0 lun 0

ada0: ACS-2 ATA SATA 3.x device

ada0: Serial Number 20170718AA0000185556

ada0: 600.000MB/s transfers (SATA 3.x, UDMA6, PIO 1024bytes)

ada0: Command Queueing enabled

ada0: 118288MB (242255664 512 byte sectors)

ada1 at ahcich9 bus 0 scbus12 target 0 lun 0

ada1: ACS-2 ATA SATA 3.x device

ada1: Serial Number 20170719AA1178164201

ada1: 600.000MB/s transfers (SATA 3.x, UDMA6, PIO 1024bytes)

ada1: Command Queueing enabled

ada1: 118288MB (242255664 512 byte sectors)

They are sold as 128G devices. Photos are in r730-01: storage plan and smartctl here.

Clearing out the destination

My first step, delete the old partitions:

[16:50 r730-01 dvl ~] % gpart show ada0 ada1

=> 40 242255584 ada0 GPT (116G)

40 2008 - free - (1.0M)

2048 409600 1 efi (200M)

411648 16777216 2 freebsd-swap (8.0G)

17188864 225066760 3 freebsd-zfs (107G)

=> 40 242255584 ada1 GPT (116G)

40 2008 - free - (1.0M)

2048 409600 1 efi (200M)

411648 16777216 2 freebsd-swap (8.0G)

17188864 225066760 3 freebsd-zfs (107G)

[16:53 r730-01 dvl ~] %

Here are those destructive commands:

[16:53 r730-01 dvl ~] % sudo gpart destroy -F ada0

ada0 destroyed

[16:56 r730-01 dvl ~] % sudo gpart destroy -F ada1

ada1 destroyed

[16:56 r730-01 dvl ~] %

Preparing the destination drives

I’m following recent work.

root@r730-01:~ # gpart create -s gpt /dev/ada0

ada0 created

root@r730-01:~ # gpart create -s gpt /dev/ada1

ada1 created

root@r730-01:~ # gpart add -a 1M -s 200M -t efi -l efi0_20170718AA0000185556 /dev/ada0

ada0p1 added

root@r730-01:~ # gpart add -a 1M -s 200M -t efi -l efi1_20170719AA1178164201 /dev/ada1

ada1p1 added

root@r730-01:~ # newfs_msdos /dev/gpt/efi0_20170718AA0000185556

/dev/gpt/efi0_20170718AA0000185556: 409360 sectors in 25585 FAT16 clusters (8192 bytes/cluster)

BytesPerSec=512 SecPerClust=16 ResSectors=1 FATs=2 RootDirEnts=512 Media=0xf0 FATsecs=100 SecPerTrack=63 Heads=16 HiddenSecs=0 HugeSectors=409600

root@r730-01:~ # newfs_msdos /dev/gpt/efi1_20170719AA1178164201

/dev/gpt/efi1_20170719AA1178164201: 409360 sectors in 25585 FAT16 clusters (8192 bytes/cluster)

BytesPerSec=512 SecPerClust=16 ResSectors=1 FATs=2 RootDirEnts=512 Media=0xf0 FATsecs=100 SecPerTrack=63 Heads=16 HiddenSecs=0 HugeSectors=409600

root@r730-01:~ # gpart add -a 1m -s 8G -t freebsd-swap -l swap0 /dev/ada0

ada0p2 added

root@r730-01:~ # gpart add -a 1m -s 8G -t freebsd-swap -l swap1 /dev/ada1

ada1p2 added

root@r730-01:~ # gpart add -t freebsd-zfs -l zfs0_20170718AA0000185556 /dev/ada0

ada0p3 added

root@r730-01:~ # gpart add -t freebsd-zfs -l zfs1_20170719AA1178164201 /dev/ada1

ada1p3 added

root@r730-01:~ #
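The same five steps are repeated for each drive, with only the device name, the label index, and the serial number changing. Here is a minimal sketch that prints the commands for one disk so they can be reviewed before anything is run. The function name partition_plan is invented for this example, and it groups the commands per disk instead of per step as in the transcript above.

```sh
#!/bin/sh
# Print the partitioning commands for one destination disk.
# partition_plan is a hypothetical helper; it only echoes commands,
# so nothing changes until the output is reviewed and run by hand.
#   $1 = device name (e.g. ada0)
#   $2 = index used in the labels (0 or 1)
#   $3 = drive serial number, used to make the labels unique
partition_plan() {
    dev="$1"
    idx="$2"
    serial="$3"
    echo "gpart create -s gpt /dev/${dev}"
    echo "gpart add -a 1M -s 200M -t efi -l efi${idx}_${serial} /dev/${dev}"
    echo "newfs_msdos /dev/gpt/efi${idx}_${serial}"
    echo "gpart add -a 1m -s 8G -t freebsd-swap -l swap${idx} /dev/${dev}"
    echo "gpart add -t freebsd-zfs -l zfs${idx}_${serial} /dev/${dev}"
}

# The two SATADOMs from this post.
partition_plan ada0 0 20170718AA0000185556
partition_plan ada1 1 20170719AA1178164201
```

Printing rather than executing is a deliberate choice here: these commands are destructive, and a typo in the device name is easier to catch in a listing than after the fact.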

This is what the destination drives look like now:

root@r730-01:~ # gpart show ada0 ada1

=> 40 242255584 ada0 GPT (116G)

40 2008 - free - (1.0M)

2048 409600 1 efi (200M)

411648 16777216 2 freebsd-swap (8.0G)

17188864 225066760 3 freebsd-zfs (107G)

=> 40 242255584 ada1 GPT (116G)

40 2008 - free - (1.0M)

2048 409600 1 efi (200M)

411648 16777216 2 freebsd-swap (8.0G)

17188864 225066760 3 freebsd-zfs (107G)

root@r730-01:~ #

This is what they look like with labels showing:

root@r730-01:~ # gpart show -l ada0 ada1

=> 40 242255584 ada0 GPT (116G)

40 2008 - free - (1.0M)

2048 409600 1 efi0_20170718AA0000185556 (200M)

411648 16777216 2 swap0 (8.0G)

17188864 225066760 3 zfs0_20170718AA0000185556 (107G)

=> 40 242255584 ada1 GPT (116G)

40 2008 - free - (1.0M)

2048 409600 1 efi1_20170719AA1178164201 (200M)

411648 16777216 2 swap1 (8.0G)

17188864 225066760 3 zfs1_20170719AA1178164201 (107G)

root@r730-01:~ #
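The sizes in parentheses are derived from the sector counts, in binary units. As a small worked example, this sketch converts a count of 512-byte sectors to GiB; the function name sectors_to_gib is made up for illustration.

```sh
#!/bin/sh
# Convert a count of 512-byte sectors (as shown by gpart show) to GiB.
# sectors_to_gib is a hypothetical helper name.
sectors_to_gib() {
    awk -v s="$1" 'BEGIN { printf "%.1f\n", s * 512 / (1024 * 1024 * 1024) }'
}

sectors_to_gib 225066760   # the freebsd-zfs partition
sectors_to_gib 242255664   # the whole device, from the dmesg output
```

The first call gives 107.3, matching the 107G that gpart reports for the ZFS partition and that zpool list later reports for the new pool.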

New zpool creation

This is the command for easy copy/paste. A side benefit of using labels: the command is independent of device names.

zpool create -f -o altroot=/altroot -o cachefile=/tmp/zpool.cache -O mountpoint=none -O atime=off -O compression=zstd zroot_n mirror /dev/gpt/zfs0_20170718AA0000185556 /dev/gpt/zfs1_20170719AA1178164201

This is what I ran. Don’t you love that prompt?

root@r730-01:~ # zpool create -f \

[%F{cyan}%B%T%b%f %F{green}%B>%b%f] %F{magenta}%B -o altroot=/altroot \

[%F{cyan}%B%T%b%f %F{green}%B>%b%f] %F{magenta}%B -o cachefile=/tmp/zpool.cache \

[%F{cyan}%B%T%b%f %F{green}%B>%b%f] %F{magenta}%B -O mountpoint=none \

[%F{cyan}%B%T%b%f %F{green}%B>%b%f] %F{magenta}%B -O atime=off \

[%F{cyan}%B%T%b%f %F{green}%B>%b%f] %F{magenta}%B -O compression=zstd \

[%F{cyan}%B%T%b%f %F{green}%B>%b%f] %F{magenta}%B zroot_n mirror /dev/gpt/zfs0_20170718AA0000185556 /dev/gpt/zfs1_20170719AA1178164201

root@r730-01:~ #

root@r730-01:~ # zpool list zroot_n

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot_n 107G 432K 107G - - 0% 0% 1.00x ONLINE /altroot

root@r730-01:~ # zpool status zroot_n

pool: zroot_n

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

zroot_n ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/zfs0_20170718AA0000185556 ONLINE 0 0 0

gpt/zfs1_20170719AA1178164201 ONLINE 0 0 0

errors: No known data errors

root@r730-01:~ #

snapshot and replication

On my host, I’m going to shut down all the jails first. I’m not showing that command. Ideally, I could do this in single user mode. I’m not.

The snapshot:

root@r730-01:~ # zfs snapshot -r zroot@2delete-later-full2

The send | recv:

root@r730-01:~ # zfs send -Rv zroot@2delete-later-full2 | zfs receive -uFv zroot_n

full send of zroot@autosnap_2025-11-16_00:00:16_daily estimated size is 43.1K

send from @autosnap_2025-11-16_00:00:16_daily to zroot@autosnap_2025-11-17_00:00:04_daily estimated size is 624B

send from @autosnap_2025-11-17_00:00:04_daily to zroot@autosnap_2025-11-18_00:00:02_daily estimated size is 624B

send from @autosnap_2025-11-18_00:00:02_daily to zroot@autosnap_2025-11-19_00:00:08_daily estimated size is 624B

send from @autosnap_2025-11-19_00:00:08_daily to zroot@autosnap_2025-11-20_00:00:03_daily estimated size is 624B

send from @autosnap_2025-11-20_00:00:03_daily to zroot@autosnap_2025-11-21_00:00:11_daily estimated size is 624B

send from @autosnap_2025-11-21_00:00:11_daily to zroot@autosnap_2025-11-22_00:00:13_daily estimated size is 624B

send from @autosnap_2025-11-22_00:00:13_daily to zroot@autosnap_2025-11-23_00:00:01_daily estimated size is 624B

send from @autosnap_2025-11-23_00:00:01_daily to zroot@autosnap_2025-11-23_17:00:04_hourly estimated size is 624B

send from @autosnap_2025-11-23_17:00:04_hourly to zroot@autosnap_2025-11-23_18:00:04_hourly estimated size is 624B

...

received 312B stream in 0.10 seconds (3.19K/sec)

receiving incremental stream of zroot/var/mail@autosnap_2025-11-25_17:00:00_hourly into zroot_n/var/mail@autosnap_2025-11-25_17:00:00_hourly

received 312B stream in 0.10 seconds (3.07K/sec)

receiving incremental stream of zroot/var/mail@autosnap_2025-11-25_17:00:00_frequently into zroot_n/var/mail@autosnap_2025-11-25_17:00:00_frequently

received 312B stream in 0.07 seconds (4.53K/sec)

receiving incremental stream of zroot/var/mail@2delete-later-full2 into zroot_n/var/mail@2delete-later-full2

received 312B stream in 0.03 seconds (10.1K/sec)

root@r730-01:~ #

The full output is in this gist (you’ll need the raw view to see it all).
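With that much output, a missed dataset is easy to overlook. One way to double-check is to compare the snapshot lists of the two pools. This is a minimal sketch, assuming each list has been saved to a file, one snapshot name per line; the function name missing_on_destination and the file names in the comments are invented for this example.

```sh
#!/bin/sh
# Print snapshots that are in the source list but not in the destination list.
# Each file holds one snapshot name per line, as printed by e.g.
#   zfs list -H -t snapshot -o name -r zroot   > /tmp/src.txt
#   zfs list -H -t snapshot -o name -r zroot_n > /tmp/dst.txt
# The pool name is stripped from the front of each line so that
# zroot/var/mail@x and zroot_n/var/mail@x compare as equal.
# missing_on_destination is a hypothetical helper name.
missing_on_destination() {
    src_pool="$1"
    src_file="$2"
    dst_pool="$3"
    dst_file="$4"
    tmp_src=$(mktemp "${TMPDIR:-/tmp}/snapsrc.XXXXXX")
    tmp_dst=$(mktemp "${TMPDIR:-/tmp}/snapdst.XXXXXX")
    sed "s|^${src_pool}||" "$src_file" | LC_ALL=C sort > "$tmp_src"
    sed "s|^${dst_pool}||" "$dst_file" | LC_ALL=C sort > "$tmp_dst"
    # comm -23 keeps lines that appear only in the first (source) file.
    LC_ALL=C comm -23 "$tmp_src" "$tmp_dst"
    rm -f "$tmp_src" "$tmp_dst"
}

# Usage: missing_on_destination zroot /tmp/src.txt zroot_n /tmp/dst.txt
```

Empty output means every source snapshot has a counterpart on the destination. Names are printed without the pool prefix, so a missing zroot/var/mail@x shows up as /var/mail@x.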

See how the allocated amounts are so very similar:

root@r730-01:~ # zpool list zroot zroot_n

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot 424G 33.1G 391G - - 15% 7% 1.00x ONLINE -

zroot_n 107G 28.0G 79.0G - - 1% 26% 1.00x ONLINE /altroot

In fact, zroot_n is about 15% smaller (28.0G allocated versus 33.1G). I say that’s because I went from lz4 to zstd for compression.

I suspect that worked precisely because I set compression=zstd at the source before sending the data. A change to compression affects only newly written data, not data already on disk, and everything in zroot_n was newly written by the receive.
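For the record, the size difference can be worked out from the two ALLOC values in the zpool list output. A tiny sketch, with percent_smaller as a made-up helper name:

```sh
#!/bin/sh
# Percentage by which the allocated space shrank between two pools.
# percent_smaller is a hypothetical helper; both arguments use the same unit.
percent_smaller() {
    awk -v before="$1" -v after="$2" \
        'BEGIN { printf "%.1f\n", (before - after) / before * 100 }'
}

percent_smaller 33.1 28.0   # ALLOC of zroot, then of zroot_n, in G
```

This prints 15.4, that is (33.1 - 28.0) / 33.1 expressed as a percentage.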

root@r730-01:~ # zfs get compression zroot_n

NAME PROPERTY VALUE SOURCE

zroot_n compression zstd received

Mountpoint and bootfs

root@r730-01:~ # zfs set mountpoint=/ zroot_n/ROOT/default

root@r730-01:~ # zpool set bootfs=zroot_n/ROOT/default zroot_n

root@r730-01:~ #

Bootloader for uefi

I don’t know why the instructions had this mount command, but I’m blindly following along (bad advice).

root@r730-01:~ # zfs mount -a

root@r730-01:~ # mkdir -p /mnt/boot/efi

root@r730-01:~ # mount -t msdosfs /dev/gpt/efi0_20170718AA0000185556 /mnt/boot/efi

root@r730-01:~ # mkdir -p /mnt/boot/efi/EFI/BOOT

root@r730-01:~ # cp /boot/loader.efi /mnt/boot/efi/EFI/BOOT/BOOTX64.EFI

root@r730-01:~ # umount /mnt/boot/efi

root@r730-01:~ # mount -t msdosfs /dev/gpt/efi1_20170719AA1178164201 /mnt/boot/efi

root@r730-01:~ # mkdir -p /mnt/boot/efi/EFI/BOOT

root@r730-01:~ # cp /boot/loader.efi /mnt/boot/efi/EFI/BOOT/BOOTX64.EFI

root@r730-01:~ # umount /mnt/boot/efi

root@r730-01:~ #
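The mount, copy, umount sequence is identical for both EFI partitions, so it lends itself to a loop. This is a minimal sketch of that idea. The helpers run and install_loader and the DRY_RUN variable are invented for this example; by default the script only prints the commands it would run.

```sh
#!/bin/sh
# Print each command by default; execute it only when DRY_RUN=0.
# run and install_loader are hypothetical helpers for this sketch.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Copy the FreeBSD UEFI loader onto one EFI system partition.
#   $1 = GPT label of the EFI partition
#   $2 = temporary mount point (defaults to the one used in this post)
install_loader() {
    label="$1"
    mnt="${2:-/mnt/boot/efi}"
    run mkdir -p "$mnt"
    run mount -t msdosfs "/dev/gpt/$label" "$mnt"
    run mkdir -p "$mnt/EFI/BOOT"
    run cp /boot/loader.efi "$mnt/EFI/BOOT/BOOTX64.EFI"
    run umount "$mnt"
}

for label in efi0_20170718AA0000185556 efi1_20170719AA1178164201; do
    install_loader "$label"
done
```

Setting DRY_RUN=0 in the environment makes run execute the commands instead of printing them, which needs root. The mirror has two drives, and either may be the one the firmware picks, so both EFI partitions get the loader.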

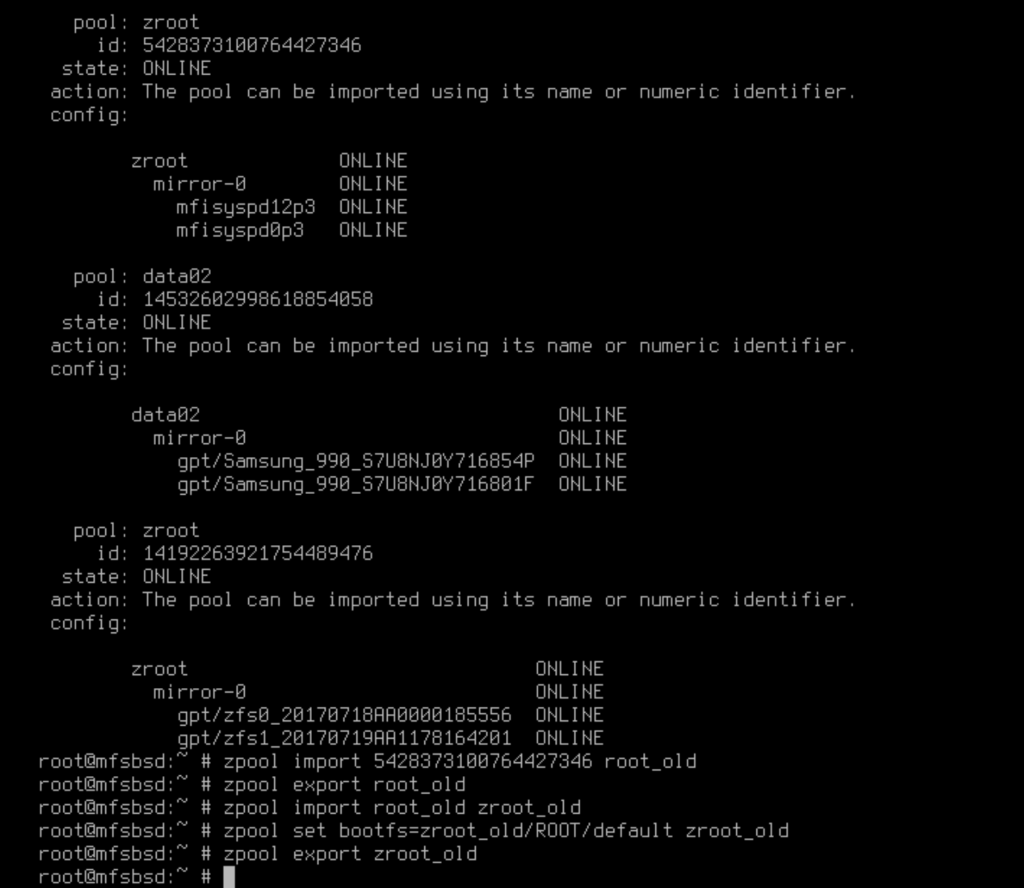

Rename the pool

It was here that I booted into mfsbsd. I deviated slightly from the original instructions by adding -f to the first import. I did this from the console, and the following is not a copy/paste, but it represents what I did.

root@mfsbsd:~ # zpool import -f zroot_n zroot

root@mfsbsd:~ # zpool export zroot

root@mfsbsd:~ # shutdown -r now

Next, I got rid of the existing zroot, just like last time. I can’t fully explain it, but even with setting my boot devices to the SATADOM SSDs, I still wound up booting from the wrong pool, I think.

This is a screen shot of the HTML console, because I didn’t get networking running. Typing that long zpool ID wasn’t fun, but I did get it right on the first try.

Getting back in

That worked, it booted into the SATADOMs:

[13:34 pro05 dvl ~] % r730

Last login: Tue Nov 25 17:01:32 2025 from pro05.startpoint.vpn.unixathome.org

[18:34 r730-01 dvl ~] % jls

JID IP Address Hostname Path

[18:34 r730-01 dvl ~] % zpool status zroot

pool: zroot

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

zroot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/zfs0_20170718AA0000185556 ONLINE 0 0 0

gpt/zfs1_20170719AA1178164201 ONLINE 0 0 0

[18:39 r730-01 dvl ~] % zpool list zroot

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot 107G 28.0G 79.0G - - 1% 26% 1.00x ONLINE -

Yes. Those are the new devices. I’ll just enable jails, and restart the box. I’ll closely watch the console for any odd things.

[18:40 r730-01 dvl ~] % sudo service jail enable

jail enabled in /etc/rc.conf

zpools not imported

After rebooting, I found my other zpools were not imported, so I did that manually, then restarted.

[18:52 r730-01 dvl ~] % zpool status

pool: zroot

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

zroot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/zfs0_20170718AA0000185556 ONLINE 0 0 0

gpt/zfs1_20170719AA1178164201 ONLINE 0 0 0

errors: No known data errors

[18:52 r730-01 dvl ~] % zpool import

cannot discover pools: permission denied

[18:52 r730-01 dvl ~] % sudo zpool import

pool: data02

id: 14532602998618854058

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

data02 ONLINE

mirror-0 ONLINE

gpt/Samsung_990_S7U8NJ0Y716854P ONLINE

gpt/Samsung_990_S7U8NJ0Y716801F ONLINE

pool: data01

id: 801222743406015344

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

data01 ONLINE

raidz2-0 ONLINE

gpt/Y7P0A022TEVE ONLINE

gpt/Y7P0A02ATEVE ONLINE

gpt/Y7P0A02DTEVE ONLINE

gpt/Y7P0A02GTEVE ONLINE

gpt/Y7P0A02LTEVE ONLINE

gpt/Y7P0A02MTEVE ONLINE

gpt/Y7P0A02QTEVE ONLINE

gpt/Y7P0A033TEVE ONLINE

pool: data03

id: 18080010574273476177

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

data03 ONLINE

mirror-0 ONLINE

gpt/WD_22492H800867 ONLINE

gpt/WD_230151801284 ONLINE

mirror-1 ONLINE

gpt/WD_230151801478 ONLINE

gpt/WD_230151800473 ONLINE

pool: zroot_old

id: 5428373100764427346

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

zroot_old ONLINE

mirror-0 ONLINE

gpt/BTWA602403NR480FGN ONLINE

gpt/BTWA602401SD480FGN ONLINE

[18:52 r730-01 dvl ~] % sudo zpool import data01

[18:52 r730-01 dvl ~] % sudo zpool import data02

[18:53 r730-01 dvl ~] % sudo zpool import data03

[18:53 r730-01 dvl ~] % sudo zpool import

pool: zroot_old

id: 5428373100764427346

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

zroot_old ONLINE

mirror-0 ONLINE

gpt/BTWA602403NR480FGN ONLINE

gpt/BTWA602401SD480FGN ONLINE
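Importing three pools by hand is manageable, but the pool names can also be pulled out of the `zpool import` listing. This is a minimal sketch, assuming the listing format shown above; the function name importable_pools is made up, and any names passed as arguments (here, the retired zroot_old) are skipped.

```sh
#!/bin/sh
# List pool names found in `zpool import` output read from stdin,
# skipping any names given as arguments.
# importable_pools is a hypothetical helper name.
importable_pools() {
    skip=" $* "
    awk -v skip="$skip" '
        $1 == "pool:" && index(skip, " " $2 " ") == 0 { print $2 }
    '
}

# Sample input modelled on the listing above; no pool is touched here.
printf '   pool: data02\n     id: 14532602998618854058\n   pool: zroot_old\n' \
    | importable_pools zroot_old
```

On the host, the names could be fed to a loop such as `sudo zpool import | importable_pools zroot_old | while read -r p; do sudo zpool import "$p"; done`. Plain `zpool import -a` would also work, but it imports every pool it finds, including zroot_old.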

The next time I logged in, I saw them all:

[18:56 r730-01 dvl ~] % zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

data01 5.81T 4.85T 985G - - 3% 83% 1.00x ONLINE -

data02 3.62T 703G 2.94T - - 32% 18% 1.00x ONLINE -

data03 7.25T 1.30T 5.95T - - 32% 17% 1.00x ONLINE -

zroot 107G 28.0G 79.0G - - 1% 26% 1.00x ONLINE -

There are many Nagios alerts, but they are all related to the host being offline for a while:

- snapshot age – nothing newer than 90 minutes old

- some files are more than an hour old – they are usually refreshed from the database, but the jail wasn’t running at the time of that periodic script

I suspect that within an hour, all will be clear.