This problem was difficult to figure out. The cause was simple, but not obvious.

Messages such as these were appearing in emails:

From July:

newsyslog: chmod(/var/log/sendmail.st.9) in change_attrs: No such file or directory

In August:

newsyslog: chmod(/var/log/auth.log.6.bz2) in change_attrs: No such file or directory

I had no idea why. My initial suspicion was the /etc/newsyslog.conf configuration for that file:

    [dan@knew:~] $ grep auth /etc/newsyslog.conf
    /var/log/auth.log    root:logcheck    640  7    *    @T00  JC
    [dan@knew:~] $

That count is 7, so why is it complaining about 6? The files look ok:

    [dan@knew:~] $ ls -l /var/log/auth.log*
    -rw-r-----  1 root  logcheck  943409 Aug 14 19:09 /var/log/auth.log
    -rw-r-----  1 root  logcheck   20036 Aug 14 00:00 /var/log/auth.log.0.bz2
    -rw-r-----  1 root  logcheck   21375 Aug 13 00:00 /var/log/auth.log.1.bz2
    -rw-r-----  1 root  logcheck   20002 Aug 12 00:00 /var/log/auth.log.2.bz2
    -rw-r-----  1 root  logcheck   21031 Aug 11 00:00 /var/log/auth.log.3.bz2
    -rw-r-----  1 root  logcheck   20636 Aug  4 00:00 /var/log/auth.log.4.bz2
    -rw-r-----  1 root  logcheck   20446 Aug  3 00:00 /var/log/auth.log.5.bz2
    -rw-r-----  1 root  logcheck   20370 Aug  2 00:00 /var/log/auth.log.6.bz2
    [dan@knew:~] $

NOTE: the above ls was run several days after I solved the problem.

Each time, I would look, find nothing, and go on with my day.

After some time, I had a clue: quota.

    $ zfs get quota zroot/var/log
    NAME           PROPERTY  VALUE  SOURCE
    zroot/var/log  quota     3.50G  local

Ahh, that’s got to be it. I bumped it up and the problem went away.
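To see how close a dataset is to its quota, the used and quota values can be compared directly. The following is a minimal sketch, not something from my original troubleshooting: `quota_pct` is a hypothetical helper name, and the commented commands assume `zfs get -Hp` prints exact byte counts for `used` and `quota`.

```shell
#!/bin/sh
# Sketch: report how full a dataset's quota is.
# quota_pct is a hypothetical helper, not part of ZFS.

# Print used space as an integer percentage of the quota.
# Both arguments are byte counts, as printed by `zfs get -Hp`.
quota_pct() {
    used=$1
    quota=$2
    case "$quota" in
        # No quota set (assumption: reported as 0, none, or -)
        0|none|-|"") echo "none"; return 0 ;;
    esac
    echo $(( used * 100 / quota ))
}

# Example against a live system (dataset name taken from this post):
#   used=$(zfs get -Hp -o value used zroot/var/log)
#   quota=$(zfs get -Hp -o value quota zroot/var/log)
#   echo "zroot/var/log is at $(quota_pct "$used" "$quota")% of its quota"
```

A result at or near 100 would have pointed at the quota much sooner than the newsyslog messages did.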

What was using all the space?

Now that I knew this was a space limitation, I looked around at what was taking up all the space. I found it quickly:

    [dan@knew:/var/log] $ ls -lt | head
    total 3512974
    -rw-r--r--  1 root  wheel     22638798824 Aug 10 17:15 netatalk.log
    -rw-r--r--  1 root  wheel           80481 Aug 10 17:15 snmpd.log
    -rw-r-----  1 root  logcheck        42713 Aug 10 17:15 maillog
    -rw-------  1 root  wheel            3635 Aug 10 17:15 cron
    -rw-r-----  1 root  logcheck       451826 Aug 10 17:12 auth.log
    -rw-r--r--  1 root  wheel           98945 Aug 10 16:20 utx.log
    -rw-r--r--  1 root  wheel             848 Aug 10 16:20 messages
    -rw-r--r--  1 root  wheel             591 Aug 10 16:20 utx.lastlogin
    -rw-------  1 root  wheel          369078 Aug 10 16:09 debug.log
    [dan@knew:/var/log] $ ls -lh netatalk.log
    -rw-r--r--  1 root  wheel    21G Aug 10 17:16 netatalk.log

Yes, that is 21G of logging. Compression for the win.
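The `ls -lt` above sorts by time, so the culprit only showed up first because it was still being written. A size-based listing is more direct. This is a rough sketch: `largest_files` is a hypothetical helper, and it assumes a `find` that supports `-maxdepth` (both the BSD and GNU versions do). It uses `du`, which reports on-disk size, and on a compressed dataset that is the number that counts against the quota.

```shell
#!/bin/sh
# Sketch: list the largest regular files directly inside a directory.
# largest_files is a hypothetical helper name.

# Usage: largest_files DIR [COUNT]
largest_files() {
    dir=$1
    count=${2:-5}
    # du -k prints the on-disk size in KiB followed by the path;
    # sort numerically in reverse so the largest file comes first.
    find "$dir" -maxdepth 1 -type f -exec du -k {} + | sort -rn | head -n "$count"
}

# Example:
#   largest_files /var/log 10
```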

Checking the configuration file:

    [dan@knew:~] $ head -4 /usr/local/etc/afp.conf
    [Global]
    vol preset = default_for_all_vol
    log file = /var/log/netatalk.log
    log level = default:maxdebug

I remembered enabling debug when I was trying to get my ZFS-based Time Capsule working again.

I waited for any running backups to complete, then changed the file to look like this:

    [Global]
    vol preset = default_for_all_vol
    log file = /var/log/netatalk.log
    log level = default:warn

Then I restarted afp.

I should also rotate that file.

Monitor, monitor, monitor

But wait, there’s more.

Why was I not monitoring quotas?

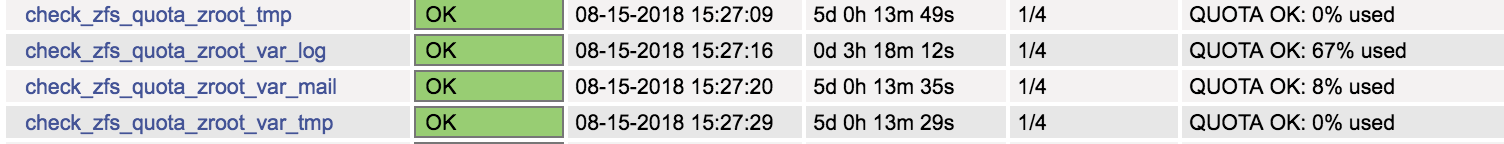

That led me to a script, check_zfs_quota from Claudiu Vasadi.

Now I am monitoring those quotas. The deployment of that script had some unintended side effects, not related to the script, which took FreshPorts offline for a few hours.

This is what that looks like in Nagios, for one host:

I think I would prefer a script which:

* detects filesystems containing quotas

* runs check_zfs_quota on each of those filesystems
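The detection half of that idea can be sketched as below. This is an illustration only, not the script I later wrote: `datasets_with_quota` is a hypothetical name, and it assumes tab-separated `name` and `value` columns such as `zfs get -Hp -o name,value quota` produces, with an unset quota shown as `0`, `none`, or `-`.

```shell
#!/bin/sh
# Sketch: pick out the datasets that have a quota set.
# datasets_with_quota is a hypothetical helper name.

# Reads "name<TAB>quota" lines on stdin and prints the name of
# every dataset whose quota is actually set.
datasets_with_quota() {
    awk -F '\t' '$2 != "0" && $2 != "none" && $2 != "-" && $2 != "" { print $1 }'
}

# Live usage (assumes the zfs command is on PATH):
#   zfs get -Hp -t filesystem -o name,value quota | datasets_with_quota |
#   while read -r fs; do
#       # Run check_zfs_quota for "$fs" here. Its arguments are not
#       # shown in this post, so check the script's own usage text.
#       echo "would check: $fs"
#   done
```

Keeping the filter separate from the `zfs` call makes it easy to try against saved output before pointing Nagios at it.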

I guess I’ll add that to my list of things to do.

EDIT: Done, and contributed back to check_zpool_scrub, but I am not using it in my Nagios configuration yet.