Yesterday, I added a new drive to the system. Today, I will replace the failing drive with it.

In this post:

- FreeBSD 14.1

Before

[18:23 r730-03 dvl ~] % zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

data01 32.7T 26.0T 6.76T - - 32% 79% 1.00x ONLINE -

zroot 412G 18.5G 393G - - 18% 4% 1.00x ONLINE -

[18:24 r730-03 dvl ~] % zpool status data01

pool: data01

state: ONLINE

scan: scrub repaired 0B in 1 days 10:31:52 with 0 errors on Sat Dec 21 16:06:52 2024

config:

NAME STATE READ WRITE CKSUM

data01 ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/HGST_8CJVT8YE ONLINE 0 0 0

gpt/SG_ZHZ16KEX ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gpt/SG_ZHZ03BAT ONLINE 0 0 0

gpt/HGST_8CJW1G4E ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gpt/SG_ZL2NJBT2 ONLINE 0 0 0

gpt/HGST_8CJR6GZE ONLINE 0 0 0

errors: No known data errors

[18:24 r730-03 dvl ~] % tail /var/log/messages

Dec 23 16:22:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Currently unreadable (pending) sectors

Dec 23 16:22:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Offline uncorrectable sectors

Dec 23 16:52:16 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Currently unreadable (pending) sectors

Dec 23 16:52:16 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Offline uncorrectable sectors

Dec 23 17:22:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Currently unreadable (pending) sectors

Dec 23 17:22:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Offline uncorrectable sectors

Dec 23 17:52:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Currently unreadable (pending) sectors

Dec 23 17:52:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Offline uncorrectable sectors

Dec 23 18:22:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Currently unreadable (pending) sectors

Dec 23 18:22:17 r730-03 smartd[15472]: Device: /dev/da6 [SAT], 18 Offline uncorrectable sectors

[18:25 r730-03 dvl ~] % grep da6 /var/run/dmesg.boot

da6 at mrsas0 bus 1 scbus1 target 7 lun 0

da6:Fixed Direct Access SPC-4 SCSI device

da6: Serial Number 8CJR6GZE

da6: 150.000MB/s transfers

da6: 11444224MB (2929721344 4096 byte sectors)

The drive I want to replace is da6, the old drive. It appears as gpt/HGST_8CJR6GZE in the zpool status output above. I know because 8CJR6GZE is its serial number.
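The lookup from device name to serial number can be scripted. This is only a sketch: serial_of is a hypothetical helper name, and it assumes the boot log contains a line of the form "da6: Serial Number ..." as in the grep output above.

```sh
#!/bin/sh
# serial_of DISK [LOGFILE]
# Hypothetical helper: print the serial number recorded for DISK in a
# dmesg-style boot log (default: /var/run/dmesg.boot).
serial_of() {
    disk="$1"
    log="${2:-/var/run/dmesg.boot}"
    # Anchor at the start of the line so that da0 does not also match ada0.
    sed -n "s/^${disk}: Serial Number \(.*\)\$/\1/p" "$log" | tail -n 1
}

# Example on the host itself:
#   serial_of da6
```

The serial can then be compared against the gpt/ labels in zpool status, since the labels in this pool embed the drive serial numbers.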

What’s the replacement drive?

These are the drives in the host:

[18:26 r730-03 dvl ~] % sysctl kern.disks

kern.disks: da7 da3 da2 da5 da1 da6 da4 da0 cd0 ada1 ada0

These are the partitions:

[18:27 r730-03 dvl ~] % gpart status

Name Status Components

ada0p1 OK ada0

ada0p2 OK ada0

ada0p3 OK ada0

ada1p1 OK ada1

ada1p2 OK ada1

ada1p3 OK ada1

da4p1 OK da4

da6p1 OK da6

da1p1 OK da1

da5p1 OK da5

da2p1 OK da2

da3p1 OK da3

So I bet da0 is the new drive. It appears in the kern.disks list, but it has no partitions in the gpart status output.

[18:27 r730-03 dvl ~] % gpart show da0

gpart: No such geom: da0.

[18:28 r730-03 dvl ~] % grep da0 /var/run/dmesg.boot

da0 at mrsas0 bus 1 scbus1 target 1 lun 0

da0:Fixed Direct Access SPC-4 SCSI device

da0: Serial Number 5PGGTH3D

da0: 150.000MB/s transfers

da0: 11444224MB (23437770752 512 byte sectors)

[18:28 r730-03 dvl ~] % grep ^da0 /var/run/dmesg.boot

da0 at mrsas0 bus 1 scbus1 target 1 lun 0

da0: Fixed Direct Access SPC-4 SCSI device

da0: Serial Number 5PGGTH3D

da0: 150.000MB/s transfers

da0: 11444224MB (23437770752 512 byte sectors)

[18:29 r730-03 dvl ~] %

The ^ in the second grep anchors the match to the start of the line, which avoids listing ada0, one of the boot drives.

That serial number is also not in the zpool status output.

Yes, that looks right.
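The comparison used to spot the new drive (present in kern.disks, absent from gpart status) can be sketched as a small shell function. unpartitioned_disks is a hypothetical name, and the awk field number assumes the three-column gpart status layout shown earlier. Any disk without a partition table is reported, so the result is a list of candidates, not a single answer; the serial number check is still what confirms the choice.

```sh
#!/bin/sh
# unpartitioned_disks "ALL DISKS" "PARTITIONED DISKS"
# Hypothetical helper: print each disk from the first list that does not
# appear in the second list.
unpartitioned_disks() {
    all_disks="$1"
    partitioned="$2"
    for d in $all_disks; do
        case " $partitioned " in
            *" $d "*) ;;                  # has at least one partition
            *) printf '%s\n' "$d" ;;      # no partitions found
        esac
    done
}

# Example on the live system (skips the gpart status header line):
#   unpartitioned_disks "$(sysctl -n kern.disks)" \
#       "$(gpart status | awk 'NR > 1 {print $3}' | sort -u | tr '\n' ' ')"
```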

Copy the partitioning

The old drive: 11444224MB (2929721344 4096 byte sectors)

The new drive: 11444224MB (23437770752 512 byte sectors)

[19:08 r730-03 dvl ~] % gpart show da6

=> 6 2929721333 da6 GPT (11T)

6 2929721325 1 freebsd-zfs (11T)

2929721331 8 - free - (32K)

Based on my earlier post, "Adding another pair of drives to a zpool mirror on FreeBSD" (that’s why I blog, so I don’t have to remember), I’m going to do this:

[19:08 r730-03 dvl ~] % gpart backup da6 | sudo gpart restore da0

gpart: start '6': Invalid argument

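I bet that’s because of the different sector sizes. The backup asks for a partition starting at sector 6, which is valid on the old drive with its 4096-byte sectors, but is probably too low an offset on a drive with 512-byte sectors.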

Instead, I will do this manually:

[19:08 r730-03 dvl ~] % sudo gpart create -s gpt da0

da0 created

[19:16 r730-03 dvl ~] % sudo gpart add -i 1 -t freebsd-zfs -a 4k -s 23437770600 da0

da0p1 added

[19:16 r730-03 dvl ~] % gpart show da0

=> 40 23437770672 da0 GPT (11T)

40 23437770600 1 freebsd-zfs (11T)

23437770640 72 - free - (36K)

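Where did that big number come from? I took 2929721325, the size of the partition on the old drive as shown by gpart show da6, and multiplied it by 8 (4096/512) to adjust for the different sector sizes: 2929721325 × 8 = 23437770600. (Multiplying the whole-drive figure, 2929721344, by 8 gives 23437770752, which is the total sector count of the new drive, not the partition size.)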
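The conversion can be checked with shell arithmetic. This is a minimal sketch, assuming the starting figure is the size of da6p1 (2929721325 sectors of 4096 bytes, from gpart show da6); the variable names are illustrative only.

```sh
#!/bin/sh
# Convert a partition size counted in 4096-byte sectors into the
# equivalent number of 512-byte sectors.
old_sectors=2929721325      # size of da6p1 in 4096-byte sectors
old_sector_size=4096
new_sector_size=512

new_sectors=$(( old_sectors * old_sector_size / new_sector_size ))
echo "$new_sectors"         # 23437770600

# With 512-byte sectors, 4k alignment means a multiple of 8 sectors.
# A remainder of 0 here means the size is 4k-aligned.
echo $(( new_sectors % 8 ))
```

The result matches the value passed to gpart add with -s.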

Let’s check that

Looking at the other drives, I see 23437770600 used on da4 and several other drives. I’m confident of this value.

[19:16 r730-03 dvl ~] % gpart show

=> 40 937703008 ada0 GPT (447G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 67108864 2 freebsd-swap (32G)

67110912 870590464 3 freebsd-zfs (415G)

937701376 1672 - free - (836K)

=> 40 937703008 ada1 GPT (447G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 67108864 2 freebsd-swap (32G)

67110912 870590464 3 freebsd-zfs (415G)

937701376 1672 - free - (836K)

=> 40 23437770672 da4 GPT (11T)

40 23437770600 1 freebsd-zfs (11T)

23437770640 72 - free - (36K)

=> 6 2929721333 da6 GPT (11T)

6 2929721325 1 freebsd-zfs (11T)

2929721331 8 - free - (32K)

=> 40 23437770672 da1 GPT (11T)

40 23437770600 1 freebsd-zfs (11T)

23437770640 72 - free - (36K)

=> 34 23437770685 da5 GPT (11T)

34 6 - free - (3.0K)

40 23437770600 1 freebsd-zfs (11T)

23437770640 79 - free - (40K)

=> 40 23437770672 da2 GPT (11T)

40 23437770600 1 freebsd-zfs (11T)

23437770640 72 - free - (36K)

=> 40 23437770672 da3 GPT (11T)

40 23437770600 1 freebsd-zfs (11T)

23437770640 72 - free - (36K)

=> 40 23437770672 da0 GPT (11T)

40 23437770600 1 freebsd-zfs (11T)

23437770640 72 - free - (36K)

Label

Let’s do the fancy labels, which I could have specified on the gpart add:

[19:36 r730-03 dvl ~] % sudo gpart modify -i 1 -l HGST_5PGGTH3D da0

da0p1 modified

[19:37 r730-03 dvl ~] % gpart show -l da0

=> 40 23437770672 da0 GPT (11T)

40 23437770600 1 HGST_5PGGTH3D (11T)

23437770640 72 - free - (36K)
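For next time, the size and the label can go into a single gpart add by using its -l option. The sketch below only prints the command so it can be reviewed before being run with sudo; gpart_add_cmd is a hypothetical helper name, and the values are the ones used in this post.

```sh
#!/bin/sh
# gpart_add_cmd DISK SECTORS LABEL
# Hypothetical helper: print (not run) a gpart command that creates a
# 4k-aligned freebsd-zfs partition of the given size with a GPT label.
gpart_add_cmd() {
    disk="$1"
    sectors="$2"
    label="$3"
    printf 'gpart add -i 1 -t freebsd-zfs -a 4k -s %s -l %s %s\n' \
        "$sectors" "$label" "$disk"
}

gpart_add_cmd da0 23437770600 HGST_5PGGTH3D
```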

zpool replace

Oh wait, that linked post describing what I did before is not actually a zpool replace. But another post, "Added new drive when existing one gave errors, then zpool replace", is.

First, let’s look in here:

[19:37 r730-03 dvl ~] % ls -l /dev/gpt

total 0

crw-r----- 1 root operator 0x18a 2024.12.23 19:37 HGST_5PGGTH3D

crw-r----- 1 root operator 0xa5 2024.12.22 21:20 HGST_8CJR6GZE

crw-r----- 1 root operator 0xab 2024.12.22 21:20 HGST_8CJVT8YE

crw-r----- 1 root operator 0xa4 2024.12.22 21:20 HGST_8CJW1G4E

crw-r----- 1 root operator 0xac 2024.12.22 21:20 SG_ZHZ03BAT

crw-r----- 1 root operator 0xa6 2024.12.22 21:20 SG_ZHZ16KEX

crw-r----- 1 root operator 0xaa 2024.12.22 21:20 SG_ZL2NJBT2

crw-r----- 1 root operator 0x8a 2024.12.22 21:20 gptboot0

crw-r----- 1 root operator 0x85 2024.12.22 21:20 gptboot1

So here I go with:

[19:37 r730-03 dvl ~] % sudo zpool replace data01 gpt/HGST_8CJR6GZE gpt/HGST_5PGGTH3D

The very long pause as that command is executed is always unnerving.

However, we see the expected updated status:

[19:38 r730-03 dvl ~] % zpool status data01

pool: data01

state: ONLINE

status: One or more devices is currently being resilvered. The pool will continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Mon Dec 23 19:38:16 2024

10.2G / 26.0T scanned at 328M/s, 0B / 26.0T issued

0B resilvered, 0.00% done, no estimated completion time

config:

NAME STATE READ WRITE CKSUM

data01 ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/HGST_8CJVT8YE ONLINE 0 0 0

gpt/SG_ZHZ16KEX ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gpt/SG_ZHZ03BAT ONLINE 0 0 0

gpt/HGST_8CJW1G4E ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gpt/SG_ZL2NJBT2 ONLINE 0 0 0

replacing-1 ONLINE 0 0 0

gpt/HGST_8CJR6GZE ONLINE 0 0 0

gpt/HGST_5PGGTH3D ONLINE 0 0 0

errors: No known data errors

Notice the last mirror, mirror-2: it now contains a replacing-1 entry holding both the old and the new drive. When the new drive is fully resilvered, the old one will be dropped from the zpool.

Perhaps it will be done soon. By 20:46 it was saying:

scan: resilver in progress since Mon Dec 23 19:38:16 2024

3.08T / 26.0T scanned at 841M/s, 0B / 23.8T issued

0B resilvered, 0.00% done, no estimated completion time

Tune in tomorrow. For now, I’m done.

23:31

scan: resilver in progress since Mon Dec 23 19:38:16 2024

4.01T / 26.0T scanned at 304M/s, 166G / 23.3T issued at 12.3M/s

172G resilvered, 0.70% done, 22 days 19:53:38 to go

00:46

scan: resilver in progress since Mon Dec 23 19:38:16 2024

4.01T / 26.0T scanned at 228M/s, 249G / 23.3T issued at 13.8M/s

257G resilvered, 1.04% done, 20 days 05:54:44 to go

19:34

scan: resilver in progress since Mon Dec 23 19:38:16 2024

25.7T / 26.0T scanned, 5.19T / 9.07T issued at 105M/s

5.22T resilvered, 57.21% done, 10:47:53 to go
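Checking progress by hand at odd hours works, but the percentage can also be pulled out of zpool status by a script. This is a sketch only: resilver_pct is a hypothetical helper, and it assumes the "N resilvered, X% done" wording seen in the samples in this post.

```sh
#!/bin/sh
# resilver_pct
# Hypothetical helper: read zpool status output on stdin and print the
# "% done" figure from the resilver progress line. Prints nothing when
# no resilver is in progress.
resilver_pct() {
    sed -n 's/.* resilvered, \([0-9.]*\)% done.*/\1/p' | head -n 1
}

# Example polling loop (needs a pool named data01, so it is commented out):
#   while pct=$(zpool status data01 | resilver_pct) && [ -n "$pct" ]; do
#       echo "$(date '+%H:%M') resilver ${pct}% done"
#       sleep 1800
#   done
```

The loop ends once the progress line disappears, which is what happens when the scan line changes to the completed "resilvered ... with 0 errors" form.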

2024-12-25

Success!

[13:17 r730-03 dvl ~] % zpool status data01

pool: data01

state: ONLINE

scan: resilvered 8.83T in 1 days 10:08:51 with 0 errors on Wed Dec 25 05:47:07 2024

config:

NAME STATE READ WRITE CKSUM

data01 ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/HGST_8CJVT8YE ONLINE 0 0 0

gpt/SG_ZHZ16KEX ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gpt/SG_ZHZ03BAT ONLINE 0 0 0

gpt/HGST_8CJW1G4E ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gpt/SG_ZL2NJBT2 ONLINE 0 0 0

gpt/HGST_5PGGTH3D ONLINE 0 0 0

errors: No known data errors

[13:17 r730-03 dvl ~] %

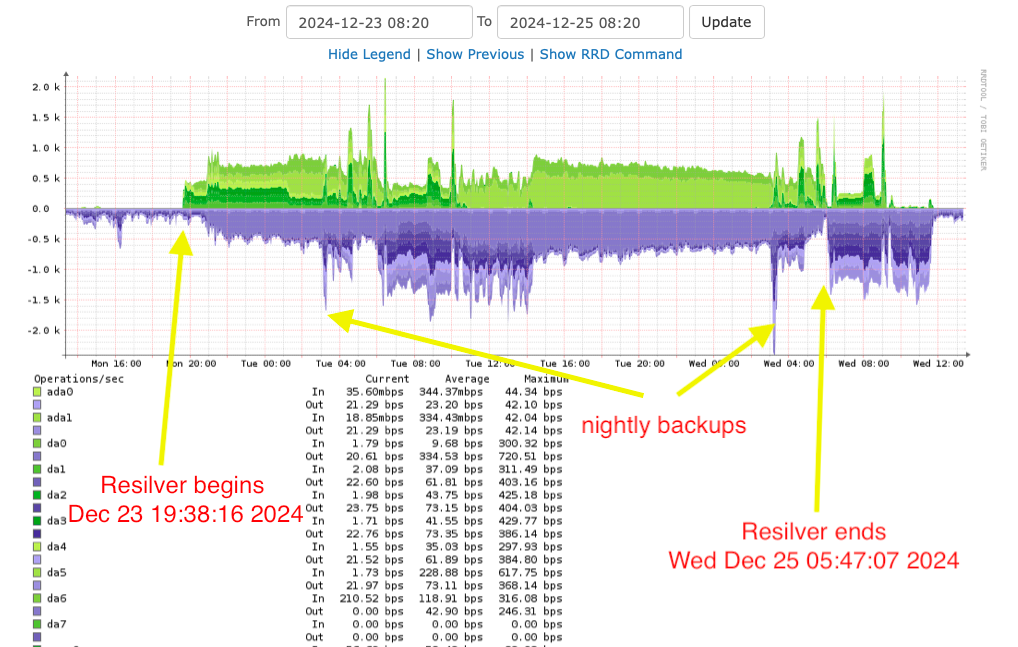

Here is the graph from LibreNMS showing the disk IO over a 48-hour period. Keep in mind that the system carried on with its regular workload during the resilver, including two sets of backups (Tuesday 0300 and Wednesday 0300).

Next, I want to write zeroes to the faulty drive and see if it recovers from that issue. Hmm, I might first do a read of every sector… Fun times.

Merry Christmas! Happy Hanukkah!