In this post

In this post, we have:

- FreeBSD 14.1-RELEASE-p5

- r730-03

- Lots of boring repetitive sections, so skip over that to find what you need

This article was written over a couple of days.

The zpool in question consists of three mirrored pairs of 12TB HDDs:

[13:44 r730-03 dvl ~/tmp] % zpool status data01

pool: data01

state: ONLINE

scan: scrub in progress since Fri Jan 17 05:29:21 2025

22.1T / 26.1T scanned at 200M/s, 19.2T / 25.9T issued at 173M/s

0B repaired, 74.06% done, 11:17:54 to go

config:

NAME STATE READ WRITE CKSUM

data01 ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/HGST_8CJVT8YE ONLINE 0 0 0

gpt/SG_ZHZ16KEX ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gpt/SG_ZHZ03BAT ONLINE 0 0 0

gpt/HGST_8CJW1G4E ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gpt/SG_ZL2NJBT2 ONLINE 0 0 0

gpt/HGST_5PGGTH3D ONLINE 0 0 0

errors: No known data errors

Yes, that’s a scrub going on….

Background

The other day during the ZFS users meeting, the number of snapshots was mentioned. I found about 61,000 snapshots on one host.

What I didn’t know was that 58,000 of those snapshots were on one filesystem:

[20:23 r730-03 dvl ~] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/HA.snapshots

[20:26 r730-03 dvl ~] % wc -l ~/tmp/HA.snapshots

57850 /home/dvl/tmp/HA.snapshots

How old are they?

[20:30 r730-03 dvl ~] % head -4 ~/tmp/HA.snapshots

NAME USED AVAIL REFER MOUNTPOINT

data01/snapshots/homeassistant-r730-01@autosnap_2023-05-01_00:00:10_monthly 6.34G - 20.8G -

data01/snapshots/homeassistant-r730-01@autosnap_2023-06-01_00:00:00_monthly 3.39G - 20.3G -

data01/snapshots/homeassistant-r730-01@autosnap_2023-07-01_00:00:08_monthly 2.89G - 19.2G -

[20:30 r730-03 dvl ~] % tail -4 ~/tmp/HA.snapshots

data01/snapshots/homeassistant-r730-01@autosnap_2025-01-17_19:30:07_frequently 88.6M - 18.0G -

data01/snapshots/homeassistant-r730-01@autosnap_2025-01-17_19:45:01_frequently 84.5M - 18.0G -

data01/snapshots/homeassistant-r730-01@autosnap_2025-01-17_20:00:04_hourly 0B - 18.0G -

data01/snapshots/homeassistant-r730-01@autosnap_2025-01-17_20:00:04_frequently 0B - 18.0G -

[20:30 r730-03 dvl ~] % grep -c autosnap ~/tmp/HA.snapshots

57849
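head and tail only show the two ends of the list. A per-month, per-type breakdown makes it easier to see where the 57,849 snapshots actually sit. This is a minimal sketch, not something run in this post; the function name count_by_month_type is hypothetical, and it assumes the sanoid naming seen above (autosnap_YYYY-MM-DD_HH:MM:SS_type).

```sh
# Summarize a snapshot listing by month and sanoid snapshot type.
# Reads `zfs list -t snapshot` output (or bare snapshot names) on stdin
# and prints lines such as "2023-05 monthly 1".
count_by_month_type() {
    awk '
        # keep only lines whose first field looks like dataset@autosnap_YYYY-MM-...
        $1 ~ /@autosnap_[0-9][0-9][0-9][0-9]-[0-9][0-9]-/ {
            snap = $1
            sub(/^.*@autosnap_/, "", snap)   # 2023-05-01_00:00:10_monthly
            month = substr(snap, 1, 7)       # 2023-05
            type = snap
            sub(/^.*_/, "", type)            # monthly
            count[month " " type]++
        }
        END {
            for (key in count) print key, count[key]
        }
    ' | LC_ALL=C sort
}
```

Usage would be along the lines of `count_by_month_type < ~/tmp/HA.snapshots`. The header line and any non-autosnap snapshots (such as syncoid ones) are skipped.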

I don’t need snapshots from May 2023… Let’s clean those up.

All of them are snapshots taken on the remote host (r730-01), not this host (r730-03).

Let’s look at what we have at the origin:

[20:37 r730-01 dvl ~] % zfs list -r -t snapshot data02/vm/hass NAME USED AVAIL REFER MOUNTPOINT data02/vm/hass@syncoid_r730-01.int.unixathome.org_2023-02-27:21:27:41-GMT00:00 16.0G - 16.2G - data02/vm/hass@autosnap_2024-10-01_00:00:05_monthly 4.86G - 11.9G - data02/vm/hass@autosnap_2024-11-01_00:00:05_monthly 7.64G - 17.4G - data02/vm/hass@autosnap_2024-12-01_00:00:10_monthly 3.13G - 14.0G - data02/vm/hass@autosnap_2024-12-14_00:00:18_daily 460M - 17.9G - data02/vm/hass@autosnap_2024-12-15_00:00:01_daily 274M - 18.0G - data02/vm/hass@autosnap_2024-12-16_00:00:08_daily 460M - 18.1G - data02/vm/hass@autosnap_2024-12-17_00:00:10_daily 245M - 16.3G - data02/vm/hass@autosnap_2024-12-18_00:00:09_daily 267M - 16.6G - data02/vm/hass@autosnap_2024-12-19_00:00:06_daily 342M - 16.7G - data02/vm/hass@autosnap_2024-12-20_00:00:11_daily 352M - 16.8G - data02/vm/hass@autosnap_2024-12-21_00:00:20_daily 357M - 16.9G - data02/vm/hass@autosnap_2024-12-22_00:00:06_daily 329M - 17.0G - data02/vm/hass@autosnap_2024-12-23_00:00:08_daily 570M - 17.1G - data02/vm/hass@autosnap_2024-12-24_00:00:18_daily 301M - 17.8G - data02/vm/hass@autosnap_2024-12-25_00:00:08_daily 295M - 17.9G - data02/vm/hass@autosnap_2024-12-26_00:00:13_daily 333M - 18.0G - data02/vm/hass@autosnap_2024-12-27_00:00:15_daily 332M - 18.1G - data02/vm/hass@autosnap_2024-12-28_00:00:12_daily 327M - 18.2G - data02/vm/hass@autosnap_2024-12-29_00:00:12_daily 314M - 18.2G - data02/vm/hass@autosnap_2024-12-30_00:00:11_daily 459M - 18.3G - data02/vm/hass@autosnap_2024-12-31_00:00:23_daily 328M - 17.0G - data02/vm/hass@autosnap_2025-01-01_00:00:06_monthly 0B - 17.2G - data02/vm/hass@autosnap_2025-01-01_00:00:06_daily 0B - 17.2G - data02/vm/hass@autosnap_2025-01-02_00:00:05_daily 324M - 17.3G - data02/vm/hass@autosnap_2025-01-03_00:00:09_daily 317M - 17.4G - data02/vm/hass@autosnap_2025-01-04_00:00:07_daily 319M - 17.6G - data02/vm/hass@autosnap_2025-01-05_00:00:15_daily 387M - 17.7G - 
data02/vm/hass@autosnap_2025-01-06_00:00:11_daily 469M - 17.8G - data02/vm/hass@autosnap_2025-01-07_02:00:11_daily 374M - 17.1G - data02/vm/hass@autosnap_2025-01-08_00:00:09_daily 363M - 17.2G - data02/vm/hass@autosnap_2025-01-09_00:00:11_daily 378M - 17.3G - data02/vm/hass@autosnap_2025-01-10_00:00:08_daily 377M - 17.4G - data02/vm/hass@autosnap_2025-01-11_14:45:05_daily 235M - 17.4G - data02/vm/hass@autosnap_2025-01-12_00:00:17_daily 336M - 17.5G - data02/vm/hass@autosnap_2025-01-13_00:00:10_daily 1.29G - 19.4G - data02/vm/hass@autosnap_2025-01-14_00:00:01_daily 309M - 17.6G - data02/vm/hass@autosnap_2025-01-15_00:00:20_daily 325M - 17.7G - data02/vm/hass@autosnap_2025-01-15_20:00:01_hourly 120M - 17.8G - data02/vm/hass@autosnap_2025-01-15_21:00:15_hourly 106M - 17.8G - data02/vm/hass@autosnap_2025-01-15_22:00:15_hourly 106M - 17.8G - data02/vm/hass@autosnap_2025-01-15_23:00:21_hourly 107M - 17.8G - data02/vm/hass@autosnap_2025-01-16_00:00:22_daily 0B - 17.8G - data02/vm/hass@autosnap_2025-01-16_00:00:22_hourly 0B - 17.8G - data02/vm/hass@autosnap_2025-01-16_01:00:15_hourly 99.0M - 17.8G - data02/vm/hass@autosnap_2025-01-16_02:00:04_hourly 99.3M - 17.8G - data02/vm/hass@autosnap_2025-01-16_03:00:05_hourly 107M - 17.8G - data02/vm/hass@autosnap_2025-01-16_04:00:01_hourly 111M - 17.8G - data02/vm/hass@autosnap_2025-01-16_05:00:14_hourly 117M - 17.8G - data02/vm/hass@autosnap_2025-01-16_06:00:03_hourly 105M - 17.8G - data02/vm/hass@autosnap_2025-01-16_07:00:09_hourly 104M - 17.8G - data02/vm/hass@autosnap_2025-01-16_08:00:07_hourly 106M - 17.8G - data02/vm/hass@autosnap_2025-01-16_09:00:19_hourly 98.0M - 17.8G - data02/vm/hass@autosnap_2025-01-16_10:00:14_hourly 99.0M - 17.8G - data02/vm/hass@autosnap_2025-01-16_11:00:02_hourly 109M - 17.8G - data02/vm/hass@autosnap_2025-01-16_12:00:12_hourly 101M - 17.8G - data02/vm/hass@autosnap_2025-01-16_13:00:06_hourly 102M - 17.8G - data02/vm/hass@autosnap_2025-01-16_14:00:02_hourly 109M - 17.8G - 
data02/vm/hass@autosnap_2025-01-16_15:00:10_hourly 111M - 17.8G - data02/vm/hass@autosnap_2025-01-16_16:00:07_hourly 110M - 17.8G - data02/vm/hass@autosnap_2025-01-16_17:00:05_hourly 111M - 17.9G - data02/vm/hass@autosnap_2025-01-16_18:00:13_hourly 113M - 17.9G - data02/vm/hass@autosnap_2025-01-16_19:00:12_hourly 105M - 17.9G - data02/vm/hass@autosnap_2025-01-16_20:00:01_hourly 97.9M - 17.9G - data02/vm/hass@autosnap_2025-01-16_21:00:15_hourly 97.8M - 17.9G - data02/vm/hass@autosnap_2025-01-16_22:00:04_hourly 98.9M - 17.9G - data02/vm/hass@autosnap_2025-01-16_23:00:11_hourly 109M - 17.9G - data02/vm/hass@autosnap_2025-01-17_00:00:08_daily 0B - 17.9G - data02/vm/hass@autosnap_2025-01-17_00:00:08_hourly 0B - 17.9G - data02/vm/hass@autosnap_2025-01-17_01:00:04_hourly 108M - 17.9G - data02/vm/hass@autosnap_2025-01-17_02:00:06_hourly 109M - 17.9G - data02/vm/hass@autosnap_2025-01-17_03:00:06_hourly 108M - 17.9G - data02/vm/hass@autosnap_2025-01-17_04:00:15_hourly 114M - 17.9G - data02/vm/hass@autosnap_2025-01-17_05:00:05_hourly 112M - 17.9G - data02/vm/hass@autosnap_2025-01-17_06:00:02_hourly 105M - 17.9G - data02/vm/hass@autosnap_2025-01-17_07:00:13_hourly 105M - 17.9G - data02/vm/hass@autosnap_2025-01-17_08:00:02_hourly 106M - 17.9G - data02/vm/hass@autosnap_2025-01-17_09:00:13_hourly 107M - 18.0G - data02/vm/hass@autosnap_2025-01-17_10:00:12_hourly 99.4M - 18.0G - data02/vm/hass@autosnap_2025-01-17_11:00:10_hourly 100M - 18.0G - data02/vm/hass@autosnap_2025-01-17_12:00:01_hourly 110M - 18.0G - data02/vm/hass@autosnap_2025-01-17_13:00:10_hourly 101M - 18.0G - data02/vm/hass@autosnap_2025-01-17_14:00:02_hourly 102M - 18.0G - data02/vm/hass@autosnap_2025-01-17_15:00:13_hourly 104M - 18.0G - data02/vm/hass@autosnap_2025-01-17_16:00:07_hourly 92.8M - 18.0G - data02/vm/hass@autosnap_2025-01-17_16:15:06_frequently 87.5M - 18.0G - data02/vm/hass@autosnap_2025-01-17_16:30:06_frequently 87.8M - 18.0G - data02/vm/hass@autosnap_2025-01-17_16:45:03_frequently 88.4M - 18.0G - 
data02/vm/hass@autosnap_2025-01-17_17:00:07_hourly 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_17:00:07_frequently 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_17:15:07_frequently 88.6M - 18.0G - data02/vm/hass@autosnap_2025-01-17_17:30:07_frequently 89.0M - 18.0G - data02/vm/hass@autosnap_2025-01-17_17:45:05_frequently 90.3M - 18.0G - data02/vm/hass@autosnap_2025-01-17_18:00:05_hourly 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_18:00:05_frequently 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_18:15:04_frequently 88.9M - 18.0G - data02/vm/hass@autosnap_2025-01-17_18:30:01_frequently 89.6M - 18.0G - data02/vm/hass@autosnap_2025-01-17_18:45:02_frequently 89.3M - 18.0G - data02/vm/hass@autosnap_2025-01-17_19:00:13_hourly 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_19:00:13_frequently 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_19:15:04_frequently 88.6M - 18.0G - data02/vm/hass@autosnap_2025-01-17_19:30:07_frequently 88.5M - 18.0G - data02/vm/hass@autosnap_2025-01-17_19:45:01_frequently 84.4M - 18.0G - data02/vm/hass@autosnap_2025-01-17_20:00:04_hourly 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_20:00:04_frequently 0B - 18.0G - data02/vm/hass@autosnap_2025-01-17_20:15:04_frequently 82.8M - 18.0G - data02/vm/hass@autosnap_2025-01-17_20:30:07_frequently 79.6M - 18.0G - [20:38 r730-01 dvl ~] %

OK, I’m deleting everything before 2024-10-01_00. I’ll start with all the non-monthly snapshots (daily, hourly, and frequently) that are not from 2025 or from months 10, 11, or 12 of 2024.

Just the names

[20:43 r730-03 dvl /usr/local/etc/cron.d] % cut -f 1 -w ~/tmp/HA.snapshots | grep -v '^NAME$' > ~/tmp/HA.snapshot.names

[20:43 r730-03 dvl /usr/local/etc/cron.d] % wc -l ~/tmp/HA.snapshot.names

57849 /home/dvl/tmp/HA.snapshot.names

Delete about 56000 snapshots

After some playing I did this:

[20:48 r730-03 dvl /usr/local/etc/cron.d] % egrep -e 'autosnap_202[3-4]' ~/tmp/HA.snapshot.names | grep -v 'monthly' | egrep -ve '2024-1[0-2]' | xargs -n 1 sudo zfs destroy

Then I read the manpage

From the manpage I read:

An inclusive range of snapshots may be specified by separating the first and last snapshots with a percent sign. The first and/or last snapshots may be left blank, in which case the filesystem’s oldest or newest snapshot will be implied.
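To make the range syntax concrete: the argument is the dataset, an @, the first snapshot name, a percent sign, and the last snapshot name. Here is a small sketch of a helper that builds that argument; range_spec is a hypothetical name, and either end may be passed as an empty string to get the open-ended forms the manpage describes.

```sh
# Build the argument for a ranged `zfs destroy`.
# $1 = dataset
# $2 = first snapshot name (empty means "oldest")
# $3 = last snapshot name  (empty means "newest")
range_spec() {
    printf '%s@%s%%%s\n' "$1" "$2" "$3"
}
```

For example, `zfs destroy -nv "$(range_spec data01/snapshots/homeassistant-r730-01 autosnap_2023-09-25_01:00:12_hourly autosnap_2023-09-30_23:45:03_frequently)"` gives a dry run over that range. With an empty first name, the result looks like `dataset@%last`, which reaches back to the oldest snapshot, so a dry run first is a good idea.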

I control-c’d my script and got a fresh list of snapshots. The latest one was data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_01:00:12_hourly and the one before the next monthly was data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_23:45:03_frequently.

Let’s try this:

[20:55 r730-03 dvl /usr/local/etc/cron.d] % zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_01:00:12_hourly%autosnap_2023-09-30_23:45:03_frequently

cannot destroy snapshots: permission denied

That took a long time to get back to me, and only to tell me I had left out sudo. So I tried what I meant, a dry run:

[20:57 r730-03 dvl /usr/local/etc/cron.d] % zfs destroy -nv data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_01:00:12_hourly%autosnap_2023-09-30_23:45:03_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_01:00:12_hourly would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_02:00:08_hourly would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_03:00:01_hourly would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_04:00:00_hourly would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-25_05:00:10_hourly ... would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_22:00:09_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_22:15:02_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_22:30:03_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_22:45:01_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_23:00:07_hourly would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_23:00:07_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_23:15:02_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_23:30:03_frequently would destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-09-30_23:45:03_frequently would reclaim 53.3G
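The dry-run output can run to thousands of lines, so a summary is handy before committing to the real thing. The following sketch counts the "would destroy" lines and picks up the "would reclaim" figure; it assumes the output format shown above, and summarize_dry_run is a hypothetical name.

```sh
# Summarize `zfs destroy -nv` output read from stdin:
# how many snapshots would go, and how much space would come back.
summarize_dry_run() {
    awk '
        /^would destroy / { n++ }
        /^would reclaim / { reclaim = $3 }
        END {
            if (reclaim == "") reclaim = "unknown"
            printf "%d snapshots, %s to reclaim\n", n, reclaim
        }
    '
}
```

Piping the dry run through it, as in `zfs destroy -nv dataset@first%last | summarize_dry_run`, gives one line to sanity-check against what you expected to delete.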

In other ssh sessions, I issued similar commands and waited:

[21:13 r730-03 dvl ~] % ps auwwx | grep destroy root 6311 0.0 0.0 20368 9844 1 I+ 21:08 0:00.01 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-10-01_00:00:02_daily%autosnap_2023-10-31_23:45:00_frequently root 8576 0.0 0.0 20368 9848 10 I+ 21:11 0:00.01 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-02-01_00:00:11_daily%autosnap_2024-02-29_23:45:02_frequently root 6312 0.0 0.0 20368 9840 2 Is 21:08 0:00.00 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-10-01_00:00:02_daily%autosnap_2023-10-31_23:45:00_frequently root 6313 0.0 0.4 528456 481940 2 I+ 21:08 0:49.43 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-10-01_00:00:02_daily%autosnap_2023-10-31_23:45:00_frequently root 6412 0.0 0.0 20368 9848 5 Is 21:09 0:00.00 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-11-01_00:00:13_daily%autosnap_2023-11-30_23:45:05_frequently root 6413 0.0 0.0 19528 8400 5 D+ 21:09 0:00.00 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-11-01_00:00:13_daily%autosnap_2023-11-30_23:45:05_frequently root 6900 0.0 0.0 20368 9844 6 I+ 21:10 0:00.01 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-12-01_00:00:03_daily%autosnap_2023-12-31_23:45:02_frequently root 6901 0.0 0.0 20368 9840 7 Is 21:10 0:00.00 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-12-01_00:00:03_daily%autosnap_2023-12-31_23:45:02_frequently root 6902 0.0 0.0 19528 8396 7 D+ 21:10 0:00.00 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-12-01_00:00:03_daily%autosnap_2023-12-31_23:45:02_frequently root 6411 0.0 0.0 20368 9852 3 I+ 21:09 0:00.01 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2023-11-01_00:00:13_daily%autosnap_2023-11-30_23:45:05_frequently root 8462 0.0 0.0 20368 9856 8 I+ 21:10 0:00.01 sudo zfs destroy 
data01/snapshots/homeassistant-r730-01@autosnap_2024-01-01_00:00:19_daily%autosnap_2024-01-31_23:45:01_frequently root 8463 0.0 0.0 20368 9852 9 Is 21:10 0:00.00 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-01-01_00:00:19_daily%autosnap_2024-01-31_23:45:01_frequently root 8464 0.0 0.0 19528 8408 9 D+ 21:10 0:00.00 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-01-01_00:00:19_daily%autosnap_2024-01-31_23:45:01_frequently root 8577 0.0 0.0 20368 9844 11 Is 21:11 0:00.00 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-02-01_00:00:11_daily%autosnap_2024-02-29_23:45:02_frequently root 8578 0.0 0.0 19528 8396 11 D+ 21:11 0:00.00 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-02-01_00:00:11_daily%autosnap_2024-02-29_23:45:02_frequently root 8676 0.0 0.0 20368 9848 12 I+ 21:13 0:00.01 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-03-01_00:00:03_daily%autosnap_2024-08-31_23:45:05_frequently root 8677 0.0 0.0 20368 9844 13 Is 21:13 0:00.00 sudo zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-03-01_00:00:03_daily%autosnap_2024-08-31_23:45:05_frequently root 8678 0.0 0.0 19528 8396 13 D+ 21:13 0:00.00 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-03-01_00:00:03_daily%autosnap_2024-08-31_23:45:05_frequently dvl 8758 0.0 0.0 12808 2380 14 S+ 21:13 0:00.00 grep destroy

I wanted to keep some monthly snapshots, then I gave up and went for a much larger range: 2024-03-01 to 2024-08-31.
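Keeping the monthly snapshots while still using ranges means one range per run of non-monthly snapshots. That can be generated from the list instead of typed by hand. This is only a sketch: ranges_between_monthlies is a hypothetical name, it expects the snapshots of a single dataset, and it assumes the input is in the order zfs list printed it, which I take to be creation order (the order a range follows). Check the result with -nv before trusting it.

```sh
# Read snapshot names (dataset@snapname) of ONE dataset on stdin,
# in the order `zfs list` printed them.
# Print one destroy argument per run of consecutive non-monthly
# snapshots, so that no range spans a monthly snapshot.
ranges_between_monthlies() {
    awk '
        function emit(   parts) {
            if (first == "") return
            if (first == last) {
                print first                  # single snapshot, no range needed
            } else {
                split(last, parts, "@")
                print first "%" parts[2]     # dataset@first%last
            }
            first = ""; last = ""
        }
        $1 !~ /@/        { next }            # skip the NAME header line
        $1 ~ /_monthly$/ { emit(); next }    # a monthly ends the current run
        { if (first == "") first = $1; last = $1 }
        END { emit() }
    '
}
```

Each output line can then be handed to `zfs destroy -nv` one at a time, rather than in parallel.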

iostat

Did you know iostat will prompt you for drives? Well, it did in my zsh shell. In the following command, I pressed CTL-D after typing 10:

[21:16 r730-03 dvl ~] % sudo iostat -w 2 -c 10 ada0 ada1 cd0 da0 da1 da2 da3 da4 da5 da6

Which means you get to see this:

[21:16 r730-03 dvl ~] % sudo iostat -w 2 -c 10 da0 da1 da2 da4 da4 da5 da6

tty da0 da1 da2 da4 da5 da6 cpu

tin tout KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s us ni sy in id

0 37 134 135 17.72 140 133 18.17 128 151 18.90 140 126 17.21 129 144 18.11 15.4 0 0.00 0 0 1 0 99

1 392 0.0 0 0.00 4.5 23 0.10 4.6 19 0.09 5.0 31 0.15 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 123 0.0 0 0.00 4.6 32 0.14 4.4 26 0.11 4.6 27 0.12 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 136 0.0 0 0.00 5.2 25 0.12 4.5 23 0.10 4.8 39 0.18 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 130 0.0 0 0.00 4.5 30 0.13 4.6 32 0.14 4.8 28 0.13 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 129 0.0 0 0.00 4.8 21 0.10 4.8 20 0.09 5.0 29 0.14 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 131 0.0 0 0.00 4.3 15 0.06 5.0 23 0.11 4.8 34 0.16 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 128 0.0 0 0.00 5.5 14 0.08 5.3 22 0.12 4.7 34 0.15 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 133 0.0 0 0.00 4.6 16 0.07 4.6 14 0.06 5.3 22 0.12 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

0 130 128 1 0.13 4.1 31 0.12 4.5 39 0.17 14.2 33 0.46 0.0 0 0.00 0.0 0 0.00 0 0 1 0 99

Running so many destroys….

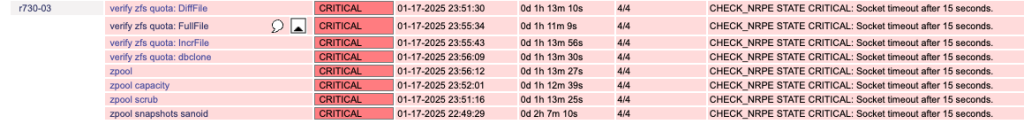

Those 12 concurrent destroys, each acting upon the same filesystem, ran for several hours. The system became unusable. Monitoring checks failed:

It wasn’t until about 01:42 (about 2.5 hours later) that the checks went green.

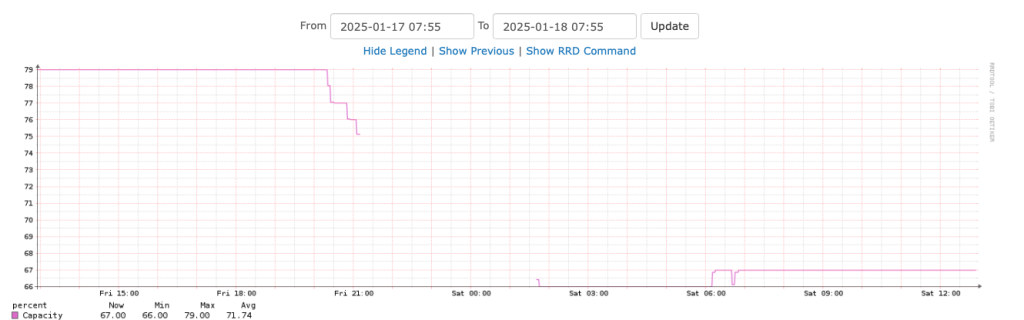

I’m also pleased that the pool capacity went from 79% down to 67%. The gap in the graph is because monitoring could not get data from the filesystem during the multiple concurrent destroys.

So how many are left?

We still have 16,000 snapshots left.

[12:54 r730-03 dvl ~/tmp] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[12:54 r730-03 dvl ~/tmp] % wc -l ~/tmp/now

16695 /home/dvl/tmp/now

Let’s try one more range delete

I decided to try one more range delete, that is: delete everything between this and that. I also timed it and captured the list of deleted snapshots.

[13:04 r730-03 dvl ~] % time sudo zfs destroy -v data01/snapshots/homeassistant-r730-01@autosnap_2024-09-01_00:00:19_daily%autosnap_2024-09-30_23:45:03_frequently will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-01_00:00:19_daily will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-01_00:00:19_hourly will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-01_00:00:19_frequently will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-01_00:15:06_frequently ... will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-30_23:00:07_frequently will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-30_23:15:05_frequently will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-30_23:30:02_frequently will destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-09-30_23:45:03_frequently will reclaim 339G sudo zfs destroy -v 0.03s user 0.04s system 0% cpu 38:00.89 total

I haven’t listed all the output here. However, I did get a count: 3630 snapshots. That’s not trivial.

It took 38 minutes. Again, the system became unusable, and there were more gaps in the monitoring.

When running this command, I could not get sysutils/btop to display. However, iostat ran just fine. Filesystem commands such as zfs list did not run. Indeed, once the command was entered, I could not CTL-C out of it. CTL-T did work, as shown here:

[13:07 r730-03 dvl ~] % zfs list

load: 1.12 cmd: zfs 52910 [rrl->rr_cv] 2.63r 0.00u 0.00s 0% 8432k

load: 1.12 cmd: zfs 52910 [rrl->rr_cv] 3.78r 0.00u 0.00s 0% 8432k

load: 1.12 cmd: zfs 52910 [rrl->rr_cv] 3.97r 0.00u 0.00s 0% 8432k

load: 1.12 cmd: zfs 52910 [rrl->rr_cv] 4.43r 0.00u 0.00s 0% 8432k

load: 2.51 cmd: zfs 52910 [rrl->rr_cv] 538.48r 0.00u 0.00s 0% 8432k

Let’s try one at a time

Let’s go back to my long-time approach: delete one at a time with xargs.

In this test, I’m going to delete snapshots from Oct 2024.

[13:43 r730-03 dvl ~] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[13:49 r730-03 dvl ~] % grep autosnap_2024-10 ~/tmp/now | cut -f 1 -w | grep -v monthly | wc -l

3751

[13:50 r730-03 dvl ~] % grep autosnap_2024-10 ~/tmp/now | cut -f 1 -w | grep -v monthly | head

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:00:05_daily

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:00:05_hourly

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:00:05_frequently

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:15:04_frequently

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:30:02_frequently

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:45:01_frequently

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:00:02_hourly

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:00:02_frequently

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:15:05_frequently

data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:30:04_frequently

A short test to validate:

[13:51 r730-03 dvl ~] % grep autosnap_2024-10 ~/tmp/now | cut -f 1 -w | grep -v monthly | head | xargs -n 1 echo sudo destroy sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:00:05_daily sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:00:05_hourly sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:00:05_frequently sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:15:04_frequently sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:30:02_frequently sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_00:45:01_frequently sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:00:02_hourly sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:00:02_frequently sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:15:05_frequently sudo destroy data01/snapshots/homeassistant-r730-01@autosnap_2024-10-01_01:30:04_frequently

And here we go, adding time, removing head, and adding in the zfs command.

[13:52 r730-03 dvl ~] % time grep autosnap_2024-10 ~/tmp/now | cut -f 1 -w | grep -v monthly | xargs -n 1 sudo zfs destroy

grep autosnap_2024-10 ~/tmp/now 0.01s user 0.00s system 0% cpu 1:39:34.11 total

cut -f 1 -w 0.01s user 0.00s system 0% cpu 2:22:20.67 total

grep -v monthly 0.00s user 0.00s system 0% cpu 3:05:17.08 total

xargs -n 1 sudo zfs destroy 26.03s user 22.66s system 0% cpu 3:39:04.70 total

[17:31 r730-03 dvl ~] %

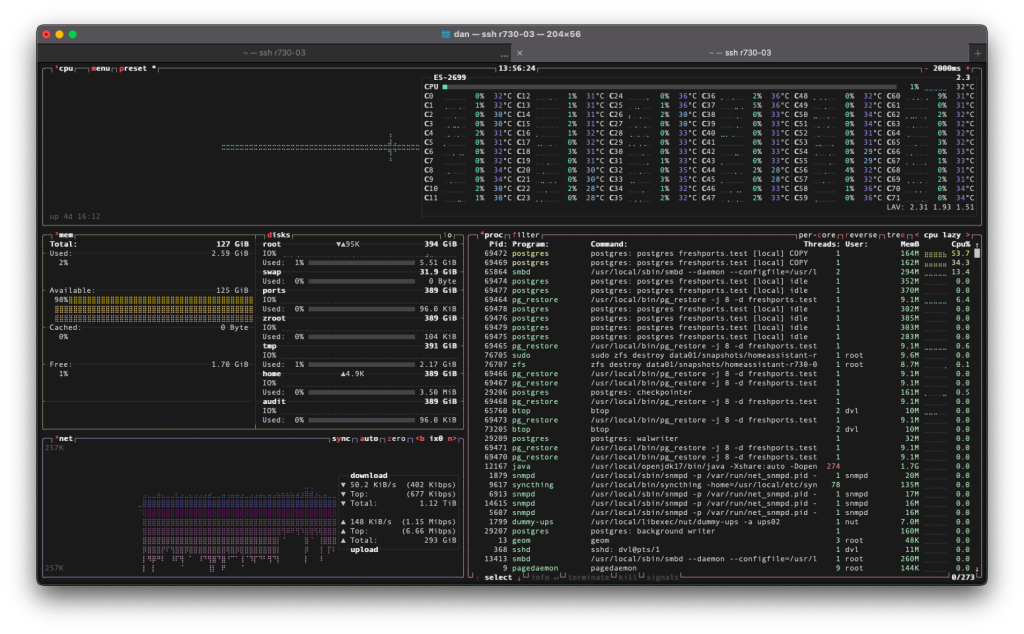

That took about 3.5 hours; however, the system was quite usable. And there are many more snapshots to delete.
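xargs -n 1 gives no progress report and no tally of failures. A plain loop can add both, plus an optional pause between destroys. This is a sketch written for sh (not zsh, which does not word-split unquoted variables by default). The function name and the DESTROY and PAUSE variables are hypothetical, DESTROY defaults to a harmless echo, and the pause is an assumption of mine, not something measured in this post.

```sh
# Destroy snapshots one at a time, reading names from stdin.
# DESTROY: command to run per snapshot (default: a harmless echo).
# PAUSE:   seconds to sleep between snapshots (default: 0).
destroy_one_at_a_time() {
    total=0
    failed=0
    while IFS= read -r snap; do
        [ -n "$snap" ] || continue
        total=$((total + 1))
        # DESTROY is left unquoted on purpose so "sudo zfs destroy" splits into words;
        # stdin is redirected so the command cannot eat the rest of the list.
        if ! ${DESTROY:-echo would destroy} "$snap" </dev/null; then
            failed=$((failed + 1))
        fi
        [ "${PAUSE:-0}" = 0 ] || sleep "$PAUSE"
    done
    echo "processed $total, failed $failed" >&2
}
```

With DESTROY unset this only prints what it would do, which doubles as the validation step. Setting DESTROY="sudo zfs destroy" makes it real.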

While that ran, btop was usable:

See also, Why does the same command appear on two different ports with different times??

November, it’s time for November

This next session, I’ll do within tmux.

[17:49 r730-03 dvl ~] % time grep autosnap_2024-11 ~/tmp/now | cut -f 1 -w | grep -v monthly | xargs -n 1 echo sudo zfs destroy | wc -l

3598

grep autosnap_2024-11 ~/tmp/now 0.01s user 0.00s system 1% cpu 0.926 total

cut -f 1 -w 0.00s user 0.01s system 0% cpu 1.351 total

grep -v monthly 0.00s user 0.00s system 0% cpu 1.793 total

xargs -n 1 echo sudo zfs destroy 0.72s user 1.33s system 100% cpu 2.044 total

wc -l 0.01s user 0.01s system 1% cpu 2.044 total

With 3600 snapshots to delete, I’m going to assume it’ll take another 3.5 hours. Here goes:

[17:51 r730-03 dvl ~] % time grep autosnap_2024-11 ~/tmp/now | cut -f 1 -w | grep -v monthly | xargs -n 1 sudo zfs destroy

And that died when I rebooted my laptop, despite it being in a tmux session. Why?

sudo: 3 incorrect password attempts

Sorry, try again.

Sorry, try again.

sudo: 3 incorrect password attempts

grep autosnap_2024-11 ~/tmp/now 0.01s user 0.01s system 0% cpu 1:26:09.42 total

cut -f 1 -w 0.00s user 0.02s system 0% cpu 1:51:09.42 total

grep -v monthly 0.00s user 0.00s system 0% cpu 1:51:13.94 total

xargs -n 1 sudo zfs destroy 19.57s user 15.38s system 0% cpu 1:51:16.56 total

My sudo auth is based on ssh keys.
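With xargs -n 1 sudo, every snapshot is a fresh sudo invocation that has to authenticate. If that authentication depends on the ssh agent forwarded from the laptop (my reading of the note above, not something confirmed here), it fails as soon as the laptop goes away. One way around that is to authenticate once: write the destroy commands to a script and run the whole script under a single sudo. A sketch, with emit_destroy_script as a hypothetical name:

```sh
# Turn snapshot names on stdin into a small sh script on stdout.
# Names with characters outside those seen in this post are skipped,
# so nothing unexpected ends up in a script that runs as root.
emit_destroy_script() {
    echo '#!/bin/sh'
    while IFS= read -r snap; do
        case "$snap" in
            ''|*[!A-Za-z0-9_:.@/-]*) continue ;;             # empty or unexpected characters
            *@*) printf "zfs destroy '%s'\n" "$snap" ;;     # only snapshot names, never a bare dataset
        esac
    done
}
```

The pipeline then ends in `| emit_destroy_script > ~/tmp/destroy.sh`, followed by a look at the file and `sudo sh ~/tmp/destroy.sh` inside tmux. The file name is just an example.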

Let’s go again, redoing the list, because we’ve deleted some stuff from there.

[22:13 r730-03 dvl ~/tmp] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[22:14 r730-03 dvl ~/tmp] % wc -l ~/tmp/now

7345 /home/dvl/tmp/now

[22:14 r730-03 dvl ~] % grep autosnap_2024-11 ~/tmp/now | cut -f 1 -w | grep -v monthly | wc -l

1579

[22:14 r730-03 dvl ~/tmp] % time grep autosnap_2024-11 ~/tmp/now | cut -f 1 -w | grep -v monthly | xargs -n 1 sudo zfs destroy

grep autosnap_2024-11 ~/tmp/now 0.01s user 0.00s system 94% cpu 0.006 total

cut -f 1 -w 0.00s user 0.00s system 79% cpu 0.006 total

grep -v monthly 0.00s user 0.00s system 0% cpu 11:08.96 total

xargs -n 1 sudo zfs destroy 9.37s user 7.62s system 1% cpu 21:47.85 total

That didn’t take so long.

frequently and hourly

Next up:

[22:42 r730-03 dvl ~/tmp] % time grep autosnap_2024-12 ~/tmp/now | cut -f 1 -w | egrep -e 'frequently|hourly' | wc -l

3710

grep autosnap_2024-12 ~/tmp/now 0.01s user 0.01s system 35% cpu 0.035 total

cut -f 1 -w 0.01s user 0.01s system 24% cpu 0.042 total

egrep -e 'frequently|hourly' 0.05s user 0.00s system 99% cpu 0.047 total

wc -l 0.00s user 0.00s system 6% cpu 0.046 total

Let’s go again:

[22:44 r730-03 dvl ~/tmp] % time grep autosnap_2024-12 ~/tmp/now | cut -f 1 -w | egrep -e 'frequently|hourly' | xargs -n 1 sudo zfs destroy

load: 0.66 cmd: sudo 12117 [running] 0.00r 0.00u 0.00s 0% 6620k

load: 1.50 cmd: sudo 40626 [select] 0.97r 0.01u 0.00s 0% 9824k

grep autosnap_2024-12 ~/tmp/now 0.01s user 0.00s system 0% cpu 22:24.66 total

cut -f 1 -w 0.00s user 0.01s system 0% cpu 34:18.98 total

egrep -e 'frequently|hourly' 0.04s user 0.01s system 0% cpu 46:33.18 total

xargs -n 1 sudo zfs destroy 22.43s user 18.07s system 1% cpu 55:10.18 total

That took an hour.

Down near 2000 now

The next morning, I found the scrub had finished:

[14:32 r730-03 dvl ~] % zpool status data01

pool: data01

state: ONLINE

scan: scrub repaired 0B in 1 days 15:49:11 with 0 errors on Sat Jan 18 21:18:32 2025

config:

NAME STATE READ WRITE CKSUM

data01 ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/HGST_8CJVT8YE ONLINE 0 0 0

gpt/SG_ZHZ16KEX ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gpt/SG_ZHZ03BAT ONLINE 0 0 0

gpt/HGST_8CJW1G4E ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gpt/SG_ZL2NJBT2 ONLINE 0 0 0

gpt/HGST_5PGGTH3D ONLINE 0 0 0

errors: No known data errors

And only about 2,000 snapshots remaining:

[23:39 r730-03 dvl ~/tmp] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[13:21 r730-03 dvl ~/tmp] % wc -l ~/tmp/now

2132 /home/dvl/tmp/now

I’m going to leave that to sanoid.

sanoid?

I’m already running sanoid over here. Let’s see if that can help clean up old stuff for the long term. I want to remove snapshots, but not create snapshots.

This is where the snapshots are created, on r730-01. This is an extract from /usr/local/etc/sanoid/sanoid.conf on that host:

[data02/vm]

use_template = vm

recursive = zfs

# based on production

[template_vm]

frequently = 15

hourly = 48

daily = 35

monthly = 4

autosnap = yes

autoprune = yes

This is what I have prepared on r730-03, in the same file name:

[data01/snapshots]

process_children_only = yes

use_template = snapshots

recursive = yes

[template_snapshots]

# based on template_vm on r730-01

frequently = 60

hourly = 72

daily = 120

monthly = 24

autosnap = no

autoprune = yes

The autosnap directive should prevent sanoid from creating snapshots here. The autoprune will prune old snapshots based on the local directives, not the directives on the original host.

You will notice the difference between “recursive = yes” and “recursive = zfs”. The “zfs” type of recursion does not allow for “the possibility to override settings for child datasets.” With “yes”, you can specify different settings for child datasets; with “zfs”, you cannot.
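To illustrate what such an override could look like with “recursive = yes”, here is a hypothetical fragment. It is not part of my configuration; the child section and its daily value are made up for the example.

```ini
[data01/snapshots]
process_children_only = yes
use_template = snapshots
recursive = yes

# hypothetical override: this one child keeps fewer dailies than the template
[data01/snapshots/homeassistant-r730-01]
use_template = snapshots
daily = 30
```

The child section starts from the same template and changes only the one value it names.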

Now I wait until 51 past the hour to see how my sanoid instance cleans up the remaining snapshots.

[13:26 r730-03 dvl ~/tmp] % cat /usr/local/etc/cron.d/sanoid

# mail any output to `dan', no matter whose crontab this is

MAILTO=dan@example.org

PATH=/etc:/bin:/sbin:/usr/bin:/usr/sbin:/usr/local/bin

*/15 * * * * root /usr/bin/lockf -t 0 /tmp/.sanoid-cron-snapshot /usr/local/bin/sanoid --take-snapshots

51 * * * * root /usr/bin/lockf -t 0 /tmp/.sanoid-cron-prune /usr/local/bin/sanoid --prune-snapshots

51 past the hour

Look what I see now:

[13:26 r730-03 dvl ~/tmp] % ps auwwx | grep destroy root 31653 0.0 0.0 19528 8916 - S 13:51 0:00.01 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2025-01-01_05:30:07_frequently dvl 31655 0.0 0.0 12808 2396 2 S+ 13:51 0:00.00 grep destroy [13:51 r730-03 dvl ~/tmp] % ps auwwx | grep sanoid root 30411 0.2 0.0 36332 23544 - S 13:51 0:01.21 /usr/local/bin/perl /usr/local/bin/sanoid --prune-snapshots root 30410 0.0 0.0 12712 2124 - Is 13:51 0:00.00 /usr/bin/lockf -t 0 /tmp/.sanoid-cron-prune /usr/local/bin/sanoid --prune-snapshots dvl 31667 0.0 0.0 12808 2392 2 S+ 13:51 0:00.00 grep sanoid [13:51 r730-03 dvl ~/tmp] % [13:51 r730-03 dvl ~/tmp] % ps auwwx | grep destroy root 31736 0.0 0.0 19528 8920 - S 13:52 0:00.01 zfs destroy data01/snapshots/homeassistant-r730-01@autosnap_2025-01-01_14:30:02_frequently dvl 31738 0.0 0.0 12808 2392 2 S+ 13:52 0:00.00 grep destroy [13:52 r730-03 dvl ~/tmp] %

Now for the numbers:

[13:54 r730-03 dvl ~/tmp] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[13:54 r730-03 dvl ~/tmp] % wc -l ~/tmp/now

1914 /home/dvl/tmp/now

I suppose I could have let sanoid handle this from the start….

A bit more cleanup

We still have too many hourly snapshots:

[14:17 r730-03 dvl ~/tmp] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[14:18 r730-03 dvl ~/tmp] % grep -c hourly now

419

So let’s destroy all but the latest 72 hourly snapshots:

[14:21 r730-03 dvl ~/tmp] % grep hourly ~/tmp/now | cut -f 1 -w | head -347 | xargs -n 1 sudo zfs destroy [14:27 r730-03 dvl ~/tmp] %

This assumes the snapshots are sorted already. I could have run this through sort before head.

There’s a similar problem with frequently:

[14:27 r730-03 dvl ~/tmp] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[14:28 r730-03 dvl ~/tmp] % grep -c hourly now

72

[14:29 r730-03 dvl ~/tmp] % grep -c frequently now

236

[14:29 r730-03 dvl ~/tmp] % grep frequently ~/tmp/now | cut -f 1 -w | sort | head -1761 | xargs -n 1 sudo zfs destroy

[14:33 r730-03 dvl ~/tmp] %

This time I did use sort.
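Working out the head count by hand (419 minus 72 is 347) is easy to get wrong. A small filter that takes the number to keep and prints everything else avoids the arithmetic. This is a sketch; all_but_newest is a hypothetical name, and it assumes the names sort chronologically, which should hold for autosnap names of one type within one dataset.

```sh
# Print every input line except the newest N, oldest first.
# $1 = number of newest entries to keep.
# Sorts by name, so names must sort in chronological order.
all_but_newest() {
    keep=$1
    # newest first, skip the first $keep lines, then back to oldest first
    LC_ALL=C sort -r | tail -n +"$((keep + 1))" | LC_ALL=C sort
}
```

The hourly cleanup would then read `grep hourly ~/tmp/now | cut -f 1 -w | all_but_newest 72 | xargs -n 1 echo sudo zfs destroy`, with the echo removed once the list looks right.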

Are we there yet?

Based on the sanoid configuration:

# based on template_vm on r730-01

frequently = 60

hourly = 72

daily = 120

monthly = 24

autosnap = no

autoprune = yes

Let’s compare:

[15:32 r730-03 dvl ~/tmp] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[15:32 r730-03 dvl ~/tmp] % grep -c freq now

5

[15:32 r730-03 dvl ~/tmp] % grep -c hourly now

73

[15:32 r730-03 dvl ~/tmp] % grep -c daily now

50

[15:32 r730-03 dvl ~/tmp] % grep -c monthly now

16

Oh, perhaps I overdid it with daily and monthly. Not to worry.

This host now has 1/20 of the snapshots it had before:

[15:32 r730-03 dvl ~/tmp] % zfs list -r -t snapshot | wc -l

3098

I’ll leave it at that.

How much did this free up?

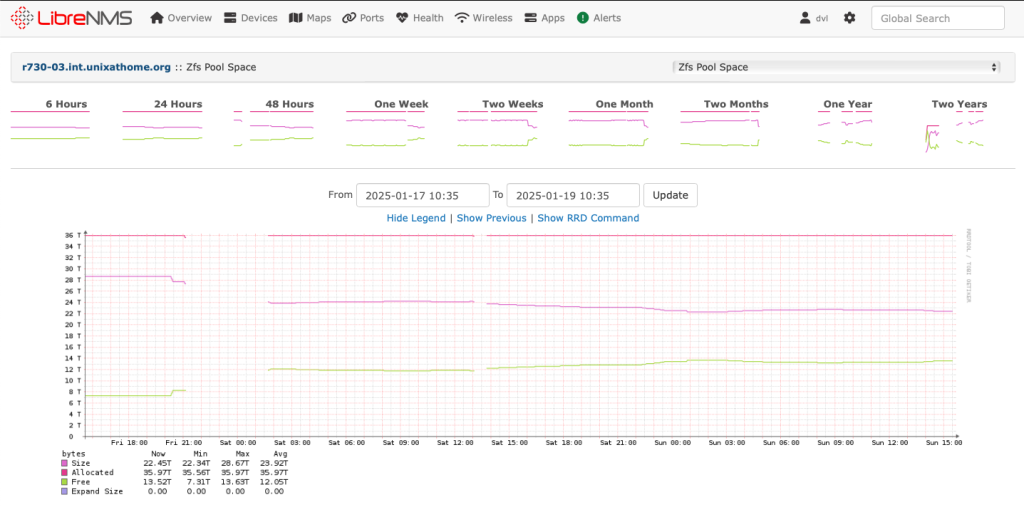

I wasn’t keeping track, so let’s refer to LibreNMS:

From the looks of that, data01 went from 7.31TB free to 13.63TB free. The deleted snapshots had consumed about 6.3TB, which is 28% of the 22.45TB pool. That is not trivial.

You can also see the two gaps where I was doing multiple destroys.

2025-01-20

I came back to check the number of snapshots:

[14:24 r730-03 dvl ~] % zfs list -r -t snapshot data01/snapshots/homeassistant-r730-01 > ~/tmp/now

[14:24 r730-03 dvl ~] % cd tmp

[14:24 r730-03 dvl ~/tmp] % wc -l now

205 now

[14:24 r730-03 dvl ~/tmp] % grep -c hourly now

73

[14:24 r730-03 dvl ~/tmp] % grep -c freq now

64

[14:24 r730-03 dvl ~/tmp] % grep -c daily now

51

[14:24 r730-03 dvl ~/tmp] %

That’s looking better.