I started replacing 3TB drives with 5TB drives in a 10-drive raidz2 array on a FreeBSD 10.3 box. I was not sure which drive tray to pull, so I powered off the server and, one by one, pulled each drive tray, photographed it, and reinserted it.

No changes were made.

The first reboot

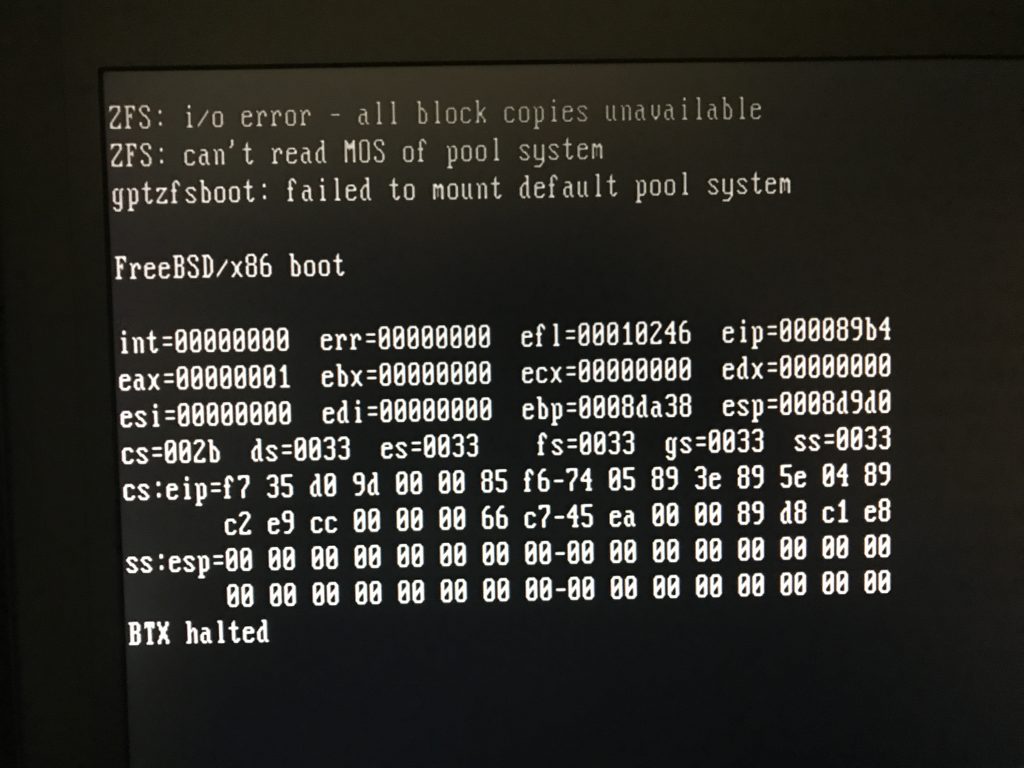

Upon powering up, I was greeted by this (I have typed out the text for search purposes):

ZFS: i/o error - all block copies unavailable

ZFS: can't read MOS of pool system

gptzfsboot: failed to mount default pool system

My first instinct was: I know I ran gpart bootcode.
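For readers who have not done this step, writing the boot code to a replacement disk looks roughly like the following. This is a minimal sketch, not necessarily the exact command I ran: it assumes the new disk was partitioned like the existing members, with the freebsd-boot partition at index 1 (the ZFS data lives on p3 in this pool). The function name `write_bootcode` and the disk name `da11` are hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: write the protective MBR (/boot/pmbr) and
# gptzfsboot to one disk.
# ASSUMPTION: the freebsd-boot partition is index 1 on that disk.
# Set DRY_RUN=1 to print the command instead of running it.
write_bootcode() {
    if [ -z "$1" ]; then
        echo "usage: write_bootcode DISK" >&2
        return 1
    fi
    # "${DRY_RUN:+echo}" expands to "echo" only when DRY_RUN is non-empty,
    # so the gpart command is printed rather than executed.
    ${DRY_RUN:+echo} gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 "$1"
}
```

Preview first with `DRY_RUN=1 write_bootcode da11`, then run `write_bootcode da11` as root once the command looks right. Here `da11` stands in for whatever name the new disk actually received.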

Some background:

- The server has 3x SAS2008 cards

- The server has 20 HDDs in two zpools

- The two zpools are split amongst the three HBAs

- The new drive was added to the third HBA, which had only 4 drives

- That new drive was on a new SFF-8087 breakout cable attached to an unused connector on that HBA

The device being replaced was the first one listed in the zpool status output for the bootable zpool, specifically da2p3, as shown in this output taken before I issued the zpool replace command.

$ zpool status system

pool: system

state: ONLINE

scan: scrub repaired 0 in 21h33m with 0 errors on Thu Aug 10 00:42:38 2017

config:

NAME STATE READ WRITE CKSUM

system ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

da2p3 ONLINE 0 0 0

da7p3 ONLINE 0 0 0

da1p3 ONLINE 0 0 0

da3p3 ONLINE 0 0 0

da0p3 ONLINE 0 0 0

da9p3 ONLINE 0 0 0

da4p3 ONLINE 0 0 0

da6p3 ONLINE 0 0 0

da10p3 ONLINE 0 0 0

da5p3 ONLINE 0 0 0

logs

mirror-1 ONLINE 0 0 0

ada1p1 ONLINE 0 0 0

ada0p1 ONLINE 0 0 0

errors: No known data errors

Make all HBA bootable

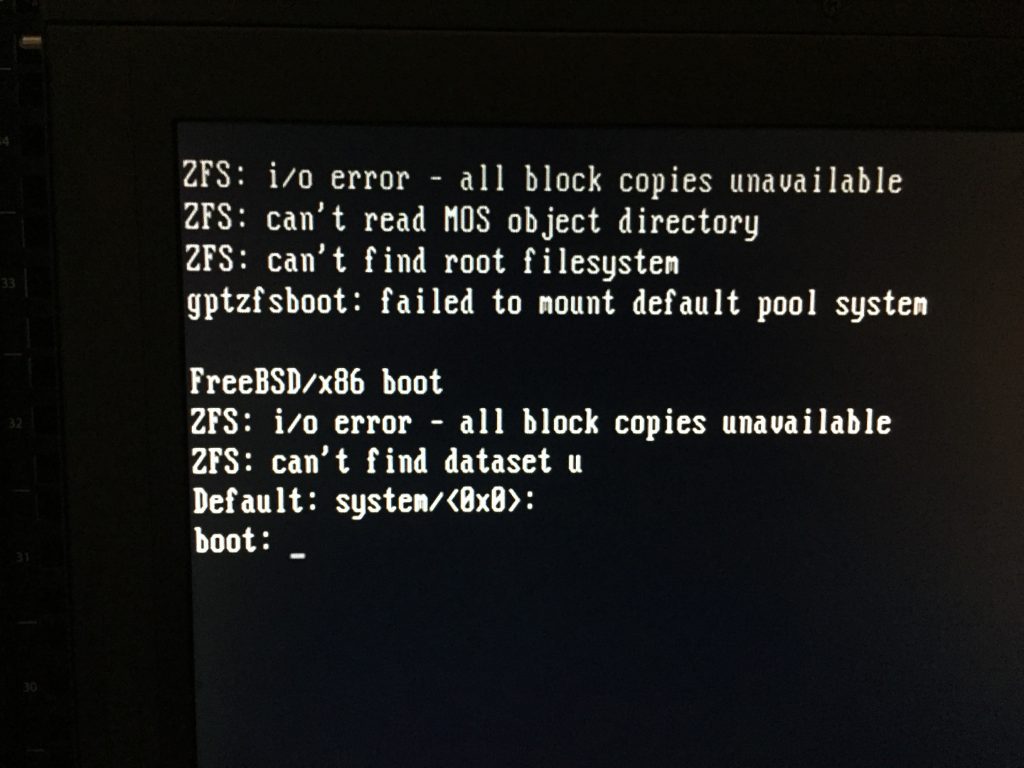

I rebooted the box and went into the HBA BIOS. I set all HBAs bootable; previously only one HBA was bootable. The next reboot gave a slightly different result, and all 21 drives were reported by the HBA BIOS.

It seems to me that the boot process got farther, but then failed again. The main differences are the added "can't find root filesystem" message, and that it stops at the boot: prompt rather than at BTX halted.

For search purposes:

ZFS: i/o error - all block copies unavailable

ZFS: can't read MOS of pool system

ZFS: can't find root filesystem

gptzfsboot: failed to mount default pool system

Move new drive to where old drive was

My next thought: move the new 5TB drive to where the original 3TB drive was located.

At the same time, I added another new 5TB drive in the internal space of the box (where the first 5TB drive had originally been installed), but this time attached to the motherboard rather than to the HBA.

The box booted just fine. Problem solved.

For discussion

What happened? Why did I have to move the new drive into a specific drive bay?

I let Josh Paetzel read the above. He and I chatted about the potential problems.

Any incorrect information below is my failure to correctly interpret what Josh told me.

In short, booting from HBAs can be tricky. They sometimes lie to the BIOS. They try to keep their SAS infrastructure a certain way (I don’t know enough about this to properly discuss it).

Once you have set up a raidz system, it is a very good idea to follow this procedure to test booting:

- pull one drive

- reboot

- reinsert drive

Repeat that until you have booted with each drive removed in turn.
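Working through that procedure means knowing which physical disks back the pool. As a minimal sketch, assuming pool members are named daNpM as in the zpool status output above, this hypothetical helper turns zpool status output into a checklist with one line per disk to pull. The ada* log devices do not match the ^da pattern, so they are skipped.

```sh
#!/bin/sh
# Hypothetical helper: read `zpool status` output on stdin and print a
# checklist for the pull-one-drive boot test.
# ASSUMPTION: pool members are named daNpM (e.g. da2p3).
boot_test_checklist() {
    awk '{
        for (i = 1; i <= NF; i++)
            if ($i ~ /^da[0-9]+p[0-9]+$/) {
                d = $i
                sub(/p[0-9]+$/, "", d)   # strip partition: da2p3 -> da2
                print d
            }
    }' | sort -u | while read -r disk; do
        printf '[ ] boot with %s removed\n' "$disk"
    done
}
```

Usage: `zpool status system | boot_test_checklist`. The sort is plain lexicographic, so da10 is listed before da2; the order does not matter for the test, only that every disk gets its turn.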

Alternatively, you could boot from non-raidz devices: a hardware mirror, a ZFS mirror, a CD-ROM, or UFS drives.

Yes, we’ll hear from people who say this is precisely why they boot from UFS and then attach ZFS.

What next?

I will continue with my drive replacements. The issue is hardware, not ZFS.

When all the 3TB drives have been replaced with 5TB drives, I may try booting from this SSD mirror zpool instead:

[dan@knew:~] $ zpool status zoomzoom

pool: zoomzoom

state: ONLINE

scan: scrub repaired 0 in 0h0m with 0 errors on Thu Aug 17 03:31:50 2017

config:

NAME STATE READ WRITE CKSUM

zoomzoom ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ada1p2 ONLINE 0 0 0

ada0p2 ONLINE 0 0 0

errors: No known data errors

$ gpart show ada0 ada1

=> 34 937703021 ada0 GPT (447G)

34 6 - free - (3.0K)

40 16777216 1 freebsd-zfs (8.0G)

16777256 920649728 2 freebsd-zfs (439G)

937426984 276071 - free - (135M)

=> 34 937703021 ada1 GPT (447G)

34 6 - free - (3.0K)

40 16777216 1 freebsd-zfs (8.0G)

16777256 920649728 2 freebsd-zfs (439G)

937426984 276071 - free - (135M)

That 8GB partition is used by the system pool as a log.

The 439G partition was used as a tape spooling location, but that tape library is now on my R610 server. I can delete that.
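One thing to check before booting from zoomzoom: the gpart show output above lists only freebsd-zfs partitions on ada0 and ada1. If the box boots via BIOS (as the gptzfsboot messages earlier suggest), the pmbr loads gptzfsboot from a freebsd-boot partition on the boot disk, so these SSDs would need one. A minimal sketch of a check, assuming the column layout of the gpart show output above; the function name is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: read `gpart show` output on stdin and report,
# per disk, whether a freebsd-boot partition is present.
# ASSUMPTION: "=>" header lines carry the disk name in field 4, and
# partition lines carry the partition type in field 4.
boot_partition_report() {
    awk '
        $1 == "=>" { disk = $4; order[++n] = disk; seen[disk] = 0; next }
        $4 == "freebsd-boot" { seen[disk] = 1 }
        END {
            for (i = 1; i <= n; i++)
                printf "%s %s\n", order[i], (seen[order[i]] ? "yes" : "no")
        }
    '
}
```

Usage: `gpart show ada0 ada1 | boot_partition_report`. Given the output above, both ada0 and ada1 would be reported as "no".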

What I may try is creating a dedicated boot zpool, as Glen Barber did.

In the end, I did not create a dedicated boot zpool. It was not what I wanted, and these 5TB drives were fine.