It is time to let the knew server go. It has been through multiple upgrades: new drives, new boards, and new chassis. It has been replaced by r730-03.

Before I let it go, I want to clear off the drives. By that I mean:

```
zpool labelclear
gpart destroy
dd if=/dev/zero of=/dev/ad0
```

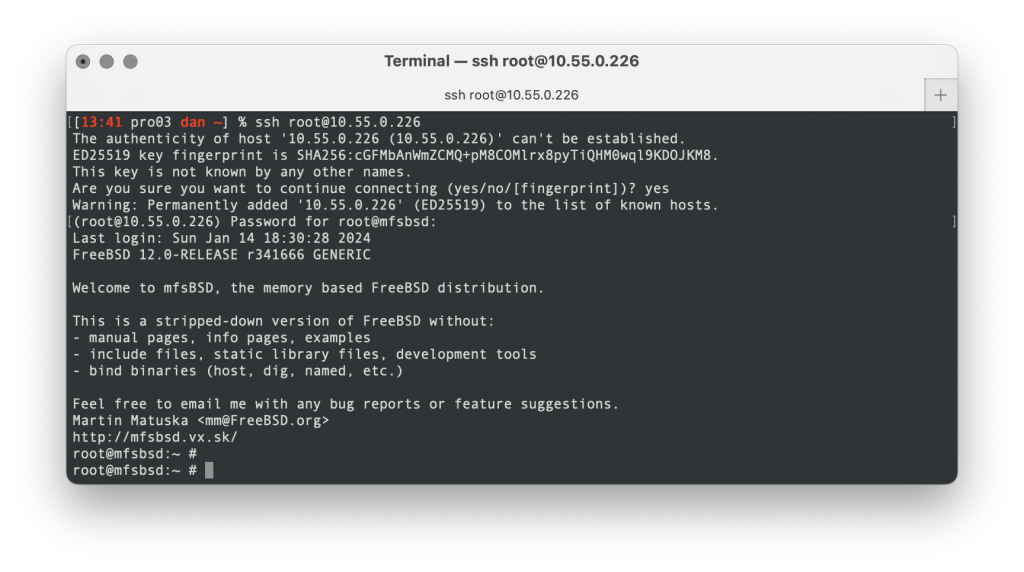

I did this by booting the host from mfsBSD (a lovely USB-bootable version of FreeBSD). I then ssh'd in as root (one of the few situations where ssh as root is acceptable to me).

Get a list of the drives, and exclude the boot drive

This is how I got the drives:

```
sysctl -n kern.disks > clear-drives.sh
chmod +x clear-drives.sh
```

I figured out which drive I was booting from and removed it from the list. This is left as an exercise for the reader. Look at mount, df -h /, etc.; /var/run/dmesg.boot might also be useful.
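If you would rather not edit the list by hand, here is a minimal sketch of dropping the boot drive from a kern.disks-style list. It assumes you have already identified the boot drive yourself; `exclude_drive` is a hypothetical helper written for this example, not something from the original run.

```shell
#!/bin/sh
# exclude_drive LIST BOOT
# Prints every drive in the space-separated LIST except BOOT.
# Hypothetical helper: the boot drive must already be known.
exclude_drive() {
    list="$1"
    boot="$2"
    result=""
    # ${list} is deliberately unquoted so it splits into words.
    for d in ${list}; do
        if [ "${d}" != "${boot}" ]; then
            # Append with a single separating space.
            result="${result:+${result} }${d}"
        fi
    done
    printf '%s\n' "${result}"
}
```

For example, if the USB stick you booted from showed up as da21 (a made-up name here), `exclude_drive "$(sysctl -n kern.disks)" da21` prints the remaining drives, ready to paste into the DRIVES= line of clear-drives.sh.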

Write-once, modify-many

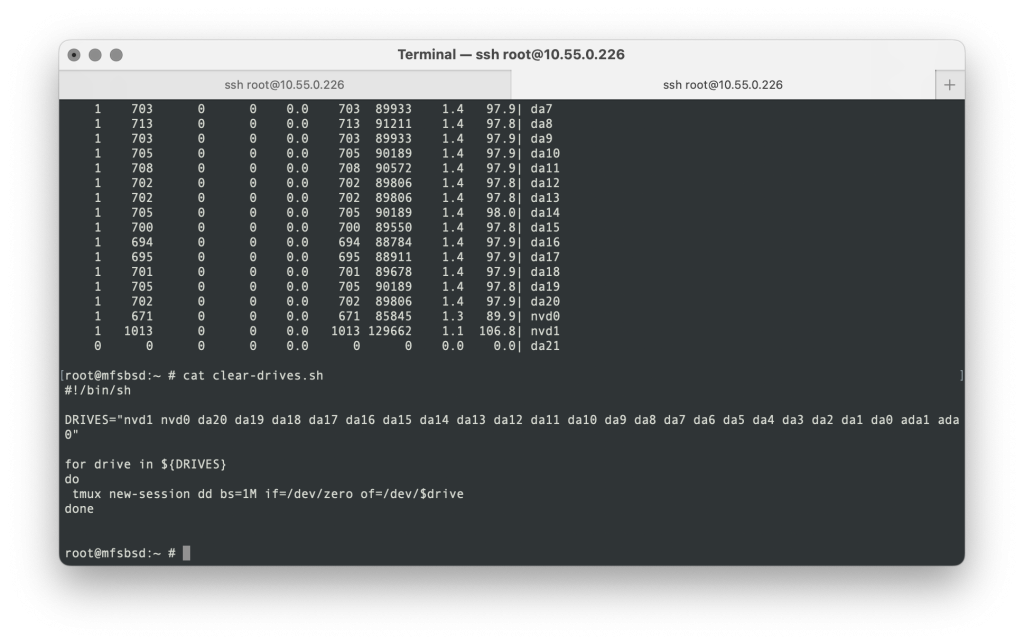

I modified clear-drives.sh to look like this:

```
root@mfsbsd:~ # cat clear-drives.sh
#!/bin/sh

DRIVES="nvd1 nvd0 da20 da19 da18 da17 da16 da15 da14 da13 da12 da11 da10 da9 da8 da7 da6 da5 da4 da3 da2 da1 da0 ada1 ada0"

for drive in ${DRIVES}
do
  zpool labelclear -f /dev/${drive}p1
done
root@mfsbsd:~ #
```

I modified and ran this script multiple times, swapping in each of these commands as the body of the loop:

```
zpool labelclear -f /dev/${drive}p1
zpool labelclear -f /dev/${drive}p3
gpart destroy -F ${drive}
tmux new-session dd bs=1M if=/dev/zero of=/dev/$drive
```

Why p1 and p3? My drives varied. Some were like this:

```
=>        34  937703021  ada0  GPT  (447G)
          34       2014        - free -  (1.0M)
        2048       1024     1  freebsd-boot  (512K)
        3072       1024        - free -  (512K)
        4096    8388608     2  freebsd-swap  (4.0G)
     8392704   41943040     3  freebsd-zfs  (20G)
    50335744  887367311        - free -  (423G)
```

And some like this:

```
=>          34  9767541101  da1  GPT  (4.5T)
            34           6       - free -  (3.0K)
            40  9766000000    1  freebsd-zfs  (4.5T)
    9766000040     1541095       - free -  (752M)
```
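Rather than running the script once for p1 and again for p3, one option is to let gpart show report which partitions are freebsd-zfs. This is a minimal sketch, assuming the column layout seen above (start, size, index, type, size); the function name `zfs_labelclear_cmds` is hypothetical. It only prints the `zpool labelclear` commands, so they can be reviewed before anything is run.

```shell
#!/bin/sh
# zfs_labelclear_cmds DRIVE
# Reads `gpart show DRIVE` output on stdin and prints one
# `zpool labelclear -f` command per freebsd-zfs partition.
# Assumes columns: start, size, index, type, (size).
# Nothing is executed; the commands are only printed.
zfs_labelclear_cmds() {
    drive="$1"
    awk -v drive="${drive}" '
        # The header row has the disk name in field 4 and free-space
        # rows have "free" there, so only partition rows can match.
        $4 == "freebsd-zfs" {
            printf "zpool labelclear -f /dev/%sp%s\n", drive, $3
        }
    '
}
```

For the first layout above, `gpart show ada0 | zfs_labelclear_cmds ada0` would print a single command for ada0p3; for the second, `gpart show da1 | zfs_labelclear_cmds da1` would print one for da1p1. Piping the reviewed output to sh would then run them.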

The tmux issue

After each run of the script that invoked tmux, I had to exit all of those tmux sessions to get back to the command line. I’m not sure what I did wrong there, but someone will tell me.
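A likely explanation, offered as a guess rather than a diagnosis: tmux new-session attaches to the new session by default, so each pass through the loop leaves you inside a session until you leave it. Adding -d creates the session detached and returns immediately. Below is a minimal sketch; the session names (wipe-DRIVE) and the `DRY_RUN` variable are my own additions, not part of the original run. With `DRY_RUN=1` it only prints the tmux commands.

```shell
#!/bin/sh
# launch_wipes DRIVE...
# Starts one detached tmux session per drive, each running dd to
# zero that drive. -d stops tmux from attaching, so the loop
# returns to the prompt right away.
# Session names (wipe-DRIVE) and DRY_RUN are illustrative assumptions.
launch_wipes() {
    for drive in "$@"; do
        cmd="dd bs=1M if=/dev/zero of=/dev/${drive}"
        if [ "${DRY_RUN:-0}" = "1" ]; then
            # Print what would be run instead of running it.
            printf 'tmux new-session -d -s wipe-%s "%s"\n' "${drive}" "${cmd}"
        else
            tmux new-session -d -s "wipe-${drive}" "${cmd}"
        fi
    done
}
```

Running `DRY_RUN=1 launch_wipes ${DRIVES}` shows the commands first; without `DRY_RUN`, tmux ls lists the sessions and tmux attach -t wipe-da0 lets you look at one of them.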

The screen shots

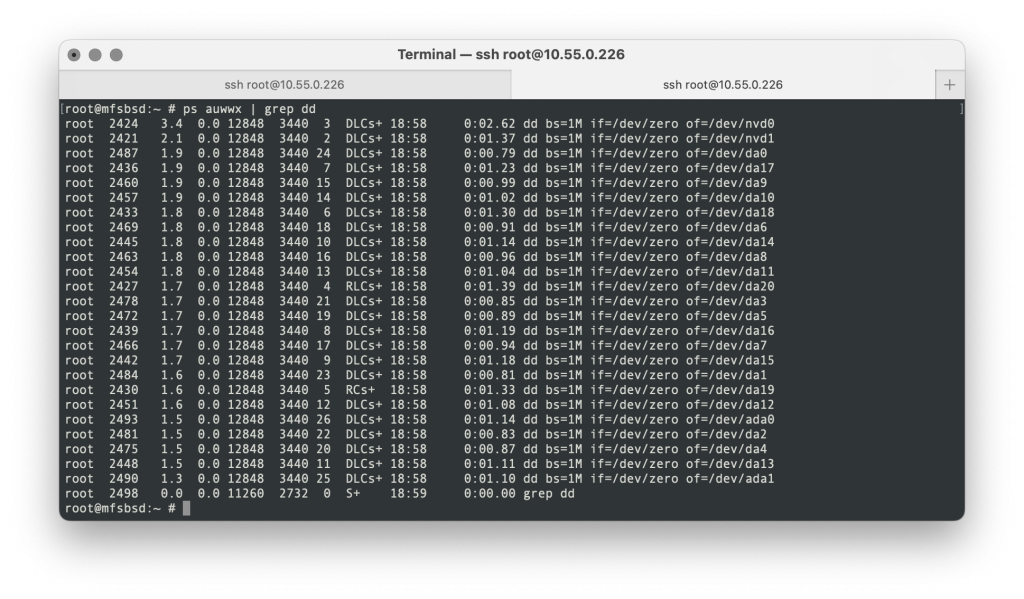

Here are all the dd sessions:

This is the script, as seen on the big screen:

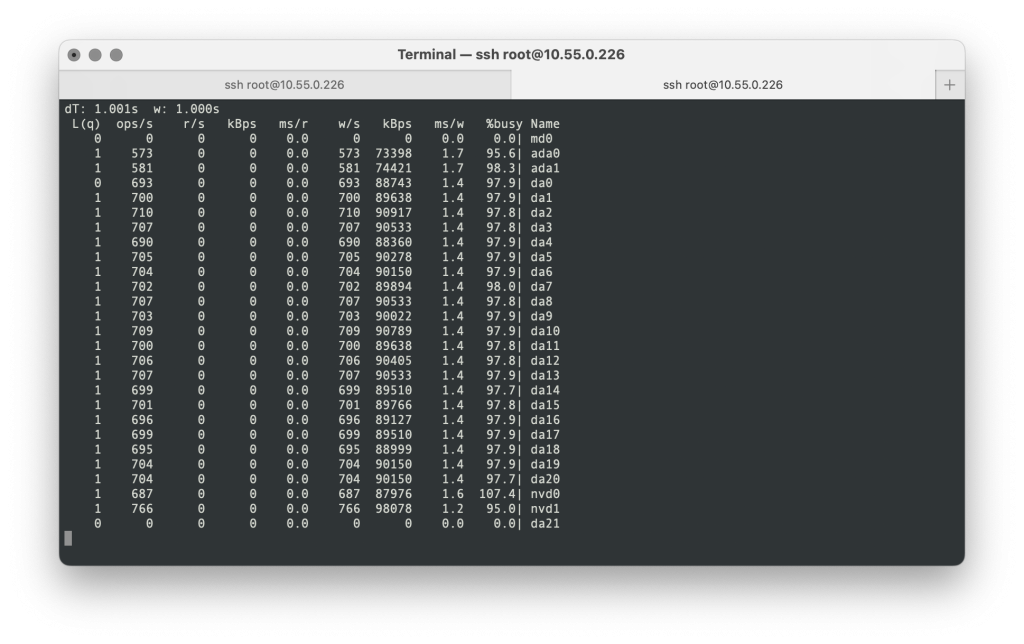

Here is the gstat output showing that all the drives are busy:

The aftermath

As I type this, nvd0 and nvd1 are already finished (they are the smallest drives).

I suspect this will be done in about 8-12 hours.

I don’t need to do anything more than a dd with zeros on this. I’m sure some nation state might be able to pull something off the drives, but I’m quite confident I am not [yet] a target.

Yes, if I’d encrypted the drives, this would not be necessary.

EDIT: adding load output. The system is essentially idle:

```
root@mfsbsd:~ # w
 8:53PM  up  2:26, 24 users, load averages: 0.58, 0.70, 0.67
USER   TTY     FROM                                 LOGIN@  IDLE WHAT
root   v0      -                                    6:30PM  2:13 -csh (csh)
root   pts/0   pro03.startpoint.vpn.unixathome.org  6:45PM    45 gstat -p
root   pts/1   pro03.startpoint.vpn.unixathome.org  6:52PM     - w
root   pts/4   tmux(2420).%2                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da20
root   pts/5   tmux(2420).%3                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da19
root   pts/6   tmux(2420).%4                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da18
root   pts/7   tmux(2420).%5                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da17
root   pts/8   tmux(2420).%6                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da16
root   pts/9   tmux(2420).%7                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da15
root   pts/10  tmux(2420).%8                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da14
root   pts/11  tmux(2420).%9                        6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da13
root   pts/12  tmux(2420).%10                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da12
root   pts/13  tmux(2420).%11                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da11
root   pts/14  tmux(2420).%12                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da10
root   pts/15  tmux(2420).%13                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da9
root   pts/16  tmux(2420).%14                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da8
root   pts/17  tmux(2420).%15                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da7
root   pts/18  tmux(2420).%16                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da6
root   pts/19  tmux(2420).%17                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da5
root   pts/20  tmux(2420).%18                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da4
root   pts/21  tmux(2420).%19                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da3
root   pts/22  tmux(2420).%20                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da2
root   pts/23  tmux(2420).%21                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da1
root   pts/24  tmux(2420).%22                       6:58PM  1:55 dd bs=1M if=/dev/zero of=/dev/da0
root@mfsbsd:~ #
```

And here is top:

```
last pid:  2563;  load averages:  0.81,  0.71,  0.67    up 0+02:27:23  20:55:40
37 processes:  1 running, 36 sleeping
CPU:  0.0% user,  0.0% nice,  6.9% system,  0.9% interrupt, 92.2% idle
Mem: 45M Active, 300M Inact, 487M Wired, 53M Buf, 61G Free
Swap:

  PID USERNAME    THR PRI NICE   SIZE    RES STATE    C   TIME    WCPU COMMAND
 2430 root          1  21    0    13M  3440K physwr   6   2:40   2.34% dd
 2436 root          1  21    0    13M  3440K physwr   3   2:41   2.34% dd
 2463 root          1  21    0    13M  3440K physwr   2   2:40   2.34% dd
 2478 root          1  21    0    13M  3440K physwr   7   2:40   2.33% dd
 2475 root          1  21    0    13M  3440K physwr   3   2:40   2.33% dd
 2472 root          1  21    0    13M  3440K physwr   7   2:40   2.32% dd
 2484 root          1  20    0    13M  3440K physwr   6   2:40   2.32% dd
 2451 root          1  20    0    13M  3440K physwr   2   2:40   2.31% dd
 2466 root          1  21    0    13M  3440K physwr   7   2:40   2.31% dd
 2460 root          1  21    0    13M  3440K physwr   2   2:40   2.31% dd
 2481 root          1  20    0    13M  3440K physwr   4   2:41   2.31% dd
 2433 root          1  21    0    13M  3440K physwr   3   2:41   2.30% dd
 2487 root          1  21    0    13M  3440K physwr   5   2:39   2.30% dd
 2454 root          1  21    0    13M  3440K physwr   6   2:40   2.30% dd
 2445 root          1  21    0    13M  3440K physwr   5   2:40   2.29% dd
 2448 root          1  21    0    13M  3440K physwr   3   2:40   2.29% dd
 2469 root          1  20    0    13M  3440K physwr   6   2:40   2.29% dd
 2427 root          1  21    0    13M  3440K physwr   2   2:41   2.28% dd
 2442 root          1  21    0    13M  3440K physwr   6   2:40   2.28% dd
 2439 root          1  21    0    13M  3440K physwr   5   2:39   2.28% dd
 2457 root          1  21    0    13M  3440K physwr   0   2:40   2.26% dd
 2509 root          1  20    0    18M  5080K nanslp   0   0:01   0.05% gstat
 2563 root          1  20    0    13M  3724K CPU2     2   0:00   0.03% top
 1948 root          1  20    0    20M    11M select   4   0:00   0.01% sshd
```

EDIT 2024-01-15: The next morning, all done:

```
root@mfsbsd:~ # tmux ls
no server running on /tmp/tmux-0/default
root@mfsbsd:~ # ps auwwx | grep dd
root 2606 0.0 0.0 11104 2700  2  R+ 15:52 0:00.00 grep dd
```