The FreshPorts dev, test, and stage websites are hosted on a server in my basement. Each instance consists of two jails:

- an ingress node – for pulling new commits (and other data) into the database.

- a webserver node – for displaying the web pages.

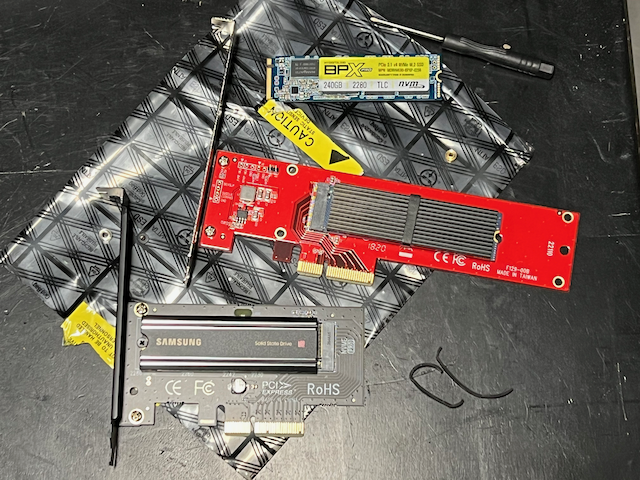

The new drives:

Sometimes the zpool gets too close to full. I tweeted about one incident in March 2021. I had hoped that some spare SSDs might be the answer. Eventually, after yet another full-pool incident (I haven’t mentioned them all), I bought new SSDs.

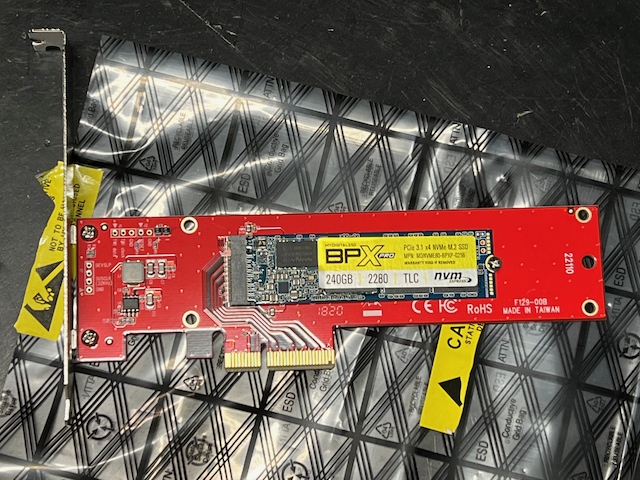

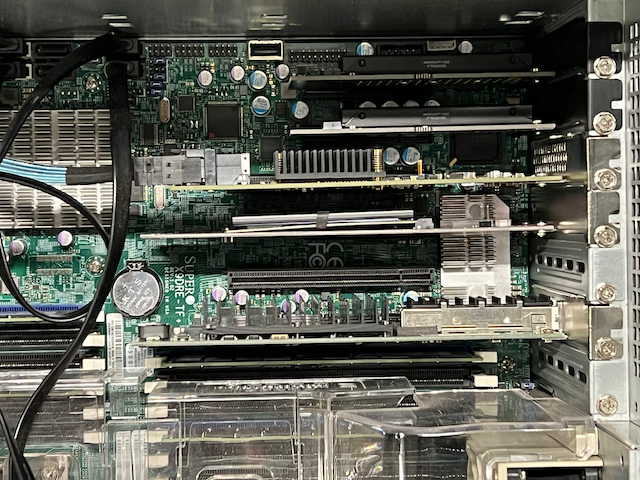

The first drive added to a spare PCIe interface:

They arrived on Saturday and I installed them this morning.

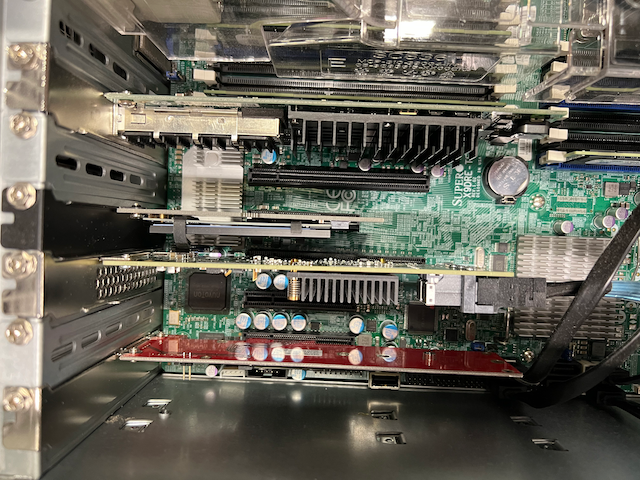

The slots before:

This post is about upgrading that zpool to contain the new devices.

In this post:

- FreeBSD 13.1-RELEASE

- Full disclosure: As an Amazon Associate I earn from qualifying purchases. The links below are my affiliate links.

- SAMSUNG 980 PRO SSD with Heatsink 1TB PCIe Gen 4 NVMe M.2 Internal Solid State Hard Drive, Heat Control, Max Speed, PS5 Compatible, MZ-V8P1T0CW

- M.2 NVME to PCIe 3.0 x4 Adapter with Aluminum Heatsink Solution – I’m not using the supplied heatsink – the NVMe device already has one

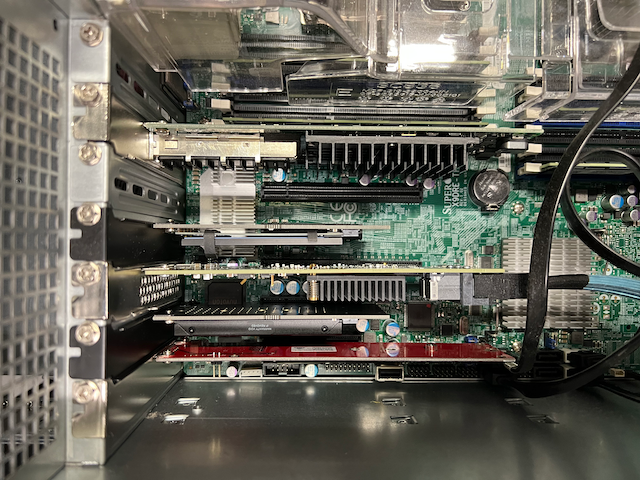

The slots after:

What uses all this space?

The ingress nodes are actually the storage-hungry side.

```
[slocum dan ~] % zfs list tank_fast/data/dev-ingress01 tank_fast/data/dev-nginx01 nvd/freshports/dev nvd/freshports/dev-nginx0
NAME                          USED  AVAIL  REFER  MOUNTPOINT
nvd/freshports/dev           5.10G  62.7G    23K  /jails/dev-ingress01/var/db/freshports
nvd/freshports/dev-nginx01   98.4M  62.7G    24K  none
tank_fast/data/dev-ingress01 12.7G   172G    96K  none
tank_fast/data/dev-nginx01   57.1M   172G    96K  none
```

That’s about 17.8GB for the ingress, compared with only about 155MB for the website. Most of the data for the ingress node is the repos and the old text commits still on disk.
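Rather than adding up rounded figures like these by hand, zfs can print exact byte counts: `zfs list -H -p -o name,used` drops the header, separates fields with tabs, and prints USED in bytes. Here’s a minimal sketch that sums that column, assuming a POSIX shell and awk; the function names are mine, for illustration only.

```shell
# Hypothetical helpers (names are illustrative). Input is assumed to come from:
#   zfs list -H -p -o name,used DATASET...
# -H: no header, tab-separated fields; -p: exact byte counts.

# Sum the second (USED) column of "name<TAB>bytes" lines read on stdin.
sum_used_bytes() {
    awk 'BEGIN { FS = "\t"; total = 0 }
         { total += $2 }
         END { printf "%.0f\n", total }'
}

# Format a byte count ($1) as GiB or MiB with one decimal place.
human_bytes() {
    awk -v b="$1" 'BEGIN {
        n = b + 0
        if (n >= 1073741824) printf "%.1fG\n", n / 1073741824
        else                 printf "%.1fM\n", n / 1048576
    }'
}
```

For example, `zfs list -H -p -o name,used nvd/freshports/dev tank_fast/data/dev-ingress01 | sum_used_bytes`, then pass the result to `human_bytes`. Don’t list a parent together with its children: a dataset’s USED already includes its descendants, so they would be counted twice.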

```
[slocum dan ~] % zfs list -r tank_fast/data/dev-ingress01 nvd/freshports/dev
NAME  USED  AVAIL  REFER  MOUNTPOINT
nvd/freshports/dev  5.10G  62.7G  23K  /jails/dev-ingress01/var/db/freshports
nvd/freshports/dev/cache  106K  62.7G  35.5K  /jails/dev-ingress01/var/db/freshports/cache
nvd/freshports/dev/cache/html  42K  62.7G  42K  /jails/dev-ingress01/var/db/freshports/cache/html
nvd/freshports/dev/cache/spooling  28K  62.7G  28K  /jails/dev-ingress01/var/db/freshports/cache/spooling
nvd/freshports/dev/ingress  5.08G  62.7G  23K  /jails/dev-ingress01/var/db/ingress
nvd/freshports/dev/ingress/message-queues  1.80M  62.7G  1.33M  /jails/dev-ingress01/var/db/ingress/message-queues
nvd/freshports/dev/ingress/repos  5.08G  62.7G  2.28G  /jails/dev-ingress01/var/db/ingress/repos
nvd/freshports/dev/ingress/repos/src  2.80G  62.7G  2.80G  /jails/dev-ingress01/var/db/ingress/repos/src
nvd/freshports/dev/message-queues  16.1M  62.7G  15.2M  /jails/dev-ingress01/var/db/freshports/message-queues
tank_fast/data/dev-ingress01  12.7G  172G  96K  none
tank_fast/data/dev-ingress01/messages  12.7G  172G  96K  none
tank_fast/data/dev-ingress01/messages/archive  12.7G  172G  12.7G  /jails/dev-ingress01/var/db/freshports/message-queues/archive
tank_fast/data/dev-ingress01/usr.local.lib.perl5.site_perl.FreshPorts  3.12M  172G  3.12M  /jails/dev-ingress01/usr/local/lib/perl5/site_perl/FreshPorts
tank_fast/data/dev-ingress01/usr.local.libexec.freshports  2.18M  172G  2.18M  /jails/dev-ingress01/usr/local/libexec/freshports
```

The biggest consumer of space is the jail within the jail, specifically its distfiles directory.

```
[slocum dan ~] % sudo du -ch -d 1 /jails/dev-ingress01/jails/freshports/usr/ports
19M   /jails/dev-ingress01/jails/freshports/usr/ports/games
22M   /jails/dev-ingress01/jails/freshports/usr/ports/net
55K   /jails/dev-ingress01/jails/freshports/usr/ports/base
5.7M  /jails/dev-ingress01/jails/freshports/usr/ports/java
12M   /jails/dev-ingress01/jails/freshports/usr/ports/cad
15M   /jails/dev-ingress01/jails/freshports/usr/ports/databases
136K  /jails/dev-ingress01/jails/freshports/usr/ports/vietnamese
13M   /jails/dev-ingress01/jails/freshports/usr/ports/audio
472K  /jails/dev-ingress01/jails/freshports/usr/ports/korean
219K  /jails/dev-ingress01/jails/freshports/usr/ports/Tools
1.2M  /jails/dev-ingress01/jails/freshports/usr/ports/french
21M   /jails/dev-ingress01/jails/freshports/usr/ports/graphics
4.4M  /jails/dev-ingress01/jails/freshports/usr/ports/japanese
235K  /jails/dev-ingress01/jails/freshports/usr/ports/russian
16M   /jails/dev-ingress01/jails/freshports/usr/ports/textproc
2.2M  /jails/dev-ingress01/jails/freshports/usr/ports/astro
48K   /jails/dev-ingress01/jails/freshports/usr/ports/ukrainian
61K   /jails/dev-ingress01/jails/freshports/usr/ports/arabic
4.8M  /jails/dev-ingress01/jails/freshports/usr/ports/emulators
41G   /jails/dev-ingress01/jails/freshports/usr/ports/distfiles
65M   /jails/dev-ingress01/jails/freshports/usr/ports/www
3.2M  /jails/dev-ingress01/jails/freshports/usr/ports/comms
4.5M  /jails/dev-ingress01/jails/freshports/usr/ports/deskutils
11M   /jails/dev-ingress01/jails/freshports/usr/ports/misc
909K  /jails/dev-ingress01/jails/freshports/usr/ports/news
100K  /jails/dev-ingress01/jails/freshports/usr/ports/x11-servers
406K  /jails/dev-ingress01/jails/freshports/usr/ports/accessibility
8.4M  /jails/dev-ingress01/jails/freshports/usr/ports/editors
1.0M  /jails/dev-ingress01/jails/freshports/usr/ports/ftp
11M   /jails/dev-ingress01/jails/freshports/usr/ports/science
13M   /jails/dev-ingress01/jails/freshports/usr/ports/mail
2.7M  /jails/dev-ingress01/jails/freshports/usr/ports/x11-fonts
25M   /jails/dev-ingress01/jails/freshports/usr/ports/security
2.8M  /jails/dev-ingress01/jails/freshports/usr/ports/net-p2p
4.4M  /jails/dev-ingress01/jails/freshports/usr/ports/x11-toolkits
58K   /jails/dev-ingress01/jails/freshports/usr/ports/hebrew
7.3M  /jails/dev-ingress01/jails/freshports/usr/ports/net-mgmt
3.7M  /jails/dev-ingress01/jails/freshports/usr/ports/net-im
26K   /jails/dev-ingress01/jails/freshports/usr/ports/Keywords
8.6M  /jails/dev-ingress01/jails/freshports/usr/ports/x11
35K   /jails/dev-ingress01/jails/freshports/usr/ports/portuguese
13K   /jails/dev-ingress01/jails/freshports/usr/ports/.hooks
3.6M  /jails/dev-ingress01/jails/freshports/usr/ports/biology
664K  /jails/dev-ingress01/jails/freshports/usr/ports/x11-fm
158K  /jails/dev-ingress01/jails/freshports/usr/ports/german
501K  /jails/dev-ingress01/jails/freshports/usr/ports/polish
31K   /jails/dev-ingress01/jails/freshports/usr/ports/hungarian
397K  /jails/dev-ingress01/jails/freshports/usr/ports/x11-clocks
17M   /jails/dev-ingress01/jails/freshports/usr/ports/math
3.6M  /jails/dev-ingress01/jails/freshports/usr/ports/chinese
1.2M  /jails/dev-ingress01/jails/freshports/usr/ports/Mk
2.3M  /jails/dev-ingress01/jails/freshports/usr/ports/dns
7.0M  /jails/dev-ingress01/jails/freshports/usr/ports/finance
24M   /jails/dev-ingress01/jails/freshports/usr/ports/sysutils
2.5M  /jails/dev-ingress01/jails/freshports/usr/ports/x11-wm
82M   /jails/dev-ingress01/jails/freshports/usr/ports/devel
1.2M  /jails/dev-ingress01/jails/freshports/usr/ports/benchmarks
8.5M  /jails/dev-ingress01/jails/freshports/usr/ports/multimedia
1.2M  /jails/dev-ingress01/jails/freshports/usr/ports/shells
1.6M  /jails/dev-ingress01/jails/freshports/usr/ports/irc
1.2G  /jails/dev-ingress01/jails/freshports/usr/ports/.git
821K  /jails/dev-ingress01/jails/freshports/usr/ports/ports-mgmt
5.7M  /jails/dev-ingress01/jails/freshports/usr/ports/print
494K  /jails/dev-ingress01/jails/freshports/usr/ports/x11-drivers
63M   /jails/dev-ingress01/jails/freshports/usr/ports/x11-themes
513K  /jails/dev-ingress01/jails/freshports/usr/ports/Templates
23M   /jails/dev-ingress01/jails/freshports/usr/ports/lang
1.4M  /jails/dev-ingress01/jails/freshports/usr/ports/converters
2.1M  /jails/dev-ingress01/jails/freshports/usr/ports/archivers
43G   /jails/dev-ingress01/jails/freshports/usr/ports
43G   total
```

FreshPorts uses the distfiles to extract information about each port. It doesn’t delete them afterward, which saves downloading them again.
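To spot the biggest consumer without reading the whole listing, the du output can be sorted. A minimal sketch, assuming `du -k -d 1` (whole KiB sizes, which sort numerically, unlike the `-h` output above) and paths without spaces; `largest_dirs` is a hypothetical helper name.

```shell
# Hypothetical helper: print the N largest entries from `du -k -d 1 DIR`
# output read on stdin, skipping the summary line for DIR itself.
# Usage: du -k -d 1 DIR | largest_dirs N DIR
largest_dirs() {
    awk -v top="$2" '$2 != top' | sort -rn | head -n "$1"
}
```

Given the sizes above, `du -k -d 1 /jails/dev-ingress01/jails/freshports/usr/ports | largest_dirs 5 /jails/dev-ingress01/jails/freshports/usr/ports` would put distfiles first.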

The drives in the system

After the system booted up, I found this:

```
[slocum dan ~] % sysctl kern.disks
kern.disks: da11 da10 da9 da8 da7 da6 da5 da4 da3 da2 da1 da0 ada1 ada0 nvd2 nvd1 nvd0
[slocum dan ~] % gpart show nvd0 nvd1 nvd2
=>        40  488397088  nvd0  GPT  (233G)
          40  488397088     1  freebsd-zfs  (233G)

gpart: No such geom: nvd1.
[slocum dan ~] % gpart show nvd2
gpart: No such geom: nvd2.
[slocum dan ~] %
```

This means neither nvd1 nor nvd2 has any partitions.

What does dmesg have to say about this?

```
[slocum dan ~] % egrep -e 'nvd[0,1,2]' /var/run/dmesg.boot
nvd0: <WDC WDS250G2B0C-00PXH0> NVMe namespace
nvd0: 238475MB (488397168 512 byte sectors)
nvd1: <BPXP> NVMe namespace
nvd1: 228936MB (468862128 512 byte sectors)
nvd2: <Samsung SSD 980 PRO with Heatsink 1TB> NVMe namespace
nvd2: 953869MB (1953525168 512 byte sectors)
```

The new drive is nvd2. So why does nvd1 have no partitions? It’s part of the existing (and cleverly named) nvd zpool, which refers to it through its diskid label rather than as nvd1. See my recent post about my rediscovery of that situation.
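When several similar drives are installed, it helps to reduce those dmesg lines to a simple device-to-model table. A sketch, assuming the `nvdN: <model> NVMe namespace` format shown above; `nvd_models` is a name I made up for illustration.

```shell
# Hypothetical helper: turn dmesg lines such as
#   nvd2: <Samsung SSD 980 PRO with Heatsink 1TB> NVMe namespace
# into "nvd2 Samsung SSD 980 PRO with Heatsink 1TB". Size lines are ignored.
nvd_models() {
    sed -n 's/^\(nvd[0-9][0-9]*\): <\(.*\)> NVMe namespace.*/\1 \2/p'
}
```

Usage: `nvd_models < /var/run/dmesg.boot`.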

smartctl

Let’s look at the drive using smartctl:

```
[slocum dan ~] % sudo smartctl -a /dev/nvme2
smartctl 7.3 2022-02-28 r5338 [FreeBSD 13.1-RELEASE-p2 amd64] (local build)
Copyright (C) 2002-22, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number:                       Samsung SSD 980 PRO with Heatsink 1TB
Serial Number:                      [redacted]
Firmware Version:                   4B2QGXA7
PCI Vendor/Subsystem ID:            0x144d
IEEE OUI Identifier:                0x002538
Total NVM Capacity:                 1,000,204,886,016 [1.00 TB]
Unallocated NVM Capacity:           0
Controller ID:                      6
NVMe Version:                       1.3
Number of Namespaces:               1
Namespace 1 Size/Capacity:          1,000,204,886,016 [1.00 TB]
Namespace 1 Utilization:            40,960 [40.9 KB]
Namespace 1 Formatted LBA Size:     512
Namespace 1 IEEE EUI-64:            002538 b22140998d
Local Time is:                      Tue Oct 18 13:20:37 2022 UTC
Firmware Updates (0x16):            3 Slots, no Reset required
Optional Admin Commands (0x0017):   Security Format Frmw_DL Self_Test
Optional NVM Commands (0x0057):     Comp Wr_Unc DS_Mngmt Sav/Sel_Feat Timestmp
Log Page Attributes (0x0f):         S/H_per_NS Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg
Maximum Data Transfer Size:         128 Pages
Warning Comp. Temp. Threshold:      82 Celsius
Critical Comp. Temp. Threshold:     85 Celsius

Supported Power States
St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +     8.49W       -        -    0  0  0  0        0       0
 1 +     4.48W       -        -    1  1  1  1        0     200
 2 +     3.18W       -        -    2  2  2  2        0    1000
 3 -   0.0400W       -        -    3  3  3  3     2000    1200
 4 -   0.0050W       -        -    4  4  4  4      500    9500

Supported LBA Sizes (NSID 0x1)
Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         0

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning:                   0x00
Temperature:                        41 Celsius
Available Spare:                    100%
Available Spare Threshold:          10%
Percentage Used:                    0%
Data Units Read:                    12 [6.14 MB]
Data Units Written:                 2 [1.02 MB]
Host Read Commands:                 1,619
Host Write Commands:                120
Controller Busy Time:               0
Power Cycles:                       1
Power On Hours:                     1
Unsafe Shutdowns:                   0
Media and Data Integrity Errors:    0
Error Information Log Entries:      0
Warning Comp. Temperature Time:     0
Critical Comp. Temperature Time:    0
Temperature Sensor 1:               41 Celsius
Temperature Sensor 2:               46 Celsius

Error Information (NVMe Log 0x01, 16 of 64 entries)
No Errors Logged
```

Partitioning the new drive

This is similar to previous posts:

- create a partitioning scheme

- add a ZFS partition

- tell zfs to replace one drive with another (i.e. resilver)

Let’s get started by creating the partitioning scheme.

```
[slocum dan ~] % sudo gpart create -s gpt nvd2
nvd2 created
[slocum dan ~] % gpart show nvd2
=>        40  1953525088  nvd2  GPT  (932G)
          40  1953525088        - free -  (932G)

[slocum dan ~] %
```

Then create a ZFS partition:

```
[slocum dan ~] % sudo gpart add -t freebsd-zfs -a 4K nvd2
nvd2p1 added
[slocum dan ~] % gpart show nvd2
=>        40  1953525088  nvd2  GPT  (932G)
          40  1953525088     1  freebsd-zfs  (932G)

[slocum dan ~] %
```

I want to point out that this goes against my own advice: don’t give ZFS your entire drive; hold back a few MB.

Let’s fix that. Through trial and error, because I didn’t do the correct math, I came up with this:

```
[slocum dan ~] % sudo gpart delete -i 1 nvd2
nvd2p1 deleted
[slocum dan ~] % sudo gpart add -t freebsd-zfs -a 4K -s 953850M -l Drive_Serial_Number nvd2
nvd2p1 added
[slocum dan ~] % gpart show nvd2
=>         40  1953525088  nvd2  GPT  (932G)
           40  1953484800     1  freebsd-zfs  (931G)
   1953484840       40288        - free -  (20M)

[slocum dan ~] %
```

That unused 20MB is my insurance plan. Should I need to replace this with another 1TB drive, it can be short by up to 20MB.

Is this where someone tells me I should have chosen a specific size because this size will cause some problem?
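Here’s the arithmetic behind that layout, sketched so the size can be computed rather than found by trial and error. It assumes 512-byte sectors (what dmesg reports for these drives), that gpart’s `M` suffix means MiB, and a shell with 64-bit `$(( ))` arithmetic. The function names are illustrative, not part of any tool.

```shell
# Sector arithmetic for the gpart layout above (illustrative helpers).
SECTOR_BYTES=512
MIB=1048576

# Sectors occupied by a partition of $1 MiB.
mib_to_sectors() {
    echo $(( $1 * MIB / SECTOR_BYTES ))
}

# Sectors left free when a partition of $2 sectors sits in a GPT whose
# usable area is $1 sectors (the size on gpart's "=>" line).
leftover_sectors() {
    echo $(( $1 - $2 ))
}

# Largest whole-MiB partition that still leaves at least $2 MiB free
# in a usable area of $1 sectors.
max_partition_mib() {
    echo $(( $1 * SECTOR_BYTES / MIB - $2 ))
}
```

With the values from `gpart show` above, `mib_to_sectors 953850` gives 1953484800 and `leftover_sectors 1953525088 1953484800` gives 40288 sectors: 40288 × 512 = 20,627,456 bytes, about 19.7 MiB, which gpart displays as 20M. `max_partition_mib 1953525088 20` gives 953849, the size that leaves a full 20 MiB.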

I also supplied the drive serial number as the GPT label. This is useful when replacing drives because it helps you identify which drive to remove. I should also put a physical label on the drive, because I’m not sure the serial number is printed on it. You can view the GPT label with this command:

```
[slocum dan ~] % gpart show -l nvd2
=>         40  1953525088  nvd2  GPT  (932G)
           40  1953484800     1  Drive_Serial_Number  (931G)
   1953484840       40288        - free -  (20M)

[slocum dan ~] %
```
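Copying a serial number by hand invites typos. A sketch that reads `smartctl -i` output, pulls out the Serial Number field, and prints (but does not run) the gpart command used above with that serial as the label. The size and flags are copied from that command; `gpart_add_cmd` is a hypothetical name, and the parsing assumes the `Serial Number:` line format shown in the smartctl output earlier.

```shell
# Hypothetical helper: read `smartctl -i` (or -a) output on stdin, extract
# the "Serial Number:" value, and print, without running it, a gpart
# command that uses the serial as the GPT label for device $1.
gpart_add_cmd() {
    serial=$(awk 'BEGIN { FS = ":[ \t]*" }
                  /^Serial Number:/ { sub(/[ \t]+$/, "", $2); print $2; exit }')
    if [ -z "$serial" ]; then
        echo "gpart_add_cmd: no Serial Number line found" >&2
        return 1
    fi
    echo "gpart add -t freebsd-zfs -a 4K -s 953850M -l $serial $1"
}
```

Usage: `sudo smartctl -i /dev/nvme2 | gpart_add_cmd nvd2`, then review the printed command before running it with sudo.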

Next, add this drive into the zpool. That deserves a separate section.

Adding the drive into the zpool

Why did I add the new drive rather than first removing the old one? Safety. When you can, it is best to do it this way: it keeps the existing zpool in optimal condition. If I had removed one of the drives and just swapped in the new one, the zpool would have been degraded until the resilver finished. With this approach, that never happens.

This command always gives me grief; it’s the commands I use so rarely that do. After issuing the following command, I immediately ran zpool status, just to see what was happening.

```
[slocum dan ~] % sudo zpool replace nvd diskid/DISK-C26D07890DC400015192p1 nvd2p1
```

Here are the arguments to zpool replace:

- nvd – the name of the zpool

- diskid/DISK-C26D07890DC400015192p1 – the vdev which is leaving the zpool

- nvd2p1 – the vdev which is coming into the zpool

```
[slocum dan ~] % zpool status nvd
  pool: nvd
 state: ONLINE
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Tue Oct 18 13:37:34 2022
        16.2G scanned at 1.48G/s, 6.76M issued at 629K/s, 152G total
        8.22M resilvered, 0.00% done, no estimated completion time
config:

        NAME                                      STATE     READ WRITE CKSUM
        nvd                                       ONLINE       0     0     0
          mirror-0                                ONLINE       0     0     0
            nvd0p1                                ONLINE       0     0     0
            replacing-1                           ONLINE       0     0     0
              diskid/DISK-C26D07890DC400015192p1  ONLINE       0     0     0
              nvd2p1                              ONLINE       0     0     0  (resilvering)

errors: No known data errors
[slocum dan ~] %
```

Checking in on progress:

```
[slocum dan ~] % zpool status nvd
  pool: nvd
 state: ONLINE
  scan: resilvered 154G in 00:03:36 with 0 errors on Tue Oct 18 13:41:10 2022
config:

        NAME        STATE     READ WRITE CKSUM
        nvd         ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            nvd0p1  ONLINE       0     0     0
            nvd2p1  ONLINE       0     0     0

errors: No known data errors
[slocum dan ~] %
```

It’s done. In the time it took me to type the above, it finished.
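This resilver was quick, but on a bigger pool it helps to know when it has finished without re-reading zpool status by eye. A sketch that classifies the `scan:` line; the phrases it matches come from the output above and are an assumption about other OpenZFS versions. OpenZFS 2.x also documents `zpool wait -t resilver POOL`, which blocks until resilvering completes; check the zpool-wait man page on your system before relying on it.

```shell
# Hypothetical helper: classify the "scan:" line of `zpool status POOL`
# output read on stdin. Prints "resilvering", "resilvered", or "other".
resilver_state() {
    awk '/^[ \t]*scan:/ {
             if ($0 ~ /resilver in progress/) state = "resilvering"
             else if ($0 ~ /resilvered/)      state = "resilvered"
         }
         END { if (state == "") state = "other"; print state }'
}

# Example polling loop (not run here):
#   while [ "$(zpool status nvd | resilver_state)" = resilvering ]; do
#       sleep 30
#   done
```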

Next, I repeat the process with the second drive. That will be later today, perhaps at lunch.

Over lunch: which drive to pull?

I have to pull nvd1 from the nvd zpool. Which one is that? Based on the dmesg shown earlier, I know it’s the BPXP drive, and not the WDC drive. If I didn’t have brand / model names to distinguish them, I’d hope serial numbers were visible. Or perhaps this might help:

```
[slocum dan ~] % pciconf -lv
...
nvme0@pci0:2:0:0:  class=0x010802 rev=0x01 hdr=0x00 vendor=0x15b7 device=0x5019 subvendor=0x15b7 subdevice=0x5019
    vendor     = 'Sandisk Corp'
    class      = mass storage
    subclass   = NVM
...
nvme1@pci0:5:0:0:  class=0x010802 rev=0x01 hdr=0x00 vendor=0x1987 device=0x5012 subvendor=0x1987 subdevice=0x5012
    vendor     = 'Phison Electronics Corporation'
    device     = 'E12 NVMe Controller'
    class      = mass storage
    subclass   = NVM
nvme2@pci0:6:0:0:  class=0x010802 rev=0x00 hdr=0x00 vendor=0x144d device=0xa80a subvendor=0x144d subdevice=0xa801
    vendor     = 'Samsung Electronics Co Ltd'
    device     = 'NVMe SSD Controller PM9A1/PM9A3/980PRO'
    class      = mass storage
    subclass   = NVM
```

Not all output is shown. But I can see that the drive I want to remove is in slot 5, and not slots 2 or 6.

Let’s go see if that’s right.

No, it wasn’t. Those numbers don’t match up with the physical slots. In pciconf’s selector (pci<domain>:<bus>:<device>:<function>), the 5 in pci0:5:0:0 is the PCI bus number, and bus numbering need not follow the physical slot order.
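The pciconf listing can at least be trimmed down to which controller is which. It won’t reveal the physical slot, as I found out, but it narrows things down. A sketch, assuming the `pciconf -lv` layout shown above (a device line starting with `nvmeN@`, followed by indented `vendor = '...'` lines); `nvme_pci_summary` is a hypothetical name.

```shell
# Hypothetical helper: summarise `pciconf -lv` output read on stdin as
# "nvmeN selector vendor" lines, one per nvme controller.
nvme_pci_summary() {
    awk -v q="'" '
        /^nvme[0-9]+@/ {
            split($1, a, "@")       # a[1] = "nvme1", a[2] = "pci0:5:0:0:"
            dev = a[1]; sel = a[2]
            sub(/:$/, "", sel)      # drop the trailing colon
            want = 1
            next
        }
        /^[^ \t]/ { want = 0 }      # some other device line: stop looking
        want && /vendor[ \t]*=/ {
            v = $0
            sub(/^[^=]*=[ \t]*/, "", v)   # strip the "vendor =" prefix
            gsub(q, "", v)                # strip the surrounding quotes
            print dev, sel, v
            want = 0
        }
    '
}
```

Usage: `pciconf -lv | nvme_pci_summary`.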

I did find the BPXP drive. The Samsung drive would not fit; the screw was not long enough.

When I removed the WDC drive, I noticed the elastic bands that held the heatsink against the drive had broken. Fortunately, the adhesive was still doing its job and the heatsink had not fallen off, possibly onto the other card and causing a short.

The Samsung was mounted on the other card, the one with the WDC drive. The WDC drive was moved to the card which had contained the BPXP drive. In the photo, the mounting screw for the BPXP drive is not yet installed. I did attach it before installing the card in the server.

The broken elastics are at the bottom right of the photo.

All in.

After the install

After the system booted up, I found this:

```
[slocum dan ~] % egrep -e 'nvd[0,1,2]' /var/run/dmesg.boot
nvd0: <WDC WDS250G2B0C-00PXH0> NVMe namespace
nvd0: 238475MB (488397168 512 byte sectors)
nvd1: <Samsung SSD 980 PRO with Heatsink 1TB> NVMe namespace
nvd1: 953869MB (1953525168 512 byte sectors)
nvd2: <Samsung SSD 980 PRO with Heatsink 1TB> NVMe namespace
nvd2: 953869MB (1953525168 512 byte sectors)
[slocum dan ~] % gpart show nvd1
gpart: No such geom: nvd1.
[slocum dan ~] % gpart show nvd2
=>         40  1953525088  nvd2  GPT  (932G)
           40  1953484800     1  freebsd-zfs  (931G)
   1953484840       40288        - free -  (20M)
[slocum dan ~] % zpool status nvd
  pool: nvd
 state: ONLINE
  scan: resilvered 154G in 00:03:36 with 0 errors on Tue Oct 18 13:41:10 2022
config:

        NAME        STATE     READ WRITE CKSUM
        nvd         ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            nvd0p1  ONLINE       0     0     0
            nvd2p1  ONLINE       0     0     0

errors: No known data errors
[slocum dan ~] %
```

Some notes:

- The nvd1 lines from dmesg show the new drive.

- gpart: No such geom: nvd1. confirms it has no partitions.

- state: ONLINE confirms the zpool is still OK and I did not remove the wrong drive.

Prepare the new drive

I do exactly what I did for the other drive.

For the record: like the first one, this does not appear to be a used drive. The Host Read Commands and Host Write Commands values are both low. I have no doubt someone knows how to zero those.

```
[slocum dan ~] % sudo smartctl -a /dev/nvme1
smartctl 7.3 2022-02-28 r5338 [FreeBSD 13.1-RELEASE-p2 amd64] (local build)
Copyright (C) 2002-22, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number:                       Samsung SSD 980 PRO with Heatsink 1TB
Serial Number:                      [redacted]
Firmware Version:                   4B2QGXA7
PCI Vendor/Subsystem ID:            0x144d
IEEE OUI Identifier:                0x002538
Total NVM Capacity:                 1,000,204,886,016 [1.00 TB]
Unallocated NVM Capacity:           0
Controller ID:                      6
NVMe Version:                       1.3
Number of Namespaces:               1
Namespace 1 Size/Capacity:          1,000,204,886,016 [1.00 TB]
Namespace 1 Utilization:            0
Namespace 1 Formatted LBA Size:     512
Namespace 1 IEEE EUI-64:            002538 b221409d56
Local Time is:                      Tue Oct 18 17:35:26 2022 UTC
Firmware Updates (0x16):            3 Slots, no Reset required
Optional Admin Commands (0x0017):   Security Format Frmw_DL Self_Test
Optional NVM Commands (0x0057):     Comp Wr_Unc DS_Mngmt Sav/Sel_Feat Timestmp
Log Page Attributes (0x0f):         S/H_per_NS Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg
Maximum Data Transfer Size:         128 Pages
Warning Comp. Temp. Threshold:      82 Celsius
Critical Comp. Temp. Threshold:     85 Celsius

Supported Power States
St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +     8.49W       -        -    0  0  0  0        0       0
 1 +     4.48W       -        -    1  1  1  1        0     200
 2 +     3.18W       -        -    2  2  2  2        0    1000
 3 -   0.0400W       -        -    3  3  3  3     2000    1200
 4 -   0.0050W       -        -    4  4  4  4      500    9500

Supported LBA Sizes (NSID 0x1)
Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         0

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning:                   0x00
Temperature:                        40 Celsius
Available Spare:                    100%
Available Spare Threshold:          10%
Percentage Used:                    0%
Data Units Read:                    8 [4.09 MB]
Data Units Written:                 0
Host Read Commands:                 5,694
Host Write Commands:                0
Controller Busy Time:               0
Power Cycles:                       2
Power On Hours:                     0
Unsafe Shutdowns:                   0
Media and Data Integrity Errors:    0
Error Information Log Entries:      0
Warning Comp. Temperature Time:     0
Critical Comp. Temperature Time:    0
Temperature Sensor 1:               40 Celsius
Temperature Sensor 2:               44 Celsius

Error Information (NVMe Log 0x01, 16 of 64 entries)
No Errors Logged
[slocum dan ~] %
```
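To compare the two drives’ usage counters side by side, only a handful of smartctl fields matter. A sketch that filters `smartctl -a` output down to them; the field list is my own choice of what’s useful, and the parsing assumes the `Field: value` layout shown above. `smart_wear` is a hypothetical name.

```shell
# Hypothetical helper: keep only the usage/wear counters from NVMe
# `smartctl -a` output read on stdin, printed as "Field: value".
smart_wear() {
    awk 'BEGIN { FS = ":[ \t]*" }
         $1 == "Percentage Used" || $1 == "Power On Hours" ||
         $1 == "Power Cycles" || $1 == "Data Units Written" ||
         $1 == "Host Read Commands" || $1 == "Host Write Commands" {
             print $1 ": " $2
         }'
}
```

Usage: `sudo smartctl -a /dev/nvme1 | smart_wear`, and the same for `/dev/nvme2`.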

Configuring the drive:

```
[slocum dan ~] % sudo gpart create -s gpt nvd1
nvd1 created
[slocum dan ~] % sudo gpart add -t freebsd-zfs -a 4K -s 953850M -l Drive_Serial_Number nvd1
nvd1p1 added
[slocum dan ~] % gpart show nvd1
=>         40  1953525088  nvd1  GPT  (932G)
           40  1953484800     1  freebsd-zfs  (931G)
   1953484840       40288        - free -  (20M)

[slocum dan ~] % gpart show -l nvd1
=>         40  1953525088  nvd1  GPT  (932G)
           40  1953484800     1  Drive_Serial_Number  (931G)
   1953484840       40288        - free -  (20M)

[slocum dan ~] %
```

Next, replace the existing vdev with the new vdev:

```
[slocum dan ~] % sudo zpool replace nvd nvd0p1 nvd1p1
[slocum dan ~] % zpool status nvd
  pool: nvd
 state: ONLINE
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Tue Oct 18 17:42:38 2022
        18.6G scanned at 1.55G/s, 6.88M issued at 587K/s, 153G total
        8.22M resilvered, 0.00% done, no estimated completion time
config:

        NAME              STATE     READ WRITE CKSUM
        nvd               ONLINE       0     0     0
          mirror-0        ONLINE       0     0     0
            replacing-0   ONLINE       0     0     0
              nvd0p1      ONLINE       0     0     0
              nvd1p1      ONLINE       0     0     0  (resilvering)
            nvd2p1        ONLINE       0     0     0

errors: No known data errors
```

Not long after, we’re done:

```
[slocum dan ~] % zpool status nvd
  pool: nvd
 state: ONLINE
  scan: resilvered 154G in 00:02:25 with 0 errors on Tue Oct 18 17:45:03 2022
config:

        NAME        STATE     READ WRITE CKSUM
        nvd         ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            nvd1p1  ONLINE       0     0     0
            nvd2p1  ONLINE       0     0     0

errors: No known data errors
[slocum dan ~] %
```

But where’s my space?

```
[slocum dan ~] % zpool list nvd
NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
nvd    222G   153G  69.5G        -        8G    45%    68%  1.00x  ONLINE  -
```

I recalled there was an auto property which expands the zpool once all the drives have been swapped. What was it called?

```
[slocum dan ~] % zpool get all nvd | grep auto
nvd   autoreplace   off   default
nvd   autoexpand    off   default
nvd   autotrim      off   default
```

Ahh, autoexpand. And it’s off.

How do I fix this now? I found this reference which showed me the way.

```
[slocum dan ~] % zpool status
  pool: nvd
 state: ONLINE
  scan: resilvered 154G in 00:02:25 with 0 errors on Tue Oct 18 17:45:03 2022
config:

        NAME        STATE     READ WRITE CKSUM
        nvd         ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            nvd1p1  ONLINE       0     0     0
            nvd2p1  ONLINE       0     0     0
[slocum dan ~] % sudo zpool set autoexpand=on nvd
[slocum dan ~] % sudo zpool online -e nvd nvd1p1
[slocum dan ~] % sudo zpool online -e nvd nvd2p1
[slocum dan ~] % zpool list nvd
NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
nvd    930G   153G   777G        -         -    10%    16%  1.00x  ONLINE  -
[slocum dan ~] %
```

BOOM. The drives are brought online (not that they were ever offline), and the -e option says to “Expand the device to use all available space”. The pool grew from 222G to 930G. Done.
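With only two vdevs, typing `zpool online -e` twice is fine. For larger pools, here’s a sketch that reads `zpool status` and prints (but does not run) one command per leaf device. The rule for picking leaf devices is my own heuristic, not something from the zpool documentation: it skips the pool row and grouping rows such as mirror-0, raidz1-0, and replacing-1. Setting `autoexpand=on` before starting the replacements should make this step unnecessary; see the autoexpand entry in the zpool properties documentation. `online_expand_cmds` is a hypothetical name.

```shell
# Hypothetical helper: read `zpool status POOL` on stdin and print one
# `zpool online -e POOL DEVICE` command per leaf device in the config.
online_expand_cmds() {
    awk '
        /^[ \t]*pool:/ { pool = $2 }
        /^[ \t]*NAME[ \t]+STATE/ { inconfig = 1; next }
        /^[ \t]*errors:/ { inconfig = 0 }
        inconfig && NF >= 2 && $2 ~ /^(ONLINE|DEGRADED|FAULTED|OFFLINE|UNAVAIL|REMOVED)$/ {
            if ($1 == pool) next                                         # the pool row
            if ($1 ~ /^(mirror|raidz[0-9]*|replacing|spare)-[0-9]+$/) next   # grouping rows
            print "zpool online -e " pool " " $1
        }
    '
}
```

Usage: `zpool status nvd | online_expand_cmds`, then review the printed commands and run them with sudo.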

Here is the full list you never wanted to see:

```
[slocum dan ~] % zfs list -r nvd
NAME  USED  AVAIL  REFER  MOUNTPOINT
nvd  153G  748G  23K  none
nvd/freshports  152G  748G  23K  none
nvd/freshports/dan  38.9M  748G  38.9M  none
nvd/freshports/dev  5.10G  748G  23K  /jails/dev-ingress01/var/db/freshports
nvd/freshports/dev-nginx01  98.8M  748G  24K  none
nvd/freshports/dev-nginx01/var  98.7M  748G  24K  none
nvd/freshports/dev-nginx01/var/db  98.7M  748G  24K  none
nvd/freshports/dev-nginx01/var/db/freshports  98.7M  748G  24K  none
nvd/freshports/dev-nginx01/var/db/freshports/cache  98.7M  748G  24K  /var/db/freshports/cache
nvd/freshports/dev-nginx01/var/db/freshports/cache/categories  56K  748G  41K  /var/db/freshports/cache/categories
nvd/freshports/dev-nginx01/var/db/freshports/cache/commits  88.4M  748G  88.4M  /var/db/freshports/cache/commits
nvd/freshports/dev-nginx01/var/db/freshports/cache/daily  234K  748G  219K  /var/db/freshports/cache/daily
nvd/freshports/dev-nginx01/var/db/freshports/cache/general  9.23M  748G  9.22M  /var/db/freshports/cache/general
nvd/freshports/dev-nginx01/var/db/freshports/cache/news  299K  748G  284K  /var/db/freshports/cache/news
nvd/freshports/dev-nginx01/var/db/freshports/cache/packages  38K  748G  24K  /var/db/freshports/cache/packages
nvd/freshports/dev-nginx01/var/db/freshports/cache/pages  38K  748G  24K  /var/db/freshports/cache/pages
nvd/freshports/dev-nginx01/var/db/freshports/cache/ports  249K  748G  234K  /var/db/freshports/cache/ports
nvd/freshports/dev-nginx01/var/db/freshports/cache/spooling  63K  748G  37K  /var/db/freshports/cache/spooling
nvd/freshports/dev/cache  110K  748G  35.5K  /jails/dev-ingress01/var/db/freshports/cache
nvd/freshports/dev/cache/html  46K  748G  46K  /jails/dev-ingress01/var/db/freshports/cache/html
nvd/freshports/dev/cache/spooling  28K  748G  28K  /jails/dev-ingress01/var/db/freshports/cache/spooling
nvd/freshports/dev/ingress  5.08G  748G  23K  /jails/dev-ingress01/var/db/ingress
nvd/freshports/dev/ingress/message-queues  1.73M  748G  1.33M  /jails/dev-ingress01/var/db/ingress/message-queues
nvd/freshports/dev/ingress/repos  5.08G  748G  2.28G  /jails/dev-ingress01/var/db/ingress/repos
nvd/freshports/dev/ingress/repos/src  2.80G  748G  2.80G  /jails/dev-ingress01/var/db/ingress/repos/src
nvd/freshports/dev/message-queues  16.8M  748G  16.0M  /jails/dev-ingress01/var/db/freshports/message-queues
nvd/freshports/jailed  134G  748G  24K  none
nvd/freshports/jailed/dev-ingress01  44.6G  748G  24K  none
nvd/freshports/jailed/dev-ingress01/jails  44.3G  748G  24K  /jails
nvd/freshports/jailed/dev-ingress01/jails/freshports  44.3G  748G  44.3G  /jails/freshports
nvd/freshports/jailed/dev-ingress01/mkjail  340M  748G  340M  /var/db/mkjail
nvd/freshports/jailed/stage-ingress01  44.6G  748G  24K  none
nvd/freshports/jailed/stage-ingress01/jails  44.3G  748G  24K  /jails
nvd/freshports/jailed/stage-ingress01/jails/freshports  44.3G  748G  44.3G  /jails/freshports
nvd/freshports/jailed/stage-ingress01/mkjail  340M  748G  340M  /var/db/mkjail
nvd/freshports/jailed/test-ingress01  44.6G  748G  24K  none
nvd/freshports/jailed/test-ingress01/jails  44.3G  748G  24K  /jails
nvd/freshports/jailed/test-ingress01/jails/freshports  44.3G  748G  44.3G  /jails/freshports
nvd/freshports/jailed/test-ingress01/mkjail  340M  748G  340M  /var/db/mkjail
nvd/freshports/mobile-nginx01  462K  748G  24K  none
nvd/freshports/mobile-nginx01/var  438K  748G  24K  none
nvd/freshports/mobile-nginx01/var/db  414K  748G  24K  none
nvd/freshports/mobile-nginx01/var/db/freshports  390K  748G  24K  none
nvd/freshports/mobile-nginx01/var/db/freshports/cache  366K  748G  24K  /var/db/freshports/cache
nvd/freshports/mobile-nginx01/var/db/freshports/cache/categories  38K  748G  24K  /var/db/freshports/cache/categories
nvd/freshports/mobile-nginx01/var/db/freshports/cache/commits  38K  748G  24K  /var/db/freshports/cache/commits
nvd/freshports/mobile-nginx01/var/db/freshports/cache/daily  38K  748G  24K  /var/db/freshports/cache/daily
nvd/freshports/mobile-nginx01/var/db/freshports/cache/general  38K  748G  24K  /var/db/freshports/cache/general
nvd/freshports/mobile-nginx01/var/db/freshports/cache/news  38K  748G  24K  /var/db/freshports/cache/news
nvd/freshports/mobile-nginx01/var/db/freshports/cache/packages  38K  748G  24K  /var/db/freshports/cache/packages
nvd/freshports/mobile-nginx01/var/db/freshports/cache/pages  38K  748G  24K  /var/db/freshports/cache/pages
nvd/freshports/mobile-nginx01/var/db/freshports/cache/ports  38K  748G  24K  /var/db/freshports/cache/ports
nvd/freshports/mobile-nginx01/var/db/freshports/cache/spooling  38K  748G  24K  /var/db/freshports/cache/spooling
nvd/freshports/stage-ingress01  5.14G  748G  24K  none
nvd/freshports/stage-ingress01/var  5.14G  748G  24K  none
nvd/freshports/stage-ingress01/var/db  5.14G  748G  24K  none
nvd/freshports/stage-ingress01/var/db/freshports  14.8M  748G  24K  /jails/stage-ingress01/var/db/freshports
nvd/freshports/stage-ingress01/var/db/freshports/cache  144K  748G  24K  /jails/stage-ingress01/var/db/freshports/cache
nvd/freshports/stage-ingress01/var/db/freshports/cache/html  58K  748G  43K  /jails/stage-ingress01/var/db/freshports/cache/html
nvd/freshports/stage-ingress01/var/db/freshports/cache/spooling  61.5K  748G  61.5K  /jails/stage-ingress01/var/db/freshports/cache/spooling
nvd/freshports/stage-ingress01/var/db/freshports/message-queues  14.6M  748G  13.8M  /jails/stage-ingress01/var/db/freshports/message-queues
nvd/freshports/stage-ingress01/var/db/ingress  5.13G  748G  24K  /jails/stage-ingress01/var/db/ingress
nvd/freshports/stage-ingress01/var/db/ingress/message-queues  1.32M  748G  961K  /jails/stage-ingress01/var/db/ingress/message-queues
nvd/freshports/stage-ingress01/var/db/ingress/repos  5.13G  748G  5.12G  /jails/stage-ingress01/var/db/ingress/repos
nvd/freshports/stage-nginx01  96.9M  748G  24K  none
nvd/freshports/stage-nginx01/var  96.9M  748G  24K  none
nvd/freshports/stage-nginx01/var/db  96.9M  748G  24K  none
nvd/freshports/stage-nginx01/var/db/freshports  96.8M  748G  24K  none
nvd/freshports/stage-nginx01/var/db/freshports/cache  96.8M  748G  24K  /var/db/freshports/cache
nvd/freshports/stage-nginx01/var/db/freshports/cache/categories  6.40M  748G  6.39M  /var/db/freshports/cache/categories
nvd/freshports/stage-nginx01/var/db/freshports/cache/commits  38.4M  748G  38.4M  /var/db/freshports/cache/commits
nvd/freshports/stage-nginx01/var/db/freshports/cache/daily  1.82M  748G  1.80M  /var/db/freshports/cache/daily
nvd/freshports/stage-nginx01/var/db/freshports/cache/general  10.5M  748G  10.4M  /var/db/freshports/cache/general
nvd/freshports/stage-nginx01/var/db/freshports/cache/news  397K  748G  382K  /var/db/freshports/cache/news
nvd/freshports/stage-nginx01/var/db/freshports/cache/packages  38K  748G  24K  /var/db/freshports/cache/packages
nvd/freshports/stage-nginx01/var/db/freshports/cache/pages  38K  748G  24K  /var/db/freshports/cache/pages
nvd/freshports/stage-nginx01/var/db/freshports/cache/ports  39.1M  748G  39.1M  /var/db/freshports/cache/ports
nvd/freshports/stage-nginx01/var/db/freshports/cache/spooling  66K  748G  41K  /var/db/freshports/cache/spooling
nvd/freshports/test-ingress01  8.01G  748G  24K  none
nvd/freshports/test-ingress01/var  8.01G  748G  24K  none
nvd/freshports/test-ingress01/var/db  8.01G  748G  24K  none
nvd/freshports/test-ingress01/var/db/freshports  6.74M  748G  24K  none
nvd/freshports/test-ingress01/var/db/freshports/cache  132K  748G  24K  /jails/test-ingress01/var/db/freshports/cache
nvd/freshports/test-ingress01/var/db/freshports/cache/html  48K  748G  48K  /jails/test-ingress01/var/db/freshports/cache/html
nvd/freshports/test-ingress01/var/db/freshports/cache/spooling  60.5K  748G  60.5K  /jails/test-ingress01/var/db/freshports/cache/spooling
nvd/freshports/test-ingress01/var/db/freshports/message-queues  6.59M  748G  5.80M  /jails/test-ingress01/var/db/freshports/message-queues
nvd/freshports/test-ingress01/var/db/ingress  8.00G  748G  24K  /jails/test-ingress01/var/db/ingress
nvd/freshports/test-ingress01/var/db/ingress/message-queues  1.34M  748G  962K  /jails/test-ingress01/var/db/ingress/message-queues
nvd/freshports/test-ingress01/var/db/ingress/repos  8.00G  748G  5.10G  /jails/test-ingress01/var/db/ingress/repos
nvd/freshports/test-nginx01  33.6M  748G  24K  none
nvd/freshports/test-nginx01/var  33.6M  748G  24K  none
nvd/freshports/test-nginx01/var/db  33.6M  748G  24K  none
nvd/freshports/test-nginx01/var/db/freshports  33.6M  748G  24K  none
nvd/freshports/test-nginx01/var/db/freshports/cache  33.5M  748G  24K  /var/db/freshports/cache
nvd/freshports/test-nginx01/var/db/freshports/cache/categories  360K  748G  345K  /var/db/freshports/cache/categories
nvd/freshports/test-nginx01/var/db/freshports/cache/commits  21.2M  748G  21.2M  /var/db/freshports/cache/commits
nvd/freshports/test-nginx01/var/db/freshports/cache/daily  5.01M  748G  4.99M  /var/db/freshports/cache/daily
nvd/freshports/test-nginx01/var/db/freshports/cache/general  3.82M  748G  3.79M  /var/db/freshports/cache/general
nvd/freshports/test-nginx01/var/db/freshports/cache/news  300K  748G  285K  /var/db/freshports/cache/news
nvd/freshports/test-nginx01/var/db/freshports/cache/packages  38K  748G  24K  /var/db/freshports/cache/packages
nvd/freshports/test-nginx01/var/db/freshports/cache/pages  38K  748G  24K  /var/db/freshports/cache/pages
nvd/freshports/test-nginx01/var/db/freshports/cache/ports  2.72M  748G  2.71M  /var/db/freshports/cache/ports
nvd/freshports/test-nginx01/var/db/freshports/cache/spooling  64K  748G  39K  /var/db/freshports/cache/spooling
```

Thank you. I hope this helps.